In the first chapter, we study several classes of deterministic or stochastic control problems with discontinuous costs. Linear programming (LP) formulations are very useful in the study of long-run or infinite-horizon control problems. This is also the case for control problems with discontinuous costs whose dynamics are governed by a deterministic equation.

In Section 1.2, entitled "LP methods in deterministic control problems", we study control problems with discontinuous costs under state constraints ([G1]) and the linearization of control problems with classical costs, of $L^p$ or even $L^\infty$ type ([G9]). We also consider control problems for piecewise deterministic Markov processes and their applications to stochastic gene networks.

LP methods in control problems with Brownian dynamics

- Introduction

- Mayer stochastic control problems with discontinuous cost

- Adding stopping times

- Adding state constraints

- Abstract and linearized dynamic programming principles (DPP)

- Asymptotic values and uniform Tauberian results for nonexpansive

- Miscellaneous

We consider the stochastic control system under the following assumption: (i) the functions $b$ and $\sigma$ are bounded and uniformly continuous on $\mathbb{R}^N \times U$. Using the characterization of the set of constraints in the linear formulation, we prove a semigroup property. In the second part of the article [G19], we consider the relationship between the existence of the (uniform) limits lim.

LP methods in deterministic control problems

- Introduction

- Dynamic Programming Principles in classical control problems

- Linearization techniques and dynamic programming principles for $L^\infty$-

- Discontinuous control problems with state constraints : linear formu-

- Min-max control problems

- Viability results for singularly perturbed control systems

In the first part of the article [G9], we investigate further properties of the set of constraints that appear in classical (deterministic) control problems. Identifying the values of the primal and dual problems gives an alternative characterization of the value function in $L^\infty$ control problems. This dual formulation gives an intuitive idea of the relationship between the primal value function and the Hamilton-Jacobi equation associated with the control problem.

In the min-max setting, we consider the (convex, weak-* compact) sets of constraints associated with the dynamics of the two players and naturally define a common set of constraints. We give an affirmative answer to the question of the existence of a value and of saddle points. Using the semigroup properties of the sets of constraints, we provide a linear dynamic programming principle.

One advantage of transforming a nonlinear control problem into a linear optimization problem is that it makes approximation results for the value function available. Furthermore, when considering the ergodic control problem (see e.g. [BCD97]), the study of the behavior of the value function is simpler when this value is expressed through a linear problem. The main difficulties in our setting are the lack of convexity of the dynamics and the discontinuity of the costs.

These assumptions appear in the same form in [Son86b] and are necessary to derive the uniform continuity of the value function when the cost function is regular enough.
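The linearization idea described above can be illustrated on a toy problem. The sketch below is not the setting of the articles: it assumes a hypothetical discrete-time, finite-state problem with discount factor `beta`, deterministic dynamics `step` and an indicator-type (discontinuous) cost. It shows that the discounted occupation measure of a trajectory satisfies a linear balance constraint and that the discounted cost is a linear functional of that measure. All names (`occupation_measure`, `constraint_residual`, `step`, `policy`, `cost`) are illustrative assumptions.

```python
# Toy stand-in (an assumption, not the setting of the text): a discrete-time,
# finite-state problem with discount factor beta and deterministic dynamics.

def occupation_measure(step, policy, s0, beta, horizon=2000):
    """Discounted occupation measure of the trajectory started at s0.

    The time sum is truncated at `horizon`; the neglected mass is beta**horizon.
    """
    mu = {}
    s, weight = s0, 1.0 - beta
    for _ in range(horizon):
        a = policy(s)
        mu[(s, a)] = mu.get((s, a), 0.0) + weight
        s = step(s, a)
        weight *= beta
    return mu


def constraint_residual(mu, step, states, s0, beta):
    """Largest violation of the linear balance constraint
    mass(s') - beta * inflow(s') = (1 - beta) * 1{s' = s0}."""
    worst = 0.0
    for target in states:
        mass = sum(w for (s, a), w in mu.items() if s == target)
        inflow = sum(w for (s, a), w in mu.items() if step(s, a) == target)
        rhs = (1.0 - beta) if target == s0 else 0.0
        worst = max(worst, abs(mass - beta * inflow - rhs))
    return worst


def linear_cost(mu, cost):
    """Cost written as a linear functional of the occupation measure."""
    return sum(w * cost(s, a) for (s, a), w in mu.items())


def discounted_cost(step, policy, cost, s0, beta, horizon=2000):
    """Same cost computed directly along the trajectory."""
    total, s, weight = 0.0, s0, 1.0 - beta
    for _ in range(horizon):
        a = policy(s)
        total += weight * cost(s, a)
        s = step(s, a)
        weight *= beta
    return total


# Hypothetical example: five states on a cycle, actions -1, 0, +1.
STATES = range(5)

def step(s, a):
    return (s + a) % 5

def cost(s, a):
    return 0.0 if s == 0 else 1.0  # discontinuous (indicator) cost

def policy(s):
    return 0 if s == 0 else (-1 if s <= 2 else 1)

BETA, S0 = 0.9, 3
mu = occupation_measure(step, policy, S0, BETA)
print(linear_cost(mu, cost), discounted_cost(step, policy, cost, S0, BETA))
print(constraint_residual(mu, step, STATES, S0, BETA))
```

In this toy setting, minimizing the linear cost over all measures that satisfy the balance constraint is a finite-dimensional linear program; the continuous-time sets of constraints discussed in the text play the analogous role.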

Stochastic gene networks

- From biochemical reactions to PDMPs

- General On/Off Models

- Cook's model

- Cook's model with several regimes

- Bacteriophage

We assume standard conditions on the local characteristics (uniform continuity, boundedness and Lipschitz behavior in the state component). Let us just mention that an extensive literature is available on the theory of continuous or impulsive control for piecewise deterministic Markov processes. From the mathematical point of view, the system is given by a process $(X(t), D(t))$ on the state space $E = \mathbb{R} \times \{0, 1\}$.

The vector field for the $D$ component can be taken to be 0. Zero consumption should be expected whenever $X = 0$ and $D = 0$. The authors of [CGT98] distinguish several regimes (stable, slow unstable, fast unstable) that control the stage activation. To account for this, one must allow $k_a$ and/or $k_d$ to depend on an external control.
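To make the two-component process $(X(t), D(t))$ concrete, here is a minimal simulation sketch of an on/off gene model. It rests on assumptions that are not taken from the text: constant switching rates `k_a` (off to on) and `k_d` (on to off), and a flow `dx/dt = production * d - degradation * x` between jumps, which is solved in closed form. The function name and all parameter values are hypothetical.

```python
import math
import random


def simulate_on_off(x0, d0, k_a, k_d, production, degradation, t_end, rng):
    """Simulate a hypothetical on/off PDMP (X, D) on [0, t_end].

    Returns the list of (time, x, d) at time 0, after every jump of D,
    and at t_end. Rates are constant here; state-dependent rates would
    need a different sampling scheme (for instance thinning).
    """
    t, x, d = 0.0, x0, d0
    path = [(t, x, d)]
    while True:
        rate = k_a if d == 0 else k_d          # rate of leaving the current mode
        wait = rng.expovariate(rate)           # exponential waiting time
        remaining = t_end - t
        dt = min(wait, remaining)
        # Closed-form flow: x relaxes exponentially towards production*d/degradation.
        target = production * d / degradation
        x = target + (x - target) * math.exp(-degradation * dt)
        if wait >= remaining:                  # no further jump before t_end
            path.append((t_end, x, d))
            return path
        t += wait
        d = 1 - d                              # the gene switches on or off
        path.append((t, x, d))


rng = random.Random(0)
path = simulate_on_off(x0=0.0, d0=0, k_a=1.0, k_d=1.0,
                       production=2.0, degradation=1.0, t_end=20.0, rng=rng)
print(len(path), path[-1])
```

Making `k_a` or `k_d` depend on an external control, as suggested above, would amount to passing a control-dependent rate instead of a constant.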

The simplification proposed by the authors of [HPDC00] consists in considering a mutant system in which only two operator sites (known as OR2 and OR3) are present. The cI gene expresses the repressor (CI), which dimerizes and binds to DNA as a transcription factor at one of two available sites. Using the notation in [HPDC00], we let $X$ stand for the repressor, $X_2$ for the dimer, $D$ for the DNA promoter site, $DX_2$ for binding to the OR2 site, $DX_2^*$ for binding to the OR3 site, and $DX_2X_2$ for binding to both sites.

The concentration of RNA polymerase is denoted by P and the number of proteins per mRNA transcript by n.

Mathematical contributions

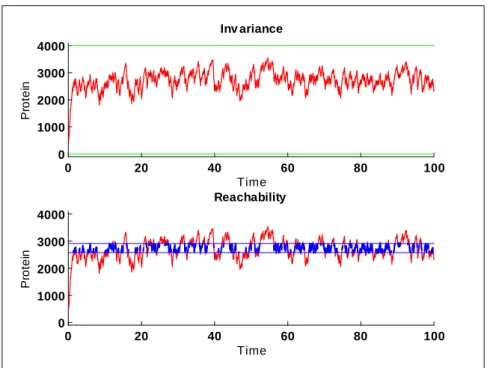

- Geometrical criteria for viability and invariance

- Linearization methods

- Reachability of open sets

- Zubov's approach to asymptotic stability

The first contribution is the following characterization of the near viability property with respect to the controlled piecewise deterministic Markov process. We have embedded the set of control processes into a set of probability measures via occupation measures. This set of constraints is given explicitly by a deterministic condition involving the coefficient functions.

The primal value function is defined with respect to the previously introduced set of constraints. One should expect the trajectories of the controlled PDMP starting from a region around the stable point to converge to it.

Definition 36. Given an initial condition $x \in O^c$ (or even $x \in \mathbb{R}^N$), the set $O$ is reachable from $x$ if there exists some admissible control process $u$ such that the set $\{X_t^{x,u} \in O,\ t \in [0, \infty)\}$ has positive probability.

One can reduce the dimension by considering a suitable family of test functions $(\varphi_m)_{0 \le m \le M}$ and introducing a given accuracy for the test.

Definition 38. The domain of stabilizability for the controlled piecewise deterministic Markov system is the set.

This measure has no reason to belong to the set of occupation measures (i.e. to be associated with a control process).

As for the reachability criterion, one reduces the dimension by considering a suitable finite family of test functions $(\varphi_m)_{0 \le m \le M}$ and introducing a given accuracy for the test.

Applications

Cook's model for haploinsufficiency

Cook's model with several regimes

This means that, in the so-called "unstable" case (positive activation/deactivation), for all initial protein concentrations, the threshold level can be reached with positive probability. This is the simplest way to show that all concentrations of interest belong to the asymptotic null-controllability domain. In the general case, the best approach is to compute numerically the value function $V$, solution of (52).
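The statement that a threshold is reached with positive probability can be probed numerically. The sketch below is a crude finite-horizon Monte Carlo estimate on a hypothetical on/off model (constant rates `k_a`, `k_d`, flow `dx/dt = production * d - degradation * x`); it is not the linearized criterion of the text and the parameter values are assumptions. Reaching the threshold before `t_end` implies reaching it on $[0, \infty)$, so a positive estimate only gives an indication from below.

```python
import math
import random


def reaches_threshold(x0, d0, k_a, k_d, production, degradation,
                      threshold, t_end, rng):
    """True if X reaches `threshold` from below before time t_end.

    Between jumps the flow is monotone, so checking the value at the end
    of each flow segment is enough to detect an upward crossing.
    """
    t, x, d = 0.0, x0, d0
    if x >= threshold:
        return True
    while True:
        rate = k_a if d == 0 else k_d
        wait = rng.expovariate(rate)
        remaining = t_end - t
        dt = min(wait, remaining)
        target = production * d / degradation
        x = target + (x - target) * math.exp(-degradation * dt)
        if x >= threshold:
            return True
        if wait >= remaining:      # horizon reached without crossing
            return False
        t += wait
        d = 1 - d


def estimate_reach_probability(threshold, n_runs=2000, seed=0, x0=0.0, d0=0,
                               k_a=1.0, k_d=1.0, production=1.0,
                               degradation=1.0, t_end=10.0):
    """Fraction of simulated paths that reach the threshold before t_end."""
    rng = random.Random(seed)
    hits = sum(
        reaches_threshold(x0, d0, k_a, k_d, production, degradation,
                          threshold, t_end, rng)
        for _ in range(n_runs)
    )
    return hits / n_runs


print(estimate_reach_probability(0.5))
```

In this hypothetical model the concentration never exceeds `production / degradation`, so any threshold above that level gives an estimate of exactly zero.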

Hasty's model for bacteriophage

For Brownian diffusions with a finite-dimensional state space, exact controllability has been characterized in [Pen94] (see also [LP02], [LP10]). For exact (terminal) controllability, one needs a full-rank operator acting on the control in the noise term (cf. [Pen94]). Approximate controllability in the Brownian setting has been studied in [BQT06] (no control on the noise) and [G15, G16] (general setting).

The authors generalize the Kalman condition to an equivalent criterion for approximate controllability using duality techniques. In the finite-dimensional framework ([BQT06], [G15, G16]), the stochastic observability criterion relies in an essential way on viability properties for the dual equation associated with the control system. To understand what happens for systems with infinite-dimensional components, we followed two possible leads.

The first approach consists of investigating stochastic control systems with a finite-dimensional state space but an infinite-dimensional component. In the first case, as for Brownian diffusions, approximate controllability and approximate null-controllability are proven to be equivalent. For the second class of systems, we prove that approximate controllability and approximate null-controllability are not equivalent in full generality.

The second approach consists of studying viability properties for stochastic semilinear control systems on a real, separable Hilbert space.
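For reference, the classical (deterministic) Kalman rank condition mentioned above can be checked as in the following sketch. It covers only the textbook criterion for a linear system $x' = Ax + Bu$, namely that the matrix $[B, AB, \dots, A^{N-1}B]$ has rank $N$; it is not the generalized stochastic criterion of the cited articles. Exact rational arithmetic is used to avoid rank errors due to rounding, and the helper names are illustrative.

```python
from fractions import Fraction


def mat_mul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def rank(matrix):
    """Rank by Gaussian elimination over the rationals."""
    rows = [[Fraction(v) for v in row] for row in matrix]
    n_cols = len(rows[0]) if rows else 0
    r = 0
    for col in range(n_cols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r


def kalman_matrix(a, b):
    """Horizontal concatenation [B, AB, ..., A^(n-1) B]."""
    n = len(a)
    blocks, current = [], b
    for _ in range(n):
        blocks.append(current)
        current = mat_mul(a, current)
    return [sum((blk[i] for blk in blocks), []) for i in range(n)]


def satisfies_kalman(a, b):
    """True if the classical Kalman rank condition holds for (A, B)."""
    return rank(kalman_matrix(a, b)) == len(a)


# Double integrator: controllable.
print(satisfies_kalman([[0, 1], [0, 0]], [[0], [1]]))
# Identity dynamics with a single input direction: not controllable.
print(satisfies_kalman([[1, 0], [0, 1]], [[1], [1]]))
```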

Controllability of jump-diffusions with linear coefficients

As usual, we work with the associated compensated random measure. We are dealing with a linear stochastic differential equation. In the case of classical stochastic differential systems driven by Brownian motion, the necessary and sufficient condition for exact controllability is that Rank(D1) = N. In the present setting, this condition is no longer sufficient. The proof technique relies mainly on duality arguments and on mean-field backward stochastic differential equations (introduced in [BDLP09], [BLP09]).

Within the framework of differential games, our research aims to replace Varaiya-Elliott-Kalton type strategies with regular selections taking values in these constraint sets, in order to deal with general deterministic/stochastic dynamics.

[BDLP09] Rainer Buckdahn, Boualem Djehiche, Juan Li, and Shige Peng, Mean-field backward stochastic differential equations: a limit approach, Ann.

[BLP09] Rainer Buckdahn, Juan Li, and Shige Peng, Mean-field backward stochastic differential equations and related partial differential equations, Stochastic Process.

Tessitore, A characterization of approximately controllable linear stochastic differential equations, Stochastic partial differential equations and applications VII, Lect.

[BQT08] Controlled stochastic differential equations under constraints in infinite dimensional spaces, SIAM Journal on Control and Optimization, no.

Plaskacz, Semicontinuous solutions of Hamilton-Jacobi-Bellman equations with state constraints, Differential Inclusions and Optimal Control, vol.

Yong, A linear-quadratic optimal control problem for mean-field stochastic differential equations in infinite horizon, arXiv.

Peng, Infinite horizon backward stochastic differential equation and exponential convergence index assignment of stochastic control systems, Automatica, no.

Quincampoix, Value functions for differential games and control systems with discontinuous terminal cost, SIAM J.

Controllability of linear systems of mean-field type

Viability of Stochastic Semilinear Control Systems via the Quasi-Tangency

Quasi-tangency characterization

The central idea needed to characterize (near) viability is the concept of stochastic quasi-tangency.

Applications

Zidani, A general Hamilton-Jacobi framework for nonlinear state-constrained control problems, ESAIM: Control, Optimisation and Calculus of Variations (2012).

[Alm01] A. Almudevar, A dynamic programming algorithm for the optimal control of piecewise deterministic Markov processes, SIAM J.

Capuzzo Dolcetta, Optimal control and viscosity solutions of Hamilton-Jacobi-Bellman equations, Systems and Control: Foundations and Applications, Birkhäuser, Boston, 1997.

Bettiol, On ergodic problem for Hamilton-Jacobi-Isaacs equations, ESAIM: Control, Optimisation and Calculus of Variations, no.

Ichihara, Limit theorem for controlled backward SDEs and homogenization of Hamilton-Jacobi-Bellman equations, Applied Mathematics and Optimization 51 (2005), no.

Jakobsen, On the rate of convergence of approximation schemes for Hamilton-Jacobi-Bellman equations, ESAIM, Math.

[CD09] The vanishing discount approach for the average continuous control of piecewise deterministic Markov processes, Journal of Applied Probability, no.

[CD12] Continuous control of piecewise deterministic Markov processes with long run average cost, Stochastic processes, finance and control, Adv.

Quincampoix, Linear programming approach to deterministic infinite horizon optimal control problems with discounting, SIAM J.

[IMZ13] Cyril Imbert, Régis Monneau and Hasnaa Zidani, A Hamilton-Jacobi approach to junction problems and application to traffic flows, ESAIM: Control, Optimisation and Calculus of Variations.