VR already interferes with the user's normal vision, but it is possible to add further sensation by shifting the user's viewpoint independently of real-world head movement. Optical see-through HMDs require the angle and distance of the optical combiner from the eye to be adjusted.

![Figure 1. Marketing image for Varjo XR-1 illustrating the difference between video see-through and optical see-through methods [Varjo, 2020]](https://thumb-eu.123doks.com/thumbv2/9pdfco/1890897.267063/9.892.161.733.126.437/figure-marketing-varjo-illustrating-difference-optical-methods-varjo.webp)

XR input techniques

Interfaces better meet user expectations when they recognize the type of action the user is performing [Aigner et al., 2012]. However, such action types are difficult to extract through machine capture and labeling without imposing constraints on the user [Aigner et al., 2012].

![Figure 3. A comprehensive breakdown of selection and manipulation tasks in virtual environments (VEs), with the interaction techniques known at the time organized into categories [Bowman et al., 1999]](https://thumb-eu.123doks.com/thumbv2/9pdfco/1890897.267063/12.892.192.683.222.642/presents-comprehensive-breakdown-selection-manipulation-interaction-techniques-categories.webp)

3 Methods

- Research problem

- Research design

- Grounded theory methodology

- Interview process

- Data analysis

In grounded theory, the qualitative material is analyzed at every stage of the research process [Corbin and Strauss, 1990]. This chapter describes in detail how the interview study was carried out.

4 Findings

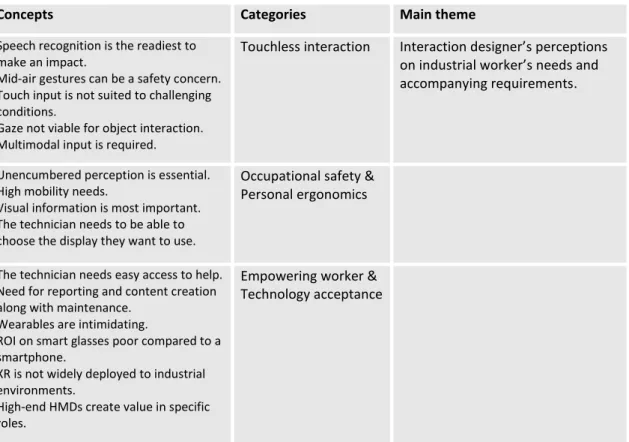

Touchless inputs

Symbolic mid-air gestures can be helpful, but new users may find mid-air gestures difficult to perform in the first place. A few participants emphasized that mid-air gestures must be as reliable as a touchscreen to be useful; otherwise they should not be implemented at all. It was common knowledge among participants that low or close-to-body mid-air gestures do not work with HoloLens, because the hands must be extended well in front of the body to be visible to the optical sensor array on the user's forehead and must be kept within its tracking zone.
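To illustrate why low, close-to-body gestures fail, the check below models the sensor's tracking zone as a simple cone projecting forward from the forehead. This is a minimal sketch under stated assumptions: the cone shape, the half-angle and the range limits are hypothetical values chosen for illustration, not HoloLens specifications, and the function name `in_tracking_zone` is likewise hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in meters; y up, z forward

# Hypothetical tracking volume: a cone projecting forward from the
# head-mounted sensor. Illustrative values only, not device specifications.
HALF_ANGLE_DEG = 35.0
MIN_RANGE_M = 0.25
MAX_RANGE_M = 0.8


def in_tracking_zone(hand: Vec3,
                     sensor: Vec3 = (0.0, 0.0, 0.0),
                     forward: Vec3 = (0.0, 0.0, 1.0)) -> bool:
    """Return True if the hand lies inside the assumed sensor cone."""
    # Vector from the sensor to the hand.
    v = tuple(h - s for h, s in zip(hand, sensor))
    dist = math.sqrt(sum(c * c for c in v))
    # Too close to the sensor or too far away: not tracked.
    if not (MIN_RANGE_M <= dist <= MAX_RANGE_M):
        return False
    # Angle between the sensor's forward direction and the hand direction.
    f_norm = math.sqrt(sum(c * c for c in forward))
    cos_angle = sum(a * b for a, b in zip(v, forward)) / (dist * f_norm)
    return cos_angle >= math.cos(math.radians(HALF_ANGLE_DEG))
```

With these assumed values, a hand held out in front of the face is accepted, while a hand lowered near the torso falls outside the cone, mirroring the participants' observation.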

Two interviewees required that, for field work, mid-air gestures should work while wearing work gloves. One participant knew that at least thin white work gloves were compatible with HoloLens mid-air gestures. Because mid-air gestures lack haptic feedback, a combination of visual and audio feedback is important.

Small symbolic gestures tracked inside-out by a hand-held device can provide safer gesture recognition in the field, as the gestures are performed in the air closer to the body.

Occupational safety and ergonomics

In field work conditions, participants noted that distracting AR HMD imagery can pose a safety risk by impairing awareness in an unfamiliar environment, although all output and input technologies have the potential to be distracting. Technicians have high mobility needs, and HoloLens 1 and 2 were not considered practical for service technicians due to their physical size and weight. Instead, HoloLens devices were considered useful for use cases that require less mobility.

Many participants reported that mounting smart glasses on a helmet or fitting them to a bump cap was a slow and challenging task for a maintenance technician. Additionally, most wanted the ideal field work HMD to be light enough for comfortable all-day use, with all-day battery life and secure cable management. Recharging the device during the lunch break and swapping devices to recharge were cited as cumbersome workarounds.

Nevertheless, monocular passthrough displays were deemed better for maintenance, due to the FOV being limited by the device frame itself and to suboptimal display brightness in well-lit environments, which results in poor readability.

Empowering workers and technology acceptance

Digitization brings efficiency to repetitive tasks, for example by delegating to smart glasses the less critical work of informing the technician of the next parameters to be passed to the service equipment. The technicians' knowledge and experience must still be at a high level, and not only in performing the work itself. Participants saw the critical factors in users' acceptance of the technology as lying outside the medium itself.

In fact, some technicians do not want to be seen using smartphones at the customer's site because the customer might think badly of it. For the glasses form factor to be worthwhile, the devices have to be rugged and cheap enough to be economical. Until the smartphone is recognized as an unsuitable tool for a user's job, users should not be expected to embrace smart glasses.

All participants indicated that smart glasses technology is evolving very quickly, and they hoped to use smart glasses in the future.

5 Discussion

Interpretation of the interview findings

However, most of these issues appear to have actually been related to the usability of the hardware elements of current-generation user interfaces. This thesis did not collect perceptions of the usability of graphical user interfaces, and the most prominent usability issues among the interaction findings concerned hardware. Notably, XR technologies exist parallel to the real world, and the design of interaction techniques should take this into account.

This mode of interaction, in which a secondary task runs alongside the main task, can be called peripheral interaction, or reflexive interaction if the interface is closer to the third possibility, implicit interaction, in which the user is not expected to be aware of the system [Matthies et al., 2019]. Designing to support and empower the end user means assisting the user only where they need assistance. For head-mounted displays, text placed in the center of vision performs better in terms of speed, comfort and learnability than text located at the bottom right [Lin et al., 2021].

In addition, any wearable technology introduced into industrial settings must be compatible with the personal protective equipment and clothing that technicians must wear when the environment or safety procedures require it, as well as with the mobility requirements of the work being performed.

Literature review of suitable input methodologies

For example, gaze can be used to indicate and select incorrect words by dwelling on a word for 0.8 seconds [Sengupta et al., 2020]. In virtual object manipulation, gaze can be used to select objects, combined with mid-air gestures to perform 6-DoF object manipulation [Slambekova et al., 2012]. Static mid-air gestures captured optically, in particular, require long dwell times for recognition [Istance et al., 2008].
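Dwell-based selection of the kind reported by Sengupta et al. [2020] can be sketched as a small state machine: a target is selected once the gaze has rested on it continuously for the dwell threshold. The sketch assumes that an eye tracker already delivers timestamped samples labeled with the word under the gaze point; the `GazeSample` and `DwellSelector` names are hypothetical, and only the 0.8-second threshold comes from the cited work.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional

DWELL_THRESHOLD_S = 0.8  # dwell time reported by Sengupta et al. [2020]


@dataclass
class GazeSample:
    t: float               # timestamp in seconds
    target: Optional[str]  # id of the word under the gaze point, or None


class DwellSelector:
    """Selects a target once gaze has stayed on it for the dwell threshold."""

    def __init__(self, threshold_s: float = DWELL_THRESHOLD_S) -> None:
        self.threshold_s = threshold_s
        self._target: Optional[str] = None
        self._since = 0.0
        self._fired = False

    def update(self, sample: GazeSample) -> Optional[str]:
        if sample.target != self._target:
            # Gaze moved to a new target (or off all targets): restart timer.
            self._target = sample.target
            self._since = sample.t
            self._fired = False
            return None
        if (self._target is not None and not self._fired
                and sample.t - self._since >= self.threshold_s):
            self._fired = True  # select only once per continuous dwell
            return self._target
        return None


def run(samples: Iterable[GazeSample]) -> List[str]:
    """Feed samples through a fresh selector and collect the selections."""
    selector = DwellSelector()
    return [hit for s in samples if (hit := selector.update(s)) is not None]
```

Resetting the timer whenever the gaze leaves the target, and firing only once per continuous dwell, keeps a long fixation from triggering repeated selections.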

In the future, data nuggets captured in real time may replace the field worker's safety gloves [Brice et al., 2020]. Eyes-free directional swipe gestures can be performed on any surface using a vision-based motion sensor on a wristband [Yeo et al., 2020]. Eyes-free interaction is facilitated by tactile feedback from touching the controls [Yi et al., 2012].

They return tactile feedback when activated and are useful for on-body interaction with wearables [Goudswaard et al., 2020].
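The eyes-free directional swipes described by Yeo et al. [2020] require some way to turn raw sensor motion into a direction. One simple approach, assumed here rather than taken from the cited work, is to sum the relative displacements reported during a stroke, reject strokes shorter than a minimum distance, and pick the dominant axis. The threshold value and the function name `classify_swipe` are hypothetical.

```python
import math
from typing import Iterable, Optional, Tuple

# Hypothetical minimum stroke length, in sensor displacement units.
MIN_SWIPE_DISTANCE = 30.0


def classify_swipe(deltas: Iterable[Tuple[float, float]],
                   min_distance: float = MIN_SWIPE_DISTANCE) -> Optional[str]:
    """Classify a stroke from (dx, dy) motion reports; positive y is up."""
    total_x = total_y = 0.0
    for dx, dy in deltas:  # accumulate the relative motion of the stroke
        total_x += dx
        total_y += dy
    if math.hypot(total_x, total_y) < min_distance:
        return None  # too short: treat as noise or a tap, not a swipe
    # The dominant axis decides the direction; ties count as horizontal.
    if abs(total_x) >= abs(total_y):
        return "right" if total_x > 0 else "left"
    return "up" if total_y > 0 else "down"
```

Choosing the dominant axis keeps slightly diagonal strokes usable, which matters when the user cannot look at the surface being swiped.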

Implications

To make the framework more useful for designing wearables for industrial field workers, the model could be made more accurate than that of Khalaf et al. In addition, positioning technology and environments spatially mapped through the camera could allow maintenance logs to be placed on-site. Mid-air gesture control and gaze input were found to be among the most discussed emerging interaction techniques for industrial applications.

With the described combination of technologies, world-oriented pointing could become relevant if the user needs to point in a direction outside their field of view. An alternative to touch interfaces would be for the user to interact in the air near a surface, using a gesture sensor that recognizes dynamic hand gestures and finer-grained finger rubbing. It remains to be judged how, when, or whether implicit interaction could generate feedback during field operations and present it to the worker.

These interaction techniques must be designed so that they do not interfere with the primary task the industrial worker is engaged in.

Limitations and further work

Other options are to use slightly larger hand movements, such as using the hand as a joystick-like controller, or limited, rapid kinetic movements of the arm. In retrospect, one aspect of the interviews that could be changed is the use of video conference meetings. Due to the COVID-19 pandemic, the research could not be supplemented with observation of workplaces or practices.

This was partly due to the time between research phases: tacit knowledge that had not been noted down had to be rediscovered later, which hindered the completion of the thesis, as the research felt incomplete deep into the process. Further research can provide a more nuanced understanding of the industrial use of new XR interaction technologies, especially from the industrial field workers' perspective. In addition, more in-depth studies comparing binocular and monocular devices on the same industrial tasks may yield more information on their respective readability and on the future direction of display device use.

One technology enabling maximum mobility for multimodal fusion could be a compute stick and battery carried in a handbag-like unit.

6 Summary

Interfaces should respect the user's priorities by staying out of the way except when necessary, and should efficiently help users, whose priority is to move from the current job to the next and go home at the end of the day, without compromising on safety, the number one priority of any industrial company. It is the researcher's sincere hope that this research will be a catalyst for deeper investigation into appropriate interaction techniques for industrial XR and, along with traditional interaction heuristics, will help ensure a more consistent quality of XR applications within the industry. This means finding where the tool fits, the right job for the tool, rather than looking at use cases and targeting where it "kind of works".

XR is the future of computing: not necessarily of all computing, but of a specific type of computing, and it will make all of our lives much better.

In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, (pp.

In Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology, (pp. 553–563).

In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, (pp.

Retrieved October 10, 2019, from https://docs.microsoft.com/en-us/windows/mixed-reality/design/interaction-fundamentals.

In Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology, (pp. 133–145).

In 2019 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation, (pp. 133–139).

Earable TEMPO: A new, hands-free input device that uses the movement of the tongue as measured by a wearable ear sensor.

![Figure 2. Different ways of image generation for AR displays [Bimber and Raskar, 2006]](https://thumb-eu.123doks.com/thumbv2/9pdfco/1890897.267063/9.892.232.667.726.1072/figure-different-ways-image-generation-displays-bimber-raskar.webp)

![Figure 4. Classification of interaction approaches for smart glasses [Lee and Hui, 2018]](https://thumb-eu.123doks.com/thumbv2/9pdfco/1890897.267063/13.892.276.646.527.972/figure-classification-interaction-approaches-smart-glasses-lee-hui.webp)

![Figure 5. Gesture Classification Scheme [Aigner et al., 2012]](https://thumb-eu.123doks.com/thumbv2/9pdfco/1890897.267063/16.892.424.772.187.606/figure-gesture-classification-scheme-aigner-al.webp)