We address the question of the expressiveness of the computational model by showing that the 'conversational nodes' can be Turing machines. A Java prototype is used to solve the 'Sudoku filling problem' and to demonstrate how the choices of chapter 3 meet the requirements listed in chapter 2. Chapter 5 gives some arguments in favor of our chosen trade-off between the controllability of the explanatory paradigm and the expressivity of the imperative techniques, and opens a general discussion of the AUSTIN machine around two questions: i) can we give a logical semantics to the conversational arithmetic? ii) our system is thought for improving the cooperation between.

1 Introduction

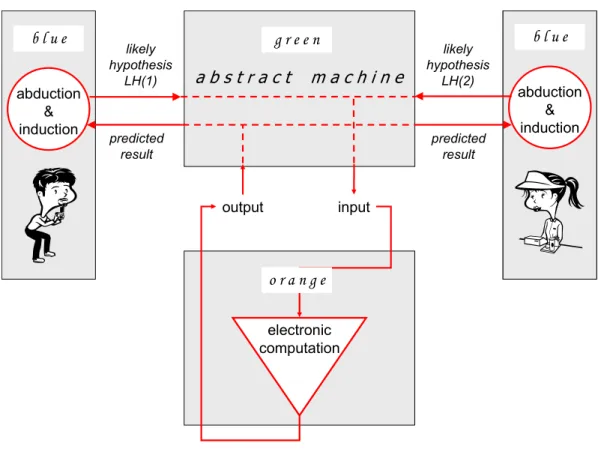

Initial motivation: the ‘PULL’

The situation is that of “collaborative theory construction”, in the sense of building a unified set of expressions and inference rules so that appropriate subsets can be interpreted by different experts as a model for their respective observations and beliefs. The process consists of two complementary 'movements': one of 'theory discovery', the other of 'theory verification'. The global challenge is a clever division of these two movements between humans and computers; a detailed argument in favor of human-computer cooperation can be read, for example, in [Langley, 2000].

Problem definition

The current work deepens and completes the analyses underpinning the framework, clarifies the computational support requirements of the "collaborative theory building" process, and details the specifications of the "AUSTIN conversational abstract machine" aimed at sustaining it. Specifying the communication events in detail and finding an efficient control scheme are far from easy.

Structure of the thesis

A first example is given in [3.1] to introduce the main concepts; it consists of a very simple conversation aimed at collectively answering a single question. The third and main example is presented in [3.8] and consists of the complete solution of the 'sudoku filling problem' described earlier; this example illustrates both the computation and a generative grammar for sentences proposed in [3.7].

2 Collaborative theory construction

Thoughts about minds, languages and computation

- Blue, the “world of mind”

- A quick review of neuroanatomy

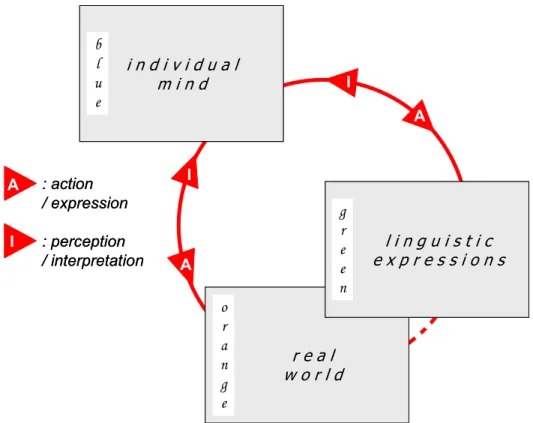

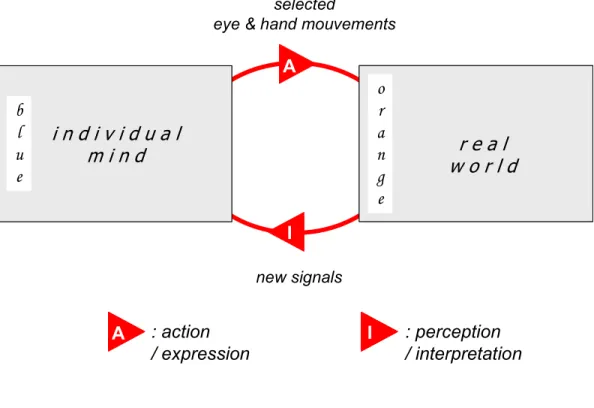

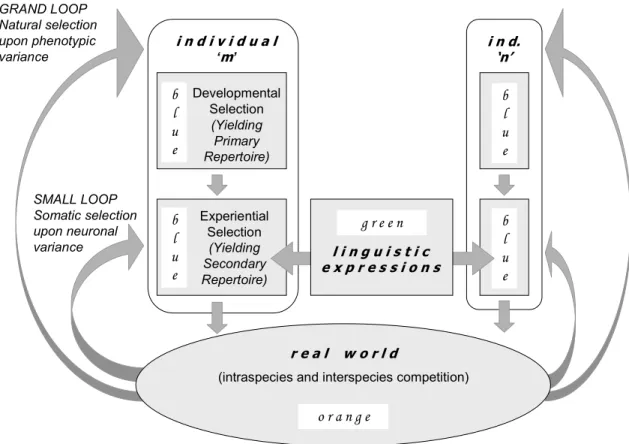

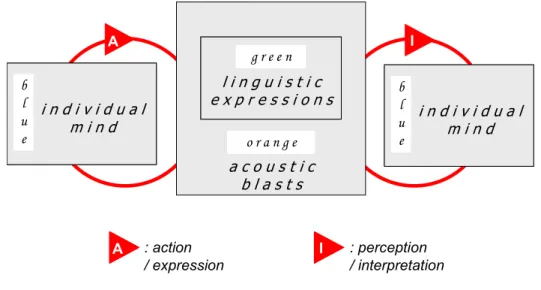

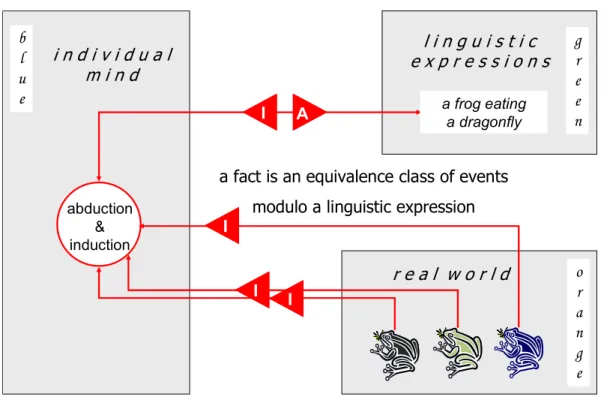

Our coarse-grained analysis will cover a wide spectrum, ranging from the natural languages of everyday life to the formal languages of mathematics and computer science; therefore "linguistic expression" should be understood as "expression in language", be it natural or artificial.

- The ORANGE 'real world', where events or 'changes of state' obey the laws of causality, where theories can be tested against facts, and where the formulas and syntactic rules of the green world can be electronically transformed and calculated.

The positive news for the reader of "The Society of Mind" is that neurobiology's description of the mind will not conflict with Minsky's ideas about human intelligence as the result of the interaction of simple mindless parts.

General Characteristics: The adult human brain weighs about 3 pounds and contains about 100 billion nerve cells, or neurons.

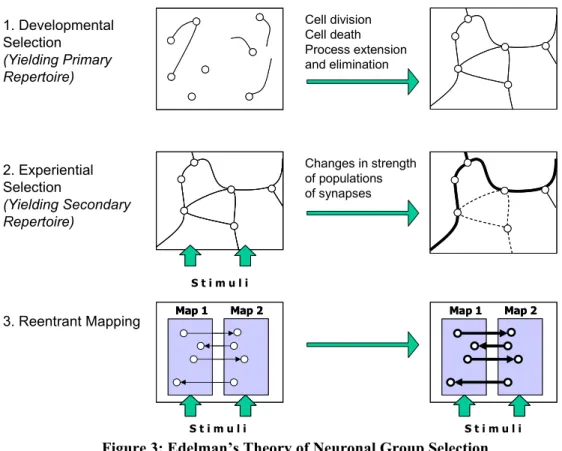

- The brain is a jungle … with reentry!

- The theory of neuronal group selection

- A model for associative recall

- Human memory is not a store of “fixed or coded attributes”

- Green, the “world of linguistic expressions”

- About conversations involving non-intentional artefacts

- Logical interaction between artefacts

- A framework for collaborative theory building

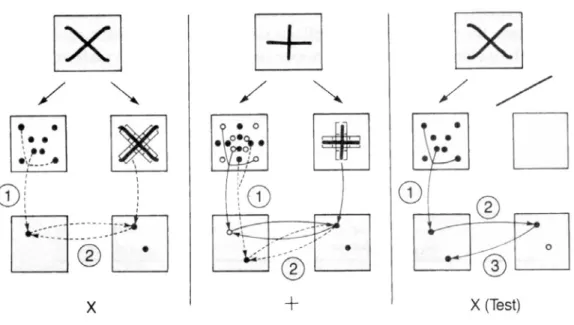

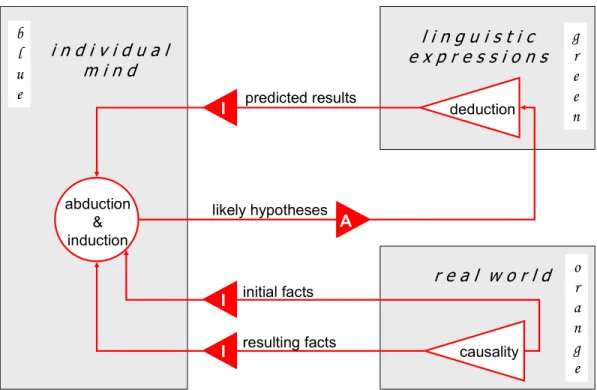

- Theory building: from Peirce to Popper

- Theory discovery: the role of selectionist thinking

- Intertwining selectionist thinking and logical deduction

- Summarizing the requirements

- Filling a Sudoku

- Conceptualizing around ‘Sudoku’

- A first version of the theory

- Refining the theory

- Moderating principles for a conversational abstract machine

- Refining the problem definition

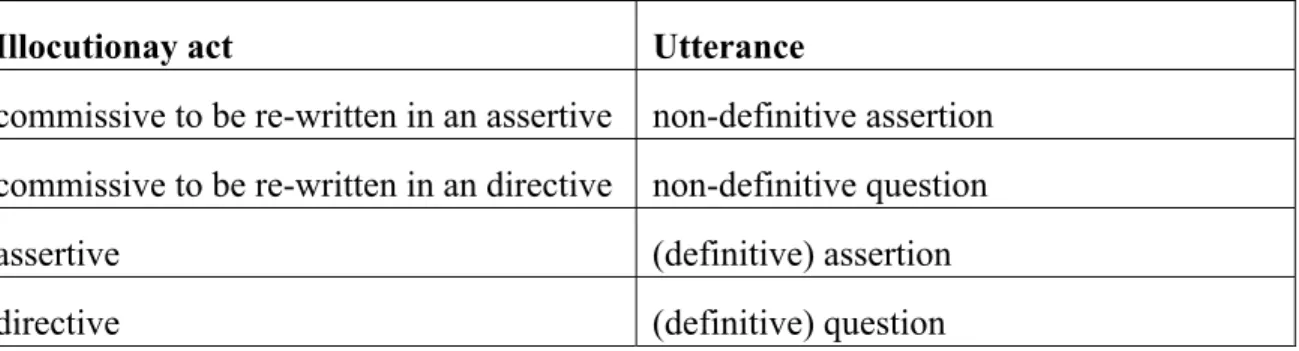

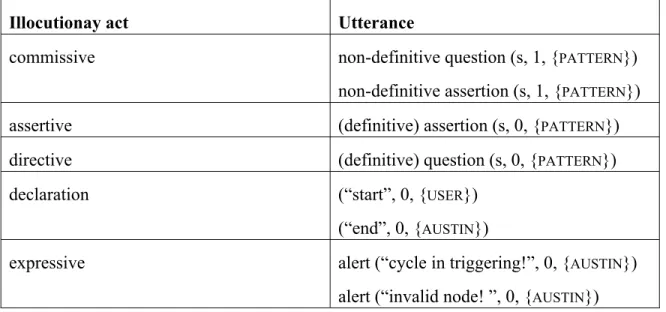

The point of assertive speech acts is to commit the speaker to the truth of the claim. We propose to call 'logical consequence of the interaction within the formal system' the result of the global rewriting.

3 AUSTIN: a conversational abstract machine

Introducing the concepts

- Formalizing the moderating principles

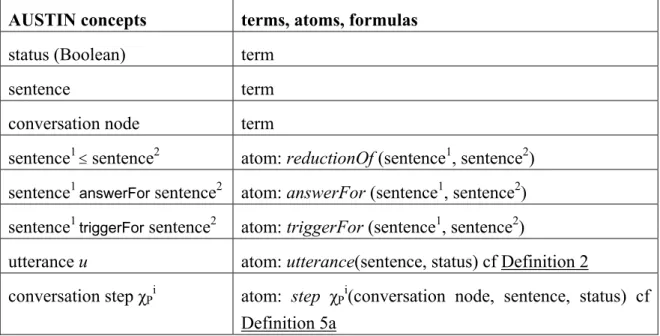

- Denotations

- A quick overview of the calculus

- A first colourful example

"Substitution" should be understood from the point of view of the model called: the original sentences remain present as long as the "model ready to be called" is present; this will be managed through the concept of "statements". Noting immediately that 'c' is emitted from both (χB,∗) and (χC,∗), the reader may, as a first approximation, consider that two instances of 'c' are present at the beginning of the conversation. Our calculation relies on the fact that each participant is called only when its initiator (either a question, e.g. a // what is the number of instances of x? //, or an assertion, e.g. a' // .. here is a set of instances of x //) is prompted by one of the sentences already elicited in the conversation.

Sentences and utterances

- Sentences

- Utterances

- Changes of state in the set of utterances

An utterance may or may not be removed from the conversation: only in the latter case will it be considered a valuable trigger or response for other utterances. A definitive utterance remains in the conversation until its end, while a non-definitive utterance will eventually be removed. The non-definitive propositions and questions "disappear" as soon as their list of emitters becomes empty: according to Definition 2, they are then no longer considered utterances.
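The lifecycle described above can be sketched in Java, the language of the prototype. This is only an illustration under stated assumptions: the names `Utterance`, `Conversation` and `retract` are hypothetical and not taken from the AUSTIN prototype, emitters are reduced to plain strings, and removal is modelled as "the set of emitters of a non-definitive utterance has become empty".

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch; names are illustrative and not taken from the AUSTIN prototype.
final class UtteranceLifecycle {

    static final class Utterance {
        final String sentence;
        final boolean definitive;
        final Set<String> emitters = new HashSet<>();

        Utterance(String sentence, boolean definitive, String... initialEmitters) {
            this.sentence = sentence;
            this.definitive = definitive;
            for (String e : initialEmitters) {
                emitters.add(e);
            }
        }

        // Assumption: a definitive utterance always stays; a non-definitive one
        // stays only while at least one emitter still supports it.
        boolean isPresent() {
            return definitive || !emitters.isEmpty();
        }
    }

    static final class Conversation {
        private final List<Utterance> utterances = new ArrayList<>();

        void add(Utterance u) {
            utterances.add(u);
        }

        // Withdraws one emitter from the non-definitive utterances,
        // then drops every utterance that is no longer present.
        void retract(String emitter) {
            for (Utterance u : utterances) {
                if (!u.definitive) {
                    u.emitters.remove(emitter);
                }
            }
            utterances.removeIf(u -> !u.isPresent());
        }

        List<String> sentences() {
            List<String> out = new ArrayList<>();
            for (Utterance u : utterances) {
                out.add(u.sentence);
            }
            return out;
        }
    }

    public static void main(String[] args) {
        Conversation c = new Conversation();
        c.add(new Utterance("c", false, "chiB", "chiC")); // emitted by two patterns
        c.add(new Utterance("d", true, "chiB"));          // definitive
        c.retract("chiB");
        System.out.println(c.sentences()); // "c" is still supported by chiC
        c.retract("chiC");
        System.out.println(c.sentences()); // only the definitive "d" remains
    }
}
```

Keeping the emitters as a set mirrors the remark that a sentence emitted from two places can be thought of as present twice: it only disappears once both supports are gone.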

Conversation nodes and conversational patterns

- Conversation nodes are “reducers”

- Conversational patterns are semi-lattices of conversation nodes

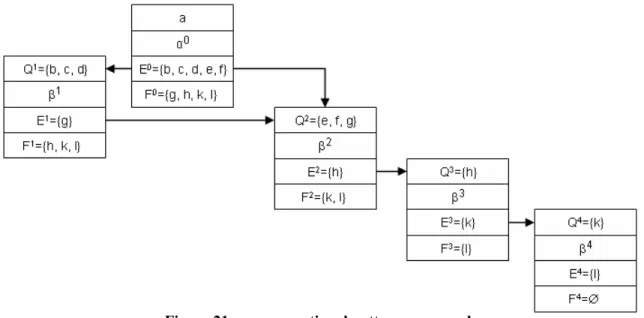

The first node is the startNode α0, which broadcasts questions about the description of the journey: where from (b). The starter “s” of the startNode is also referred to as the starter of the entire conversation pattern. In other words, we need “|” to repeat within a given conversation pattern in successive waves corresponding to successive activations of the nodes: ((χ3|χ1)|(χ2|χ1)).

- Invocation, activation and revocation of steps

- Another example: buying a train ticket

While the second condition is external (all steps of all conversational patterns can potentially provide answers), the first condition is internal (only antecedents of a step within a conversational pattern are allowed to reduce preliminary questions); when all preliminary questions have reached their definite expression, we will say that the postStep is READY TO BE ACTIVATED. “WHITE” will mean: [status = “1” and step NOT READY TO BE ACTIVATED because preliminary questions are not all reduced]. “LIGHT GRAY” will mean: [status = “1” and step READY TO BE ACTIVATED because preliminary questions are all reduced].
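The two colour codes can be read as a small decision rule. The Java sketch below is illustrative only: the class `Step`, the enum `Shade` and the sample questions are hypothetical, and any status other than “1” is lumped into `OTHER` because the text above does not describe it.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch; only the WHITE and LIGHT GRAY cases come from the text.
final class StepReadiness {

    enum Shade { WHITE, LIGHT_GRAY, OTHER }

    static final class Step {
        final String status;
        // preliminary question -> true once it has reached its definite expression
        final Map<String, Boolean> preliminaryQuestions = new LinkedHashMap<>();

        Step(String status) {
            this.status = status;
        }

        // READY TO BE ACTIVATED: no preliminary question is left unreduced.
        boolean readyToBeActivated() {
            return !preliminaryQuestions.containsValue(false);
        }

        Shade shade() {
            if (!"1".equals(status)) {
                return Shade.OTHER; // statuses other than "1" are not covered here
            }
            return readyToBeActivated() ? Shade.LIGHT_GRAY : Shade.WHITE;
        }
    }

    public static void main(String[] args) {
        Step postStep = new Step("1");
        postStep.preliminaryQuestions.put("where from?", true);
        postStep.preliminaryQuestions.put("where to?", false); // hypothetical question
        System.out.println(postStep.shade()); // one question is still unreduced
        postStep.preliminaryQuestions.put("where to?", true);
        System.out.println(postStep.shade()); // all questions are reduced
    }
}
```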

Computing the conversation

- Testing the current state of the conversation

- Ruling the transitions

- Transitions and further tests in a loop

- Will this conversation end in finite time?

- Are there several possible final states?

- Tackling the question of complexity

Let us take the following denotations

- Turing Machines seen as particular conversational patterns

Let TM be a Turing Machine,

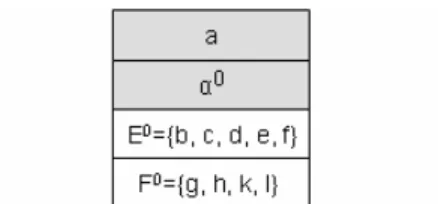

Let Χ be a conversation frame, let χP = {χPi}i≥0 ∈ Χ, let ω0 be an initial state of a conversation, and let ω be the current state of the conversation. Χ a pattern consisting of nodes, let ω1 = be the state from which the call arises from invoke(χP0, a). This is obvious in the case of “invoke” or “revoke”; let us prove it in the case of.

Technical glossary

By extension, a postStep is a follower of another conversation step if the associated nodes meet the condition.

invocation: i) used in a dynamic sense (invocation of a startStep of a conversation pattern): activation of the embedded reducer when activated by an appropriate sentence (which plays the role of a context); ii) used in a static sense (invocation of a conversational pattern): synonymous with emitter, which is a pair (conversational pattern, context of activation) [Definition 5b].

The reduction of "internal input 1" will produce definitive utterances, while the reduction of "internal input 2" will produce non-definitive utterances.

By extension, a conversation step is a follower of a postStep if the associated nodes satisfy the condition.

A generative grammar for sentences inspired by conceptual graphs

- From conceptual graphs to sentences

- Hints about the logical semantics

- Back to the decision problems

To check that the calculation has given all answers to s4, the "moderator" of the conversation must check whether further answers can come from other invoked patterns: as long as s1 is present, it will not answer s4. Let us now assume that s6 (or s7) is the starting point for a conversation pattern; we intuitively understand that s2 (or s3 or s4) can play the role of invocation context. This technical part has been kept brief and the demonstrations are not given in detail; the intention is only to make the reader understand what kind of comparisons need to be made during the computation to support the logical semantics outlined in [3.9.2].

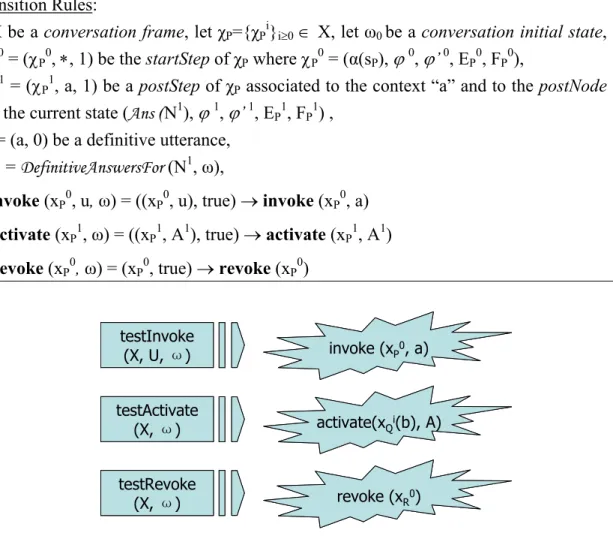

- The ‘Sudoku filling problem’

- A bunch of concepts and roles

- Assertives describing the initial grid

- Starters and initial commissives

- Inside the nodes of the conversational patterns

- The ‘Sudoku filling’ conversation

- CA06 will be rewritten into assertions that accept values;
- CA07 will be rewritten into assertions that reject values;
- CD08 will be rewritten into a directive that requests the accepted values for the current pair (row, column) in all countries;
- CD09 will be rewritten into a directive that requests the rejected values for the current pair (row, column) in all countries.

- CA06 will be rewritten into assertions that accept values;
- CA07 will be rewritten into assertions that reject values;
- CD08 will be rewritten into directives that request the accepted values for the (row, column) pairs belonging to the current array;
- CD09 will be rewritten into a directive that requests the rejected values for the (row, column) pairs belonging to the current array.
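To make the notions of accepted and rejected values concrete, here is a conventional, non-conversational Java sketch. It does not reproduce the rewriting of CA06 to CD09; it only computes directly, for one empty cell, the values that the usual Sudoku rules reject (already present in the same row, column or 3x3 box) and those that remain acceptable. The class and method names are hypothetical, and 0 is assumed to mark an empty cell.

```java
import java.util.Set;
import java.util.TreeSet;

// Hypothetical helper; a direct computation, not the AUSTIN conversational rewriting.
final class SudokuCandidates {

    // Values already used in the row, column or 3x3 box of (row, col).
    // Assumption: 0 marks an empty cell and (row, col) itself is empty.
    static Set<Integer> rejected(int[][] grid, int row, int col) {
        Set<Integer> used = new TreeSet<>();
        for (int i = 0; i < 9; i++) {
            if (grid[row][i] != 0) {
                used.add(grid[row][i]);
            }
            if (grid[i][col] != 0) {
                used.add(grid[i][col]);
            }
        }
        int r0 = (row / 3) * 3;
        int c0 = (col / 3) * 3;
        for (int r = r0; r < r0 + 3; r++) {
            for (int c = c0; c < c0 + 3; c++) {
                if (grid[r][c] != 0) {
                    used.add(grid[r][c]);
                }
            }
        }
        return used;
    }

    // Values from 1 to 9 that are not rejected for (row, col).
    static Set<Integer> accepted(int[][] grid, int row, int col) {
        Set<Integer> ok = new TreeSet<>();
        for (int v = 1; v <= 9; v++) {
            ok.add(v);
        }
        ok.removeAll(rejected(grid, row, col));
        return ok;
    }

    public static void main(String[] args) {
        int[][] grid = new int[9][9];
        grid[0][1] = 5; // same row and same box as (0, 0)
        grid[4][0] = 3; // same column as (0, 0)
        grid[1][1] = 7; // same box as (0, 0)
        System.out.println("rejected at (0,0): " + rejected(grid, 0, 0));
        System.out.println("accepted at (0,0): " + accepted(grid, 0, 0));
    }
}
```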

![Figure 33 : Sudoku n° 53 [Le Monde]](https://thumb-eu.123doks.com/thumbv2/1bibliocom/462519.68548/148.918.174.780.210.619/figure-33-sudoku-53-monde.webp)

4 Compositional programming in AUSTIN

- Main concepts of the conversational calculus

- AUSTIN programs: notions of alert, result and residue

- Acceptability of programs

- Residue and result of an acceptable program

Let (☺, Χ) be an acceptable program

- Checking the acceptability before running the conversation

- Composition of AUSTIN Programs

- Analyzing AUSTIN Programs

- Detection of cycles within conversation frames

- Termination of a conversation and analysis of residues

- Compositionality of AUSTIN programs illustrated in the

- Going into the nodes of the Launcher

- Experimenting compositionality

A conversation defines a conversation frame, denoted by 'Χ', consisting of a set of conversation patterns, and an initial set U(☺) of statements made by the 'user' as input to be computed (e.g. the initial state of a Sudoku grid). The residue of (☺, Χ), equal to (U1(ωf), X1(ωf)), is the part of the final state ωf consisting of non-final statements and non-activated steps. The result of (☺, Χ), equal to (U0(ωf), X0(ωf)), is the part of the final state consisting of final statements and activated steps.
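The split of a final state into result and residue can be sketched as a simple partition. The Java below is a minimal illustration under assumptions: the classes `Statement`, `Step` and `Part` are hypothetical, and a final state is reduced to two lists carrying one boolean flag each.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of the result/residue partition of a final state.
final class ResultAndResidue {

    static final class Statement {
        final String text;
        final boolean isFinal;

        Statement(String text, boolean isFinal) {
            this.text = text;
            this.isFinal = isFinal;
        }
    }

    static final class Step {
        final String name;
        final boolean activated;

        Step(String name, boolean activated) {
            this.name = name;
            this.activated = activated;
        }
    }

    // One half of the final state: a pair (U, X) of statement texts and step names.
    static final class Part {
        final List<String> statements = new ArrayList<>();
        final List<String> steps = new ArrayList<>();
    }

    private static Part select(List<Statement> us, List<Step> xs, boolean settled) {
        Part part = new Part();
        for (Statement u : us) {
            if (u.isFinal == settled) {
                part.statements.add(u.text);
            }
        }
        for (Step x : xs) {
            if (x.activated == settled) {
                part.steps.add(x.name);
            }
        }
        return part;
    }

    // (U0, X0): final statements and activated steps.
    static Part result(List<Statement> us, List<Step> xs) {
        return select(us, xs, true);
    }

    // (U1, X1): non-final statements and non-activated steps.
    static Part residue(List<Statement> us, List<Step> xs) {
        return select(us, xs, false);
    }

    public static void main(String[] args) {
        List<Statement> us = Arrays.asList(
                new Statement("cell (1,1) holds 4", true),
                new Statement("which value for cell (1,2)?", false));
        List<Step> xs = Arrays.asList(new Step("alpha0", true), new Step("alpha1", false));
        Part res = result(us, xs);
        Part rest = residue(us, xs);
        System.out.println("result:  " + res.statements + " " + res.steps);
        System.out.println("residue: " + rest.statements + " " + rest.steps);
    }
}
```

An empty residue is then the simplest sign that every question was answered and every step was used; a non-empty one points at the statements and steps worth inspecting.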

5 The AUSTIN machine put in perspective

Questioning the logical semantics

- Shapiro’s framework for concurrent logic programming languages

- Logical deduction in AUSTIN

When a sequential algorithm is chosen as a computational rule to explore the tree, as in PROLOG, the completeness of the transition system disappears. This results in an abstract machine that does not model input from an external environment, and is therefore not reactive in the full sense of the term. Note that the suspend result of the try function is not used in the 'reduce' and 'fail' transitions.

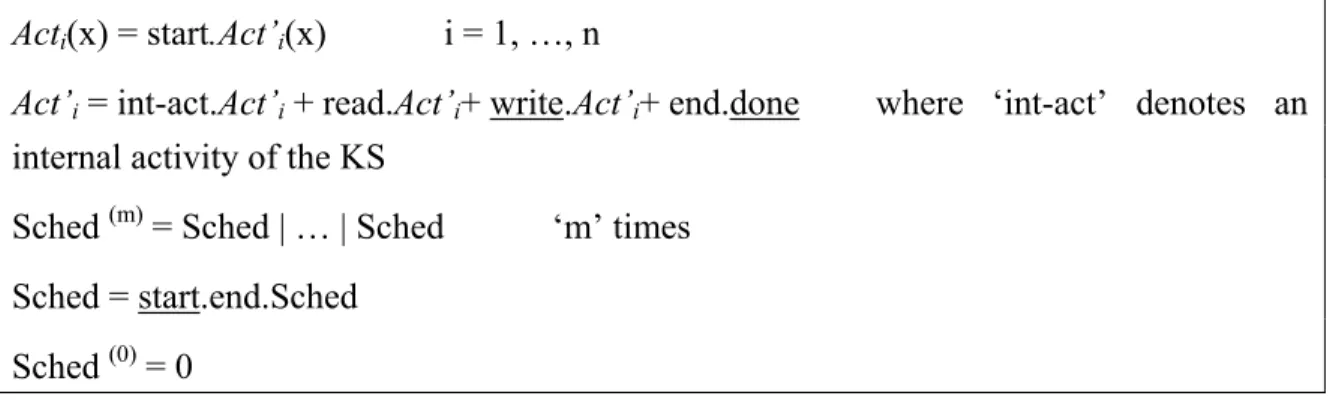

AUSTIN as a Blackboard

- The Blackboard Architecture

- Definition of the main concepts Blackboard

- Formalization of the communications

- About the control: applicability and selection

- AUSTIN: a blackboard with semantically controlled events

A prerequisite is that the part of the state of a CS that determines its applicability can be evaluated using only blackboard state information. Act'i = int-act.Act'i + read.Act'i + write.Act'i + end.done, where 'int-act' indicates an internal activity of the CS. The applicability of a CS is determined by the mapping Matchi between the current state of the blackboard and the state of the CS.
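A control loop built on this notion of applicability might look like the Java sketch below. It is a deliberate simplification under stated assumptions: the blackboard is a map from names to integers, each CS is a hypothetical `Source` whose `match` predicate stands in for Matchi and reads the blackboard only, and selection simply takes the first applicable CS.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Predicate;

// Hypothetical blackboard sketch; names and the selection policy are assumptions.
final class BlackboardSketch {

    static final class Source {
        final String name;
        final Predicate<Map<String, Integer>> match; // stands in for Match_i
        final Consumer<Map<String, Integer>> write;  // the 'write' activity

        Source(String name,
               Predicate<Map<String, Integer>> match,
               Consumer<Map<String, Integer>> write) {
            this.name = name;
            this.match = match;
            this.write = write;
        }
    }

    // Two toy sources: 'double' needs x and produces y, 'inc' needs y and produces z.
    static List<Source> demoSources() {
        return Arrays.asList(
                new Source("double",
                        bb -> bb.containsKey("x") && !bb.containsKey("y"),
                        bb -> bb.put("y", 2 * bb.get("x"))),
                new Source("inc",
                        bb -> bb.containsKey("y") && !bb.containsKey("z"),
                        bb -> bb.put("z", bb.get("y") + 1)));
    }

    // Runs until no source is applicable or maxSteps is reached; returns the trace.
    static List<String> run(List<Source> sources, Map<String, Integer> blackboard, int maxSteps) {
        List<String> trace = new ArrayList<>();
        for (int k = 0; k < maxSteps; k++) {
            Source chosen = null;
            for (Source s : sources) {
                if (s.match.test(blackboard)) { // applicability test on the blackboard only
                    chosen = s;                 // selection: first applicable source
                    break;
                }
            }
            if (chosen == null) {
                break;
            }
            chosen.write.accept(blackboard);
            trace.add(chosen.name);
        }
        return trace;
    }

    public static void main(String[] args) {
        Map<String, Integer> blackboard = new HashMap<>();
        blackboard.put("x", 3);
        System.out.println(run(demoSources(), blackboard, 10));
        System.out.println(blackboard.get("z"));
    }
}
```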

6 Summarizing the issues and planning future work

Future experimental work

- Improving interface and diagnostic facilities for programmers

- Using AUSTIN for controlling the interaction between ‘services’

- Parallelizing computation

A natural application of the AUSTIN machine would be addressing "service grouping", in the sense of allowing web services or grid services to dynamically interoperate and integrate into higher-level services. Parallelization of pattern calls is already implemented in the current Java prototype through separate threads corresponding to different calls. Parallelization in the management of the “test pipe” and the “transition pipe”, based on the ideas of [3.6.3], should allow further computational benefits.
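As an illustration of running pattern calls in separate threads, the following Java sketch uses a standard `ExecutorService`. It is not the prototype's code: the class `ParallelCalls`, the method `callAll` and the string-based "answer" are hypothetical, and a thread pool is used here in place of whatever thread management the prototype actually adopts.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical sketch: one task per pattern call, executed on separate threads.
final class ParallelCalls {

    // Calls every pattern with the same context and collects the answers
    // in the order of the input list.
    static List<String> callAll(List<String> patterns, String context) {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, patterns.size()));
        try {
            List<Callable<String>> tasks = new ArrayList<>();
            for (String pattern : patterns) {
                // Placeholder for the real work of a pattern call.
                tasks.add(() -> pattern + " answered " + context);
            }
            List<String> answers = new ArrayList<>();
            for (Future<String> future : pool.invokeAll(tasks)) {
                answers.add(future.get());
            }
            return answers;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("pattern call failed", e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(callAll(Arrays.asList("chi1", "chi2", "chi3"), "s"));
    }
}
```

`invokeAll` returns its futures in the same order as the submitted tasks, so the answers can be merged back deterministically even though the calls themselves run concurrently.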

Future theoretical work

Thus, analyzing in detail how transitions interfere through overlapping sets of utterances can lead to the management of independent groups of transitions, with subsequent "subsets of utterances" in which local states are defined. Exploring the semantically driven parallelism in the context of demanding computations can lead to useful results.

7 References

- Roussel, "The birth of Prolog", in The second ACM SIGPLAN conference on History of programming languages, p.
- Shapiro, "Fully abstract denotational semantics for Flat Concurrent Prolog", in Proceedings of the IEEE Symposium on Logic in Computer Science.
- Gurevich, "On Kolmogorov Machines and Related Issues", in The Logic in Computer Science Column, Bulletin of European Assoc.

![Figure 10 applies to the stream of work conducted by Jean Sallantin and his group [Nobrega et al, 2000] around the interaction between humans and machines during the theory building process; it articulates the two conjectured reasoning modes into a fram](https://thumb-eu.123doks.com/thumbv2/1bibliocom/462519.68548/68.918.172.776.368.824/conducted-sallantin-nobrega-interaction-machines-articulates-conjectured-reasoning.webp)