7th International Conference on

Digital Arts

José Bidarra, Teresa Eça, Mírian Tavares, Rosangella Leote, Lucia Pimentel, Elizabeth Carvalho, Mauro Figueiredo (Eds.)

March 19–20, 2015 Óbidos, Portugal

Copyright © 2015 by Artech-International

All rights reserved. No part of this book may be reproduced or transmitted by any means, electronic or mechanical, including photocopying, recording or by any information storage and retrieval system, without permission in writing from the publisher.

Published by Artech-International.

Proceedings of 7th International Conference on Digital Arts

Editors: José Bidarra, Teresa Eça, Mírian Tavares, Rosangella Leote, Lucia Pimentel, Elizabeth Carvalho, Mauro Figueiredo

ISBN: 978-989-99370-0-0

Composition, pagination and graphical organization: José Inácio Rodrigues, Mauro Figueiredo


International Scientific and Art Committee Chairs

José Bidarra, DCeT, Univ. Aberta, Co-president (PT)

Teresa Eça, I2ADS, Univ. do Porto, Co-president (PT)

Mírian Tavares, FCHS, Univ. Algarve, Vice-president (PT)

Rosangella Leote, UNESP, Vice-president (BR)

Lucia Pimentel, UFMG, Vice-president (BR)

Steering Committee

Adérito Marcos, Chairman, DCeT-Univ. Aberta (PT)

Álvaro Barbosa, EA-UCP (PT)

Christa Sommerer, K.U. Linz (AT)

Henrique Silva, Bienal de Cerveira (PT)

Lola Dopico, FBA-Univ. Vigo (ES)

Nuno Correia, DIFCT-UNL (PT)

Seamus Ross, Univ. Toronto (CA)

Organizing Committee Chairs

Elizabeth Carvalho, Chairman, Univ. Aberta (PT)

Mauro Figueiredo, Co-chairman, Univ. Algarve (PT)

Local Organizing Committee

José Coelho, Univ. Aberta (PT)

Amílcar Martins, Univ. Aberta (PT)

Secretariat

ZatLab: Gesture Recognition Framework for Artistic Performance Interaction - Overview

André Baltazar

UCP - School of Arts, Center for Science and Technology in the Arts - Porto, Portugal

abaltazar@porto.ucp.pt

Luís Gustavo Martins

UCP - School of Arts, Center for Science and Technology in the Arts - Porto, Portugal

lmartins@porto.ucp.pt

ABSTRACT

The main problem this paper addresses is the real-time recognition of gestures, particularly in the complex domain of artistic performance. By recognizing the performer's gestures, one is able to map them to diverse controls, from lighting control to the creation of visuals, sound control or even music creation, thus allowing performers real-time manipulation of creative events.

The work presented here takes on this challenge with a multidisciplinary approach, based on some of the known principles of how humans recognize gestures, together with computer science methods to successfully complete the task. This paper therefore describes a gesture recognition framework developed mainly for use in the artistic performance domain. It first reviews previous work in the area, then describes the framework design, and finally reviews two artistic applications of the framework.

The overall goal of this research is to foster the use of gestures, in an artistic context, for the creation of new ways of expression.

Keywords

HCI, gesture recognition, machine learning, interactive performance

1. INTRODUCTION

There is so much information in a simple gesture. Why not use it to enhance a performance? We use our hands constantly to interact with things: we pick them up, move them, transform their shape, or activate them in some way. In the same unconscious way, we gesticulate to communicate fundamental ideas: stop; come closer; go there; no; yes; and so on. Gestures are thus a natural and intuitive form of both interaction and communication [19]. Children start to communicate through gestures (at around 10 months of age) even before they start speaking. There is also ample evidence that by the age of 12 months children are able to understand the gestures other people produce [15]. For the most part, gestures are considered an auxiliary means of communication to speech, though there are also studies that focus on the role of gestures in making interactions work [14].

Gestures and expressive communication are therefore intrinsically connected and, being intimately attached to our daily existence, both have a central position in today's technological society. However, the use of technology to understand gestures remains only partially explored: it has moved beyond its first steps, but the way towards systems fully capable of analyzing gestures is still long and difficult [17]. This is probably because, while the recognition of gestures is a rather trivial task for humans, translating gestures to the virtual world with a digital encoding is a difficult and ill-defined task. It is necessary to bridge this gap, stimulating a constructive interaction between gestures and technology, culture and science, performance and communication, thus opening new and unexplored frontiers in the design of a novel generation of multimodal interactive systems.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee.

Copyright remains with the author(s).

This paper proposes a new interactive gesture recognition framework called the ZatLab System (ZtS). The framework is flexible and extensible, and is therefore in permanent evolution, keeping up with the different technologies and algorithms that emerge at a fast pace nowadays. The basis of the proposed approach is to partition a temporal stream of captured movement into perceptually motivated descriptive features. The analysis of these features will then reveal (or not) the presence of a gesture, similar to the way a human “unconsciously” perceives a gesture [10].

The framework takes the view that perception primarily depends on previous knowledge or learning. Just like humans do, the framework has to learn gestures and their main features so that it can later identify them. It is, however, planned to be flexible enough to allow learning gestures on the fly.

Designed to be efficient, the resulting system can be used to recognize gestures in the complex environment of performance, as well as in “real-world” situations, paving the way to applications that can benefit not only the performing arts domain but also, probably in the near future, help the hearing impaired to communicate.

2. BACKGROUND

Several projects in interactive dance stand out as important references on how video analysis technologies have provided interesting ways of movement-music interaction. These include the early works of composers Todd Winkler [20] and Richard Povall [13], and the work of the choreographer Robert Wechsler with Palindrome¹. Mark Coniglio has also continued the development of his Isadora programming environment², alongside the ground-breaking work of Troika Ranch³.

¹ http://www.palindrome.de

² http://www.troikatronix.com/isadora.html

³ http://www.troikaranch.org/

Another example of research in this field is the seminal work of Camurri et al. at the InfoMus Lab, Genoa, with several studies published, including:

• An approach for the recognition of acted emotional states based on the analysis of body movement and gesture expressivity. By using non-propositional movement qualities (e.g. amplitude, speed and fluidity of movement) to infer emotions, rather than trying to recognise different gesture shapes expressing specific emotions, they proposed a method for the analysis of emotional behaviour based on both direct classification of time series and a model that provides indicators describing the dynamics of expressive motion cues [7].

• The Multisensory Integrated Expressive Environments, a framework for mixed reality applications in the performing arts such as interactive dance, music, or video installations, addressing the expressive aspects of non-verbal human communication [6].

• The research on the modelling of expressive gesture in multimodal interaction and on the development of multimodal interactive systems, explicitly taking into account the role of non-verbal expressive gesture in the communication process. In this perspective, a particular focus is on dance and music as first-class conveyors of expressive and emotional content [5].

• The EyesWeb software, one of the most remarkable and recognised works, used toward gesture and affect recognition in interactive dance and music systems [4].

Bevilacqua et al., at IRCAM, France, also worked on projects that used unfettered gestural motion for expressive musical purposes [8] [2]. The first [8] involved the development of software to receive data from a Vicon motion capture system and to translate and map it into music controls and other media controls such as lighting. The other [2] consisted in the development of the toolbox “Mapping is not Music” (MnM) for Max/MSP⁴, dedicated to mapping between gesture and sound.

More recently, Van Nort and Wanderley [11] presented the LoM toolbox. It gives artists and researchers access to tools for experimenting with different complex mappings that would be difficult to build from scratch (or from within Max/MSP) and which can be combined to create many different control possibilities. This includes rapid experimentation with mapping in the dual sense of choosing which parameters to associate between control and sound space, as well as the mapping of entire regions of these spaces through interpolation.

Schacher [16] sought answers to questions related to the perception and expression of gestures, in contrast to pure motion detection and analysis, and presented a discussion of a specific interactive dance project in which two complementary sensing modes were integrated to obtain higher-level expressive gestures. Polotti et al. [12] studied both sound as a means for gesture representation and gesture as embodiment of sound. Bokowiec [3] proposed a new term, “Kinaesonics”, to describe the coding of real-time one-to-one mapping of movement to sound and its expression in terms of hardware and software design.

Also, already within the scope of this project, the author published a first version of the framework at the Artech 2012 conference [1]. That paper described a modular system that allowed the capture and analysis of human movements in an unintrusive manner (using the Kinect as the video capture system and a custom application for video feature extraction and analysis developed using openFrameworks⁵). The extracted gesture features were subsequently interpreted in a machine learning environment (provided by Wekinator [9]) that continuously modified several input parameters in a computer music algorithm (implemented in ChucK [18]). That paper was one of the steps towards the framework presented here.

⁴ http://cycling74.com/products/max/

Despite all this relevant work in this sub-theme of the Human-Computer Interaction field, new technologies and new algorithms keep emerging that can be applied to improve on it and go further. This is the purpose of this work: to push beyond existing technology and contribute a new framework to analyse gestures and use them to interact with, manipulate and create events in a live performance setup.

3. THE FRAMEWORK

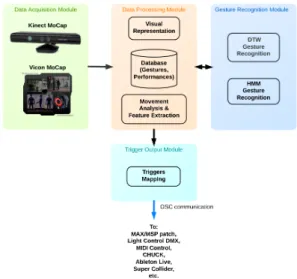

An overview of the proposed gesture recognition framework is presented in Figure 1. A summarized description of the main blocks that constitute the proposed system will be presented in this section.

The ZtS is a modular framework that allows the capture and analysis of human movements and the subsequent recognition of gestures present in those movements. The Data Acquisition Module processes data captured from a Microsoft Kinect or a Vicon Blade Motion Capture System. However, it can be easily modified to take input from any type of data acquisition hardware.

The acquired data goes through the Data Processing Module. Here, it is processed in terms of movement analysis and feature extraction for each body joint (relative positions, velocities, accelerations). This data also allows a visual representation of the captured skeleton.
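The per-joint features named above can be illustrated with a minimal Python sketch. It is not the ZtS implementation: the function names, the choice of a reference joint (for example the torso) for relative positions, the finite-difference scheme and the default frame rate are all assumptions made for illustration.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def relative_positions(joint: List[Vec3], reference: List[Vec3]) -> List[Vec3]:
    """Position of a joint relative to a reference joint, frame by frame."""
    return [tuple(j - r for j, r in zip(p, q)) for p, q in zip(joint, reference)]


def finite_difference(samples: List[Vec3], dt: float) -> List[Vec3]:
    """First-order backward difference between consecutive frames."""
    return [
        tuple((cur - prev) / dt for prev, cur in zip(a, b))
        for a, b in zip(samples, samples[1:])
    ]


def joint_features(joint: List[Vec3], reference: List[Vec3], fps: float = 30.0):
    """Return (relative positions, velocities, accelerations) for one joint.

    fps is a hypothetical capture rate; velocities have one sample fewer
    than positions, and accelerations one fewer than velocities.
    """
    dt = 1.0 / fps
    rel = relative_positions(joint, reference)
    vel = finite_difference(rel, dt)
    acc = finite_difference(vel, dt)
    return rel, vel, acc
```

Calling `joint_features(hand_track, torso_track)` on two equally long lists of 3D positions yields the three feature streams that a recognizer or a trigger mapping could then consume.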

In the Gesture Recognition Module, the movement features are taken as input and processed by two types of Machine Learning algorithms: Dynamic Time Warping (DTW) and Hidden Markov Models (HMM). If a gesture is recognized, it is passed to the Processing Module, which will store it, represent it or transmit it to the Trigger Output Module.
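To make the DTW idea concrete, the following is a minimal sketch of the textbook dynamic-programming formulation, followed by a nearest-template classifier. It is an illustration under stated assumptions, not the ZtS gesture recognition module: the Euclidean frame distance, the `classify` helper, the template dictionary and the rejection threshold are all hypothetical. The HMM recognizer is not sketched here.

```python
import math
from typing import Dict, Optional, Sequence

Frame = Sequence[float]  # one feature vector per captured frame


def dtw_distance(a: Sequence[Frame], b: Sequence[Frame]) -> float:
    """Classic DTW cost between two feature sequences of any lengths."""
    n, m = len(a), len(b)
    # cost[i][j] = best cumulative cost aligning a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = math.dist(a[i - 1], b[j - 1])  # Euclidean frame distance
            cost[i][j] = step + min(
                cost[i - 1][j],      # a advances, b repeats a frame
                cost[i][j - 1],      # b advances, a repeats a frame
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


def classify(
    sample: Sequence[Frame],
    templates: Dict[str, Sequence[Frame]],
    threshold: float,
) -> Optional[str]:
    """Return the label of the closest template, or None if none is close enough."""
    best_label, best_cost = None, math.inf
    for label, template in templates.items():
        d = dtw_distance(sample, template)
        if d < best_cost:
            best_label, best_cost = label, d
    return best_label if best_cost <= threshold else None
```

Because DTW warps the time axis, a gesture performed more slowly than its recorded template can still match it with a low cost, which is why this family of methods suits performers whose timing varies.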

In the Trigger Output Module, the selected movement features or detected gestures are mapped into triggers. These triggers can be continuous or discrete and can be sent to any program that supports the Open Sound Control (OSC) communication protocol.
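As an illustration of what such a trigger looks like on the wire, the sketch below encodes OSC 1.0 messages by hand with the Python standard library and sends them over UDP. It is only a sketch: the address patterns (`/zts/hand/speed`, `/zts/gesture`), the host and the port are hypothetical and not taken from the ZtS source. A float argument stands for a continuous trigger and an integer argument for a discrete one.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """OSC string: ASCII, null-terminated, zero-padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC message carrying float32 ('f') and int32 ('i') arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(tags) + payload


def send_trigger(sock: socket.socket, host: str, port: int, address: str, *args) -> None:
    """Send one trigger as a single UDP datagram."""
    sock.sendto(osc_message(address, *args), (host, port))


if __name__ == "__main__":
    # Hypothetical receiver, e.g. a patch listening on localhost port 9000.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        send_trigger(sock, "127.0.0.1", 9000, "/zts/hand/speed", 0.5)  # continuous
        send_trigger(sock, "127.0.0.1", 9000, "/zts/gesture", 3)       # discrete
```

Any OSC-capable program can receive these datagrams by listening on the chosen port and routing on the address pattern.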

In summary, the framework was implemented entirely as a standalone application to provide fast installation and a friendly graphical user interface (GUI), allowing users from different backgrounds and with different purposes to work with it. In addition, the integration of the Machine Learning algorithms provides a robust method for gesture recognition. The main contributions of the ZatLab System are presented next.

4. MAIN CONTRIBUTIONS

As concrete contributions, the various modular tools developed in the scope of this framework are now available as open source software, in the form of addons for openFrameworks⁶, namely:

• A skeleton joint representation module, which provides visual feedback of the subject being captured.

• An OSC transmission module able to read and transmit data in real time from the Vicon Blade Motion Capture proprietary program.

• A gesture recognition module based on DTW.

• A gesture recognition module based on HMM.

• The entire framework (ZtS), consisting of the tools listed previously working together in a single operational framework for the real-time recognition of gestures and event triggering.

⁵ http://www.openframeworks.cc

⁶ openFrameworks is a powerful C++ toolkit designed for developing real-time projects. It is nowadays a popular platform for experiments in generative sound art and for creating interactive installations and audiovisual performances.

All these will be available for download from the openFrameworks website. Meanwhile, they can be downloaded from GitHub⁷.

The two artistic applications of the ZtS framework, and the evaluation made of it, are described next.

Figure 1: ZatLab system architecture diagram.

Figure 2: The setup used for FestivalIN. On the left, the system setup, where the Kinect can be seen below the LCD TV. On the right top, an example of the visuals, and on the left bottom, a child playing with it.

5. ARTISTIC APPLICATIONS

5.1 The ZtS in a Performance

MisoMusic Portugal was commissioned to create an interactive multimedia Opera (to debut in September 2013) by the renowned Polish festival 'Warsaw Autumn' (Warszawska Jesień)⁸.

⁷ https://github.com/andrebaltaz/ofxZtS

⁸ http://warszawska-jesien.art.pl/en/wj2013/home

Knowing the work developed in the scope of this project, MisoMusic proposed the use of the ZtS framework in the Opera to control real-time audio samples and the direct sound input of the voice of one performer.

The framework had to be tailored to the needs of the composer/performer (Miguel Azguime). He wanted to control sound samples and live voice input with his movements and gestures. In this case, the framework was adapted with several triggers that controlled expressive sounds in a Max/MSP patch.

The ZtS framework enabled several types of musical expression:

• The triggering of sound samples with the movement velocity of the performer's hands.

• Access to eight banks of sound samples, either by performing a gesture or by pressing a MIDI pedal.

• The triggering of the capture of a sound action (sound sample or live voice input). The performer was able to freeze a sound by performing a holding-hands pose. This enabled him to control the captured sound in terms of pitch, reverb, feedback and loudness. When he wanted to release the sound, he just needed to shake his arms.

The framework was used for the solo of one of the main Opera characters, performed by Miguel Azguime himself (video at https://andrebaltazar.wordpress.com). The system travelled with the Opera throughout the entire tour, being presented in Lisbon, Poland and Sweden.

In sum, the result of the developments made specifically for the Opera was very interesting. The relation between human movement and sound manipulation was immediately perceived by the audience, arousing particular interest during that part of the piece. Of course, credit also goes to the performer, Miguel, who learned very quickly to interact with the framework and get exactly what he wanted from it, when he wanted, thus enabling him to add extra layers of emotion and enhancement to the solo he performed.

5.2 The Performer Opinion

Once the Opera presentations were finished, we asked Miguel Azguime, the author/performer and main user of the ZtS framework, to answer a few questions about the system and to give his opinion about it. Here is a literal quote of an excerpt from the text he sent.

“A clear perception to the public that the gesture is that of inducing sound, the responsiveness of the system to allow clarification of musical and expressive speech, effectively ensuring the alternation between sudden, rapid, violent gestures, sounds on the one hand and modular suspensions by gesture in total control of the sound processing parameters on the other, constituted a clear enrichment both in terms of communication (a rare cause and effect approach in the context of electronic music and it certainly is one of its shortcomings compared with music acoustic instruments) and in terms of expression by the ability of the system to translate the language and plastic body expression.

Clearly, as efficient as the system may be, the results thereof and eventual artistic validation, are always dependent on composite music and the way these same gestures are translated into sound (or other interaction parameters) and therefore is in crossing gesture with the sound and the intersection of performance with the musical composition (in this case) that is the crux of the appreciation of Zatlab. However, regardless of the quality of the final result, the system has enormous potential as a tool sufficiently open and malleable in order to be suitable for different aesthetic, modes of operation and different uses.”

5.3 The ZtS as Public Installation

Another application of the system consisted in presenting it as an interactive installation at Festival Innovation and Creativity (FestivalIN)⁹, Lisbon. FestivalIN was announced as the biggest innovation and creativity aggregating event held in Portugal, at the International Fair of Lisbon.

Building on the developments made for the Opera, the framework was adapted to be more responsive and easier to interact with. Users were able to trigger and control sound samples, much like Miguel did in the Opera, although they did not have the same level of control.

Since the purpose was to install the application at a kiosk and leave it there for people to interact with, the visuals were further developed to arouse curiosity and attract users. The human body detection algorithm was also customized to restrict control, amongst the crowd, to only the person closest to and most centered in front of the system.
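The paper does not detail how the controlling person was chosen, so the following is only one plausible sketch of such a filter. It assumes each tracked user is summarized by a torso position in sensor coordinates (x as lateral offset from the optical axis, z as depth, both in metres), and it combines depth and off-centre distance into a single score through a hypothetical `center_weight` parameter.

```python
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def select_active_user(
    torsos: Dict[int, Vec3], center_weight: float = 1.0
) -> Optional[int]:
    """Pick the tracked user who is closest to the sensor and most centered.

    torsos maps a user id to an (x, y, z) torso position. The score is the
    depth z plus a penalty proportional to the lateral offset |x|; the user
    with the lowest score gets control. Returns None when nobody is tracked.
    """
    if not torsos:
        return None

    def score(item: Tuple[int, Vec3]) -> float:
        _, (x, _y, z) = item
        return z + center_weight * abs(x)

    return min(torsos.items(), key=score)[0]
```

Raising `center_weight` favours people standing directly in front of the screen over people who are nearer but passing by at the edge of the field of view.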

The response to the system was very good, in particular amongst children. All day long there was someone playing with it. The fact that people were detected immediately, whether they were just passing by or really wanted to interact, was a key factor in the system's popularity. People saw their skeleton mirrored on the screen and waved at it, thereby triggering sounds, which built up their curiosity. Soon enough they understood the several possibilities and were engaged, interacting and creating musical expressions.

In Figure 2 you can see the setup and some interactions with the system.

6. CONCLUSIONS

This paper proposed a flexible and extensible framework for the recognition of gestures in real time. The goal of the proposed framework is to capture gestures (in a non-intrusive way) and recognize them, thus allowing triggers to be set and performance events to be controlled, including musically expressive events. The main improvement this framework brings over previous ones is the integration of Machine Learning algorithms (namely DTW and HMM) for gesture recognition.

Two artistic applications of the system were presented. The first one, in particular, revealed the importance of the framework in the NIME (New Interfaces for Musical Expression) domain.

A software implementation of the system described in this paper is available as free and open source software. Together with the belief that this work showed the potential of gesture recognition, it is expected that the software implementation may stimulate further research in this area, as it can have significant impact in many applications such as interactive installations and performances, and in Human-Computer Interaction per se.

7. ACKNOWLEDGMENTS

This work was developed under the Portuguese Science and Technology Foundation Grant number SFRH/BD/61662/2009.

8. REFERENCES

[1] A. Baltazar, L. Martins, and J. Cardoso. ZATLAB: A Gesture Analysis System to Music Interaction. In 6th International Conference on Digital Arts (ARTECH 2012), 2012.

⁹ http://www.festivalin.pt/

[2] F. Bevilacqua and R. Muller. A gesture follower for performing arts. Proceedings of the International Gesture . . . , pages 3–4, 2005.

[3] M. A. Bokowiec. V ! OCT ( Ritual ): An Interactive Vocal Work for Bodycoder System and 8 Channel Spatialization. In NIME 2011 Proceedings, pages 40–43, 2011.

[4] A. Camurri, S. Hashimoto, M. Ricchetti, A. Ricci, K. Suzuki, R. Trocca, and G. Volpe. Eyesweb: Toward gesture and affect recognition in interactive dance and music systems. Comput. Music J., 24(1):57–69, Apr. 2000.

[5] A. Camurri, C. L. Krumhansl, B. Mazzarino, and G. Volpe. An Exploratory Study of Anticipating Human Movement in Dance. Stimulus, (i):2–5, 2004.

[6] A. Camurri, G. Volpe, G. D. Poli, and M. Leman. Communicating expressiveness and affect in multimodal interactive systems. Multimedia, IEEE, 12(1):43–53, Jan. 2005.

[7] G. Castellano, S. Villalba, and A. Camurri. Recognising human emotions from body movement and gesture dynamics. Affective computing and intelligent . . . , pages 71–82, 2007.

[8] C. Dobrian and F. Bevilacqua. Gestural control of music: using the vicon 8 motion capture system. In Proceedings of the 2003 conference on New interfaces for musical expression, pages 161–163. National University of Singapore, 2003.

[9] R. Fiebrink, D. Trueman, and P. Cook. A metainstrument for interactive, on-the-fly machine learning. In Proc. NIME, volume 2, page 3, 2009.

[10] A. Kendon. Gesticulation and speech: two aspects of the process of utterance. In M. R. Key, editor, The Relationship of Verbal and Nonverbal Communication, pages 207–227. Mouton, The Hague, 1980.

[11] D. Van Nort and M. M. Wanderley. The LoM Mapping Toolbox for Max/MSP/Jitter. In Proceedings of the International Computer Music Conference, New Orleans, USA, 2006.

[12] P. Polotti and M. Goina. EGGS in Action. In NIME, number June, pages 64–67, 2011.

[13] R. Povall. Technology is with us. Dance Research Journal, 30(1):1–4, 1998.

[14] W.-M. Roth. Gestures: Their Role in Teaching and Learning. Review of Educational Research, 71(3):365–392, Jan. 2001.

[15] M. L. Rowe and S. Goldin-Meadow. development. First Language, 28(2):182–199, 2009.

[16] J. C. Schacher. Motion To Gesture To Sound : Mapping For Interactive Dance. Number Nime, pages 250–254, 2010.

[17] G. Volpe. Expressive Gesture in Performing Arts and New Media: The Present and the Future. Journal of New Music Research, 34(1):1–3, Mar. 2005.

[18] G. Wang, P. Cook, and Others. ChucK: A concurrent, on-the-fly audio programming language. In Proceedings of International Computer Music Conference, pages 219–226, 2003.

[19] R. Watson. A survey of gesture recognition techniques. Technical report TCD-CS-93-11, Department of Computer Science, Trinity College . . . , (July), 1993.

[20] T. Winkler. Making motion musical: Gesture mapping strategies for interactive computer music. 1995.
