Dual Transform based Feature Extraction for Face Recognition

Ramesha K1, K B Raja2

1Department of Telecommunication Engineering, Vemana Institute of Technology, Koramangala, Bangalore-560034, India

2Department of Electronics and Communication Engineering, University Visvesvaraya College of Engineering, Bangalore University, K R Circle, Bangalore-560001, India

Abstract

Face recognition is a biometric tool for authentication and verification that has received great emphasis in both research and practical applications. With increased security requirements, fully automated biometric identification and verification of persons has received extensive attention over the past few years. In this paper, Dual Transform based Feature Extraction for Face Recognition (DTBFEFR) is proposed. The images in the databases are of different sizes and formats, and hence are converted into a standard dimension appropriate for applying the Dual Tree Complex Wavelet Transform (DT-CWT). Variations due to expression and illumination are compensated by applying the Discrete Wavelet Transform (DWT) to an image; the DWT also reduces the image dimension by decomposition. The DT-CWT is applied to the LL subband generated by the two-level DWT, and the resulting DT-CWT coefficients form the feature vectors. The feature vectors of the database and test faces are compared using Random Forest, Euclidean Distance and Support Vector Machine matching algorithms. The correct recognition rate, false rejection rate, false acceptance rate and efficiency of the proposed method are better than those of existing techniques.

Key Words: Face Recognition, Histogram Equalization, Discrete Wavelet Transform, Random Forest, Dual Tree Complex Wavelet Transform, Support Vector Machine.

1. INTRODUCTION

Biometrics refers to the science of analyzing human body characteristics for security purposes; the word is derived from the Greek words [1] bios (life) and metrikos (measure). Most biometric systems employed in real-world applications are unimodal: they depend on a single source of information for authentication. Biometric identification is becoming more popular nowadays due to the security requirements of society in fields such as information, business, military and e-commerce. In the mid-19th century, the criminal identification division of the police department in Paris [2] developed and practiced the idea of using several body characteristics to identify criminals. Since then, fingerprint recognition has emerged rapidly in law enforcement for identifying criminals. The different techniques for recognizing a person are based on (i) physiological characteristics such as fingerprint, face, iris, retinal blood vessel patterns, hand geometry, vascular pattern and DNA, and (ii) behavioral characteristics such as voice, signature and keystroke. Verification of a person using biometrics is more secure than traditional systems such as Personal Identification Numbers (PINs), passwords and smartcards, since biometric parameters are parts of the human body and hence cannot be stolen or modified. Face recognition is a nonintrusive method, and facial images are the most common biometric characteristic used by humans to recognize a person. The popular approaches for face recognition are based on either (i) the location and shape of facial attributes such as the eyes, eyebrows, nose, lips and chin, and their spatial relationships, or (ii) the overall analysis of the face image, which represents a face as a weighted combination of a number of canonical faces. Some face recognition systems are commercially available and perform reasonably well, but they impose restrictions on variations such as illumination, expression, pose and occlusion.

Support Vector Machine (SVM), Neural Network [17] and Random Forest (RF) [18, 19] may be used for matching.

2. RELATED WORK

Sidra Batool Kazmi et al., [20] presented automatic recognition of facial expressions from face images. The features are extracted by performing a three-level 2-D discrete wavelet decomposition of the image; further dimensionality reduction is achieved by Principal Component Analysis (PCA), and the features are supplied to a bank of five binary neural networks, each trained for a particular expression class using the one-against-all approach. The neural network outputs are combined using a maximum function. Taskeed Jabid et al., [21] presented a novel local feature descriptor, the Local Directional Pattern (LDP), for recognizing human faces. The LDP features are obtained by computing the edge response values in all eight directions at each pixel position and generating a code from the relative strength magnitudes. Each face is represented as a collection of LDP codes for the face recognition process. Xiaoyang Tan and Bill Triggs [22] introduced an image preprocessing stage based on gamma correction, Difference of Gaussian filtering and robust variance normalization. They found Local Binary Pattern (LBP) to be the best of the features considered, and improved on its performance in two ways: by introducing a 3-level generalization of LBP, Local Ternary Patterns (LTP), and by using an image similarity metric based on distance transforms of LBP image slices.

Vaishak Belle et al., [23] presented a system for detecting and recognizing faces in images in real time which is able to learn new identities in instants. To achieve real-time performance, random forests are used for both detection and recognition, and are compared with well-known techniques such as boosted face detection and SVMs for identification. Bo Du et al., [24] presented comparisons of several preprocessing algorithms such as Histogram Equalization, Histogram Specification, Logarithm Transform, Gamma Intensity Correction and Self Quotient Image for illumination-insensitive face recognition. The analysis is done on the CMU-PIE, FERET and CAS-PEAL face databases. Zhi-Kai Huang et al., [25] presented a method for multi-pose face recognition in color images to address the problems of illumination and pose variation. The color multi-pose face image features are extracted using Gabor wavelet filters with different orientations and scales; the mean and standard deviation of the filtered output are then computed as features and fed into an SVM for face recognition. Harin Sellahewa and Sabah A Jassim [26] presented a fusion strategy for a multi-stream face recognition scheme using DWT under varying illumination conditions and facial expressions. Based on experimental results, they argue for an image quality-based, adaptive fusion approach to wavelet-based multi-stream face recognition.

K Jaya Priya and R S Rajesh [27] presented a face recognition method based on local appearance feature extraction using DT-CWT. Two parallel DWTs with different low-pass and high-pass filters at different scales are used to implement the DT-CWT. Six different directional subbands with complex coefficients are generated from linear combinations of the subbands produced by the two parallel DWTs. The face is divided into several non-overlapping parallelogram blocks, and the local mean, standard deviation and energy of the complex wavelet coefficients are used to describe the face image. K Jaya Priya and R S Rajesh [28] proposed a multi-resolution and multi-direction method for expression- and pose-invariant face recognition based on local fusion of the magnitudes of the DT-CWT detail subbands at each level. Multi-orientation information on each image is obtained from a subset of band-filtered images containing DT-CWT coefficients that characterize the face textures. The overall texture features of an image at each resolution are obtained through fusion of the magnitudes of the detail subbands. The fused subbands are divided into small subblocks, from which compact and meaningful feature vectors are extracted using simple statistical measures. Yue-Hui Sun and Ming-Hui Du [29] proposed face detection using DT-CWT on spectral histograms. A Laplacian of Gaussian filter and DT-CWT filters are used to capture the spatial and frequency properties of human faces at different scales and orientations. The filter responses are then summarized into multi-dimensional histograms, and the histogram matrix is fed to a trained SVM for classification.

3. BACKGROUND

In this section, the necessary background for the work, namely HE, DCT, DWT and DT-CWT, is discussed.

3.1 Histogram Equalization (HE)

where all the values are equi-probable. For an image I(x, y) with k discrete gray values, the histogram is given by Eq. (1)

$$P(i) = \frac{n_i}{N} \qquad (1)$$

where i = 0, 1, 2, ..., k-1 is the gray level, $n_i$ is the number of pixels with gray level i, and N is the total number of pixels in the image.

The transformation to a new intensity value is defined by Eq. (2)

$$I_{HE} = \sum_{i=0}^{k-1} \frac{n_i}{N} = \sum_{i=0}^{k-1} P(i) \qquad (2)$$

In practice the sum is cumulative, taken up to the gray level of the pixel being transformed, so that the mapping follows the cumulative distribution of the histogram.

The output values lie in the range [0, 1]. To return the pixel values to the original range, they must be rescaled by a factor of k−1. The image enhanced using HE is shown in Figure 1.

Fig. 1: (a) Original Image (b) HE Image.
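The mapping of Eqs. (1) and (2) can be sketched in a few lines of NumPy. This is a minimal illustration rather than the implementation used in the experiments: the function name `histogram_equalize`, the default of k = 256 gray levels and the rounding step are assumptions, and the sum in Eq. (2) is taken cumulatively up to each gray level.

```python
import numpy as np

def histogram_equalize(image, k=256):
    """Equalize an integer gray-level image with k levels (values 0..k-1)."""
    img = np.asarray(image)
    # n_i: number of pixels at each gray level i; img.size is N.
    n = np.bincount(img.ravel(), minlength=k)
    p = n / img.size            # Eq. (1): P(i) = n_i / N
    cdf = np.cumsum(p)          # cumulative sum of P(i), values in [0, 1]
    # Rescale by k-1 to return to the original gray-level range.
    mapping = np.round(cdf * (k - 1)).astype(img.dtype)
    return mapping[img]         # look up the new intensity of every pixel

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dark = rng.integers(0, 64, size=(32, 32), dtype=np.uint8)  # low-contrast image
    out = histogram_equalize(dark)
    print("input range:", dark.min(), dark.max())
    print("output range:", out.min(), out.max())
```

Because the cumulative sum reaches 1 at the brightest gray level present, that level is always mapped to k−1, which stretches a low-contrast image over the full range.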

3.2 Discrete Cosine Transform

The DCT [31] is the illumination normalization approach for face recognition under varying lighting conditions. The main idea of the method is to reduce illumination variation by eliminating low frequency coefficients in the logarithm DCT domain. The 2D M*N DCT is defined using Eq. (3)

) 3 ...( ... 2N 1)v (2y cos 2M 1)u (2x cos 1 -M 0 x 1 -N 0 y f(x.y) α(u)α(v) v) C(u,

∏ + ∏ + ∑ = ∑= =and the inverse transform is given in Eq. (4)

) 4 ..( ... ... 2N 1)v (2y cos 2M 1)u (2x cos v) cos(u, 1 -M 0 u 1 -N 0 v α(u)α(v) v) f(u,

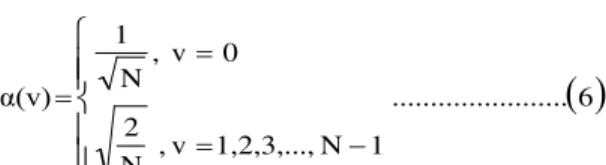

∏ + ∏ + ∑ = ∑= =and the coefficients are given in Equations (5) and (6)

( )

5 ... ... ... 1 M 1,2,3,..., u , M 2 0 u , M 1 α(u) − = = =( )

6 ... ... ... 1 N 1,2,3,..., v , N 2 0 v , N 1 α(v)

− = = =Normally illumination lies in the low frequency band, and it can be reduced by removing low frequency components. This is achieved by setting to zero, this works like an ideal high pass filter. The DCT coefficient determines the overall illumination of a face image, so, it sets to the same value to obtain the desired uniform illumination as in Eq. (7)

( )

7 ... ... ... ... MN logμo C(0.0)=The value µ0 is chosen near the middle level of the original image. This approach, by discarding the DCT coefficients of the original image, only adjusts the brightness, whereas, by discarding the DCT coefficients of the logarithm image, adjusts the illumination and

recovers the reflectance characteristic of the face.
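The normalization described above can be sketched with an explicit orthonormal DCT matrix, which mirrors Eq. (3) and the coefficients α(u), α(v) directly. Several choices here are assumptions made only for illustration: the function names, the triangular low-frequency region u + v < `n_discard`, the default µ0 = 128 (the middle of an 8-bit range) and the +1 offset that avoids log(0). The result is returned in the logarithm domain.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal 1-D DCT matrix: D[u, x] = alpha(u) * cos(pi (2x+1) u / (2n))."""
    u = np.arange(n).reshape(-1, 1)
    x = np.arange(n).reshape(1, -1)
    d = np.cos(np.pi * (2 * x + 1) * u / (2 * n))
    alpha = np.full((n, 1), np.sqrt(2.0 / n))   # alpha(u) for u >= 1
    alpha[0, 0] = np.sqrt(1.0 / n)              # alpha(0)
    return alpha * d

def log_dct_normalize(image, n_discard=3, mu0=128.0):
    """Illumination normalization in the logarithm DCT domain (log-domain output)."""
    f = np.log(np.asarray(image, dtype=np.float64) + 1.0)  # +1 avoids log(0)
    M, N = f.shape
    Dm, Dn = dct_matrix(M), dct_matrix(N)
    C = Dm @ f @ Dn.T                        # forward 2-D DCT, Eq. (3)
    u, v = np.indices((M, N))
    C[(u + v) < n_discard] = 0.0             # assumed low-frequency region
    C[0, 0] = np.log(mu0) * np.sqrt(M * N)   # uniform illumination, Eq. (7)
    return Dm.T @ C @ Dn                     # inverse 2-D DCT, Eq. (4)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    face = rng.integers(0, 256, size=(16, 16)).astype(np.float64)
    shaded = face * np.linspace(0.2, 1.0, 16)   # synthetic left-to-right shading
    out = log_dct_normalize(shaded)
    print(out.shape, float(out.mean()))
```

With the orthonormal scaling, a constant image of value c has C(0,0) = c·√(MN) and all other coefficients zero, which is why Eq. (7) carries the factor √(MN).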

3.3 2D-Discrete Wavelet Transform

The 2D-DWT of a signal x is implemented by iterating the 2D analysis filter bank on the lowpass subband image. Here, at each scale there are three subbands instead of one. Three wavelets are associated with the 2D wavelet transform; Figure 2 illustrates them as gray-scale images. The first two wavelets are oriented in the vertical and horizontal directions, while the third does not have a dominant orientation: it mixes two diagonal orientations and gives rise to the checkerboard artifact.

filtering, thresholding and quantization upsets the delicate balance between the forward and inverse transforms, leading to artifacts in the reconstructed signal. (iv) Lack of Directionality: while Fourier sinusoids in higher dimensions correspond to highly directional plane waves, the standard tensor-product construction of M-D wavelets produces a checkerboard pattern that is simultaneously oriented along several directions. This lack of directional selectivity complicates the modeling and processing of geometric image features such as ridges and edges.

Fig. 2: 2D-Wavelets

3.4 Dual-Tree Complex Wavelet Transform (DT-CWT)

Fortunately, there are solutions to the four DWT shortcomings. The Dual Tree Complex Wavelet Transform [33] is a form of discrete wavelet transform which generates complex coefficients by using a dual tree of wavelet filters to obtain their real and imaginary parts. DT-CWT has the following properties that overcome the drawbacks of DWT:

1. Approximate shift invariance;
2. Good directional selectivity in 2 dimensions (2-D) with Gabor-like filters (also true for higher dimensionality, m-D);
3. Perfect reconstruction;
4. Limited redundancy: 2× redundancy in 1-D ($2^d$ for d-dimensional signals), which is less than the $\log_2 N$× redundancy of a perfectly shift-invariant DWT;
5. Efficient order-N computation.

DT-CWT introduces limited redundancy ($2^m$:1 for m-dimensional signals) and allows the transform to provide approximate shift invariance and directionally selective filters while preserving the properties of perfect reconstruction and computational efficiency with balanced frequency responses. The only drawback is a moderate redundancy:

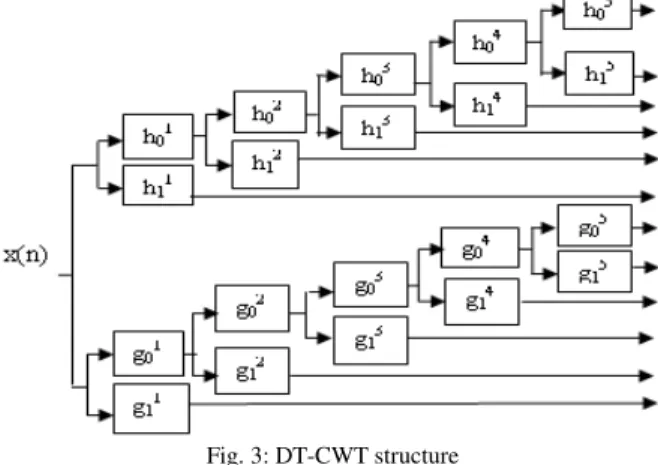

The dual-tree complex DWT of a signal x(n) is implemented using two critically-sampled DWTs in parallel on the same data, as in Figure 3. To gain an advantage over the DWT, the filters in the upper and lower DWTs are different and are designed so that the subband signals of the upper DWT can be interpreted as the real part of a complex wavelet transform, and those of the lower DWT as the imaginary part. When designed in this way, the DT-CWT is nearly shift invariant, in contrast to the classic DWT. The DT-CWT can be used to implement 2D wavelet transforms in which each wavelet is oriented, which is useful for image processing applications such as denoising and enhancement.

Fig. 3: DT-CWT structure

There are two types of 2D dual-tree wavelet transform: real and complex. The real 2-D dual-tree DWT is 2-times expansive and the complex 2-D dual-tree DWT is 4-times expansive; both have wavelets oriented in six distinct directions.

Real 2-D Dual-Tree Wavelet Transform: The real 2-D dual-tree DWT of an image x is implemented using two critically sampled separable 2D-DWTs in parallel. The sum and difference are then taken for each pair of subbands. The six wavelets associated with the real 2D dual-tree DWT are each oriented in a different direction, as illustrated in Figure 4. Each subband of the 2-D dual-tree DWT corresponds to a specific orientation.

Fig. 4: Directional wavelets for reduced 2-D DWT
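The sum-and-difference step of the real 2-D dual-tree DWT can be sketched as follows. The two separable 2D-DWTs themselves, and the specific filter pairs that make the result directional, are not shown; `tree_a` and `tree_b` are hypothetical names for the three detail subbands produced by each of the two DWTs, and the 1/√2 normalization is the usual choice that keeps the total energy unchanged.

```python
import numpy as np

def oriented_subbands(tree_a, tree_b):
    """Combine matching detail subbands of two separable 2-D DWTs.

    tree_a, tree_b: sequences of three equally sized arrays (the three
    detail subbands of each DWT). Returns six arrays: a normalized sum
    and difference for every pair.
    """
    out = []
    for a, b in zip(tree_a, tree_b):
        a = np.asarray(a, dtype=np.float64)
        b = np.asarray(b, dtype=np.float64)
        out.append((a + b) / np.sqrt(2.0))  # sum of the subband pair
        out.append((a - b) / np.sqrt(2.0))  # difference of the subband pair
    return out

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    tree_a = [rng.standard_normal((8, 8)) for _ in range(3)]
    tree_b = [rng.standard_normal((8, 8)) for _ in range(3)]
    bands = oriented_subbands(tree_a, tree_b)
    print(len(bands), bands[0].shape)
```

Three subband pairs give six outputs, which matches the six orientations of the real 2-D dual-tree DWT.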

Complex 2-D Dual-Tree Wavelet Transform: As in the real case, the sum and difference of subband images are taken to obtain the oriented wavelets.

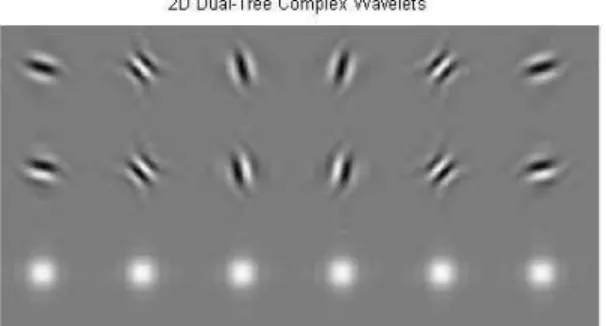

Fig. 5: 2D Dual-Tree Complex Wavelets

The six wavelets displayed in the first and second rows represent the real and imaginary parts of a set of six complex wavelets, and the third row represents the magnitudes of the six wavelets. The magnitudes of the complex wavelets have bell-shaped rather than oscillatory behavior. The resulting complex wavelets are approximately one-sided in the frequency domain. The transform can therefore differentiate positive and negative frequencies, and produces six subbands oriented at ±15°, ±45° and ±75°.
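Given the real-part and imaginary-part subbands of the six oriented complex wavelets, magnitude and phase values follow directly from the complex coefficients. The sketch below assumes the subbands are already available as arrays; the function name and the simple concatenation of magnitude and phase into one vector are illustrative assumptions, since the exact feature layout is not specified here.

```python
import numpy as np

def magnitude_phase_features(real_subbands, imag_subbands):
    """Flatten magnitude and phase of complex subband coefficients into one vector."""
    feats = []
    for re, im in zip(real_subbands, imag_subbands):
        # Complex coefficient = real-tree output + j * imaginary-tree output.
        c = np.asarray(re, dtype=np.float64) + 1j * np.asarray(im, dtype=np.float64)
        feats.append(np.abs(c).ravel())     # magnitude: bell-shaped, non-oscillatory
        feats.append(np.angle(c).ravel())   # phase in radians, in (-pi, pi]
    return np.concatenate(feats)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = [rng.standard_normal((16, 16)) for _ in range(6)]
    imag = [rng.standard_normal((16, 16)) for _ in range(6)]
    print(magnitude_phase_features(real, imag).shape)
```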

4. MODEL

In this section, the proposed DTBFEFR model is discussed. Face recognition using DWT and DT-CWT under illumination, expression and pose variations with different databases is shown in Figure 6.

Fig. 6: Block diagram of the Proposed DTBFEFR model.

4.2 Proposed DTBFEFR Model

The face recognition model using DWT and DT-CWT for illumination, expression and pose variations with different kinds of databases is shown in Figure 6.

Face Database: The available face databases L-Spacek, ORL, Yale-B, JAFEE and CMU-PIE are considered for experimental purposes. Each image is preprocessed by resizing it to $2^n \times 2^n$, which is suitable for DT-CWT.

DWT: The DWT is used to decompose the original image into four subbands. The significant information of the original image is present in the low-low (LL) subband. The two-level DWT is applied on the face image to further reduce the image dimension while retaining the significant information, as shown in Figure 7. The DWT reduces expression and illumination variations and gives better contrast and resolution, as shown in Figure 8.


Fig.7: (a) Original Image, (b) 1-level Wavelet Decomposition and (c) 2-level Wavelet Decomposition.


Fig. 8: (a) Original Image (b) DWT Image
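The two-level decomposition that keeps only the LL subband can be sketched with the Haar wavelet. The choice of Haar is an assumption made for brevity, as the wavelet family is not named here; the function names are illustrative, the LH/HL labels follow one common convention, and the image sides must be divisible by 2 at every level.

```python
import numpy as np

def haar_dwt2(image):
    """One level of the 2-D Haar DWT; returns the subbands (LL, LH, HL, HH)."""
    x = np.asarray(image, dtype=np.float64)
    # Low-pass and high-pass filtering with downsampling along each row.
    lo = (x[:, 0::2] + x[:, 1::2]) / np.sqrt(2.0)
    hi = (x[:, 0::2] - x[:, 1::2]) / np.sqrt(2.0)
    # The same filtering with downsampling along each column.
    ll = (lo[0::2, :] + lo[1::2, :]) / np.sqrt(2.0)
    lh = (lo[0::2, :] - lo[1::2, :]) / np.sqrt(2.0)
    hl = (hi[0::2, :] + hi[1::2, :]) / np.sqrt(2.0)
    hh = (hi[0::2, :] - hi[1::2, :]) / np.sqrt(2.0)
    return ll, lh, hl, hh

def ll_subband(image, levels=2):
    """Iterate the DWT on the LL subband and return the final LL subband."""
    ll = np.asarray(image, dtype=np.float64)
    for _ in range(levels):
        ll = haar_dwt2(ll)[0]
    return ll

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    face = rng.integers(0, 256, size=(128, 128)).astype(np.float64)
    print(ll_subband(face, levels=2).shape)   # each level halves both sides
```

Each level halves both image sides, so two levels reduce the number of pixels passed to the next stage by a factor of 16.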

Feature Extraction by DT-CWT: DWT alone gives a larger feature length and very low accuracy compared to DT-CWT. The DT-CWT overcomes the drawbacks of DWT and provides more information along particular directions, namely ±15°, ±45° and ±75°.

The number of features and the dimensions are reduced as the number of DT-CWT levels increases. The numbers of features for level-1, level-2, level-3, level-4 and level-5 are 393216, 98304, 24576, 6144 and 1536 respectively, and the corresponding reduction in image size is shown in Figure 9. In the proposed algorithm, 5-level DT-CWT is used to generate magnitude and phase features of length 1536. The reduction in the number of features reduces the memory requirement and the computational time.


Fig. 9: DT-CWT images at different levels.
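The feature counts quoted for the five levels are consistent with six oriented subbands whose side is halved at every level, starting from a 512 × 512 input. That input size is inferred from the numbers rather than stated, so the short check below should be read as an assumption; the function name is illustrative.

```python
def dtcwt_feature_count(side, level, n_subbands=6):
    """Coefficients at a given DT-CWT level for a side x side input."""
    sub = side // (2 ** level)        # subband side after `level` halvings
    return n_subbands * sub * sub

counts = [dtcwt_feature_count(512, level) for level in range(1, 6)]
print(counts)   # [393216, 98304, 24576, 6144, 1536]
```

Each additional level divides the count by 4, so moving from level 1 to level 5 reduces the feature length by a factor of 256.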

Face Classification: Matching techniques such as Euclidean Distance (ED), RF and SVM are used for face classification, and the Correct Recognition Rate (CRR), False Acceptance Rate (FAR), False Rejection Rate (FRR) and Equal Error Rate (EER) are computed.
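A minimal sketch of ED matching and of the threshold-based error rates is given below. The function names and the acceptance rule (accept when the distance does not exceed the threshold) are assumptions for illustration. FRR is computed over test images of persons inside the database and FAR over persons outside it, in line with the evaluation protocol of Section 6; the EER is the error rate at the threshold where FAR and FRR are equal.

```python
import numpy as np

def nearest_match(test_vec, db_vecs, db_labels):
    """Return (label, distance) of the database feature vector closest to test_vec."""
    db = np.asarray(db_vecs, dtype=np.float64)
    t = np.asarray(test_vec, dtype=np.float64)
    d = np.linalg.norm(db - t, axis=1)      # Euclidean distance to every entry
    i = int(np.argmin(d))
    return db_labels[i], float(d[i])

def far_frr(genuine_dists, impostor_dists, threshold):
    """FAR and FRR in percent; a test face is accepted if distance <= threshold."""
    genuine = np.asarray(genuine_dists, dtype=np.float64)
    impostor = np.asarray(impostor_dists, dtype=np.float64)
    frr = 100.0 * np.mean(genuine > threshold)     # persons in the database rejected
    far = 100.0 * np.mean(impostor <= threshold)   # persons outside the database accepted
    return float(far), float(frr)

if __name__ == "__main__":
    db = [[0.0, 0.0], [3.0, 4.0]]
    print(nearest_match([3.0, 3.0], db, ["person_a", "person_b"]))
    for th in (0.25, 0.5, 1.0):
        print(th, far_frr([0.1, 0.2, 0.9], [0.5, 1.0, 1.5, 2.0], th))
```

Sweeping the threshold as in the usage example traces out the FAR and FRR curves: raising the threshold lowers FRR and raises FAR.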

5. ALGORITHM

Problem Definition: A biometric face recognition method using DWT and DT-CWT with multiple matching classifiers is proposed to identify a person.

The Objectives:

• Face recognition using DTBFEFR.
• Reduce the values of FAR, FRR and EER.
• Increase the overall efficiency.

The DTBFEFR algorithm to verify a person using DWT and DT-CWT is given in the Table 1.

Table 1: Algorithm of DTBFEFR

6.

RESULTS

AND PERFORMANCE

ANALYSIS

For performance analysis we used available five databases such as L-Spacek, JAFEE, ORL, Yale-B and CMU-PIE. The L-spacek database consists of 119 persons with 19 image samples per person. The database consists of 900 images with no background, light variation, hair style change and with minor variations in head turn, tilt and slant. To evaluate FRR and CRR, 100 persons with 18 image samples per person are considered to create database and one image per person is used as test image. To evaluate FAR, remaining 19 persons from the L-Spacek database is considered. The JAFEE face database consists of 10 persons, with 19 images per person. Here first 6 persons with 20th image is considered to evaluate FRR and CRR and the remaining 4 persons images are considered to

evaluate FAR. The ORL face database consists of 40 persons and each person with 9 images. First 30 persons with eight images per person are considered to evaluate FRR and CRR. To evaluate FAR remaining 10 persons are considered. The Yale-B database consists of 57 persons, each person with 30 images. To evaluate FRR and CRR first 30 persons are considered and to evaluate FAR remaining 27 persons are considered. The CMU-PIE face database consists of 68 persons with different modes such as illumination, lighting and talking conditions, each person with 45 images. To evaluate FRR and CRR first 35 persons are considered and to evaluate FAR remaining 33 persons are considered. Mixed (MX) face database is created by combining L-Spacek, ORL, JAFEE, Yale-B and CMU-PIE database images.

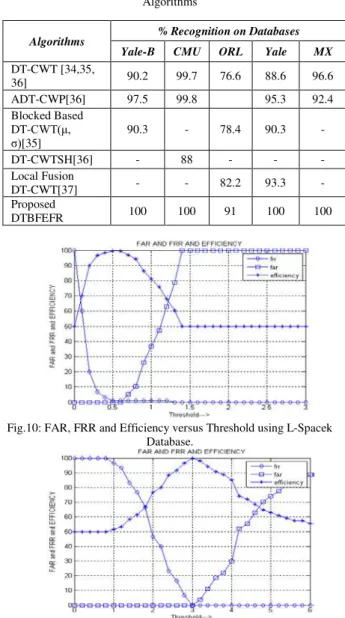

The percentage face recognition rate of the proposed algorithm is compared with other face recognition techniques using transformations such as DT-CWT, DT-CWT on Histogram Equalization and DT-CWT on DCT with different databases given in Table 2. It is seen that the proposed algorithm using DT-CWT on DWT gives 100% recognition compared to other transformation techniques.

Table 2: Comparison of Recognition Rate of the Proposed Algorithm with other Algorithms.

The performance evaluation parameters such as CRR, EER, Efficiency, FAR and FRR for different face database using DTBFEFR with different matching techniques viz., ED, RF and SVM are given in Tables 3, 4 and 5 respectively. For best performance the databases L-Spacek, Yale-B and Mixed images of sizes 64*128, 512 * 512 and 256 * 256 are considered respectively and also the databases JAFEE, ORL and CMU-PIE of image sizes 128 * 128 are considered for the proposed method DTBFR. In the case of RF classifier, the performance parameters CRR and FRR are better compared to ED and SVM. The CRR is 100% with all the three classifiers for databases such as L-Spacek, JAFEE, ORL and CMU-PIE, except Yale-B face database. It is observed that the CRR values are better in the case of RF compared to ED and SVM. The overall performance efficiency of the proposed algorithm is better in the case of ED compared to RF and SVM. The efficiency on different databases with

• Input : Face Database, Test Face Image

• Output : Match/Mismatch Face Image 1.Read Face image from various databases 2.Resize the face images

3.Apply 2-level DWT and consider only LL subband 4.Use 5-level DT-CWT on LL subband to generate features 5.ED, RF and SVM are used as classifiers to verify a person.

Data base

Algorithms

DT-CWT

HE+ DT-CWT

DCT+

DT-WT DTBFEFR L-Spacek 87.2% 96.7% 89.4% 100%

three classifiers is given in the Table 6 and it is noticed that using ED classifier gives better recognition rate compared to RF and SVM.

Table 3: DTBFEFR using Euclidean Distance

| Database | CRR % | EER % | η % | FAR % | FRR % |
| --- | --- | --- | --- | --- | --- |
| L-Spacek | 100 | 0 | 100 | 0 | 0 |
| JAFEE | 100 | 0 | 100 | 0 | 0 |
| ORL | 100 | 9 | 91 | 9 | 9 |
| Yale-B | 83.3 | 0 | 100 | 0 | 0 |
| CMU_Illum | 100 | 0 | 100 | 0 | 0 |
| CMU_Light | 100 | 0 | 100 | 0 | 0 |
| CMU_Talk | 100 | 0 | 100 | 0 | 0 |
| MX | 100 | 0 | 100 | 0 | 0 |

Table 4: DTBFEFR using Random Forest

| Database | CRR % | EER % | η % | FAR % | FRR % |
| --- | --- | --- | --- | --- | --- |
| L-Spacek | 100 | 0.5 | 99.5 | 1 | 0 |
| JAFEE | 100 | 8.5 | 91.5 | 17 | 0 |
| ORL | 100 | 1.7 | 98.4 | 3.3 | 0 |
| Yale-B | 76.7 | 3.3 | 96.7 | 3.3 | 3.3 |
| CMU_Illum | 100 | 2.8 | 97.2 | 5.7 | 0 |
| CMU_Light | 100 | 0 | 100 | 0 | 0 |
| CMU_Talk | 100 | 1.8 | 98.2 | 2.9 | 0 |
| MX | 100 | 9 | 91 | 16 | 2 |

Table 5: DTBFEFR using Support Vector Machine

| Database | CRR % | EER % | η % | FAR % | FRR % |
| --- | --- | --- | --- | --- | --- |
| L-Spacek | 100 | 0 | 99 | 0 | 0 |
| JAFEE | 100 | 0 | 100 | 0 | 0 |
| ORL | 100 | 9 | 100 | 0 | 0 |
| Yale-B | 76.7 | 0 | 93.4 | 3.3 | 10 |
| CMU_Illum | 100 | 0 | 100 | 0 | 0 |
| CMU_Light | 100 | 0 | 100 | 0 | 0 |
| CMU_Talk | 100 | 0 | 100 | 0 | 0 |
| MX | 100 | 0 | 100 | 0 | 0 |

The variations of FAR, FRR and Efficiency with respect to the threshold for the different face databases, viz., L-Spacek, Yale-B, ORL, JAFEE, CMU-PIE_Illumination, CMU-PIE_Lights, CMU-PIE_Talking and Mixed, are given in Figures 10, 11, 12, 13, 14, 15, 16 and 17 respectively. It is noticed that the overall efficiency is lower in the case of the ORL face database, as a few face images are occluded by spectacles and other components.

The percentage recognition of the proposed DTBFEFR algorithm is compared with existing face recognition algorithms, namely Dual Tree Complex Wavelet Transform based Face Recognition [34, 35, 36], Anisotropic Dual-Tree Complex Wavelet Packets (ADT-CWP) [34], Dual Tree Complex Wavelet Transform based Face Recognition with Single View (Block-Based DT-CWT (μ, σ)) [35], Face Detection using DT-CWT on Spectral Histogram (DT-CWTSH) [36] and Local Fusion DT-CWT [37], and the results are given in Table 7.

It is observed that the recognition rate of the proposed algorithm is about 100% for five databases, the exception being the ORL database.

Table 6: The Overall Experimental Efficiency of the DTBFEFR with Multi-matching Classifiers.

| Database | ED | RF | SVM |
| --- | --- | --- | --- |
| L-Spacek | 100% | 99.5% | 99% |
| JAFEE | 100% | 91.5% | 100% |
| ORL | 91% | 98.44% | 100% |
| Yale-B | 100% | 96.67% | 93.4% |
| CMU-PIE | 100% | 98.44% | 100% |
| MX | 100% | 91% | 100% |

Table 7: Comparison of the Proposed Algorithm with Other Algorithms (% Recognition on Databases)

| Algorithm | Yale-B | CMU | ORL | Yale | MX |
| --- | --- | --- | --- | --- | --- |
| DT-CWT [34, 35, 36] | 90.2 | 99.7 | 76.6 | 88.6 | 96.6 |
| ADT-CWP [34] | 97.5 | 99.8 | 95.3 | 92.4 | |
| Block-Based DT-CWT (μ, σ) [35] | 90.3 | - | 78.4 | 90.3 | - |
| DT-CWTSH [36] | - | 88 | - | - | - |
| Local Fusion DT-CWT [37] | - | - | 82.2 | 93.3 | - |
| Proposed | | | | | |

Fig.10: FAR, FRR and Efficiency versus Threshold using L-Spacek Database.

Fig.11: FAR, FRR and Efficiency versus Threshold using Yale-B Database.

Fig.12: FAR, FRR and Efficiency versus Threshold using ORL Database.

Fig.13: FAR, FRR and Efficiency versus Threshold using JAFEE Database.

Fig.14: FAR, FRR and Efficiency versus Threshold using CMU-PIE Illumination Database.

Fig.15: FAR, FRR and Efficiency versus Threshold using CMU-PIE Lights Database

Fig.16: FAR, FRR and Efficiency versus Threshold using CMU-PIE Talking Database.

Fig.17: FAR, FRR and Efficiency versus Threshold using Mixed Database.

7. Conclusion

In this paper, a dual transform based feature extraction method is proposed for face recognition. The L-Spacek, ORL, Yale-B, JAFEE, CMU-PIE and Mixed database images are used to test the proposed algorithm. DWT is applied on facial images to normalize expression and illumination variations; it also greatly reduces the image dimension while retaining the visually significant components of an image. Further reduction in dimension is obtained by using DT-CWT, which produces the feature vectors of the face image. Using these feature vectors, the face image is verified with ED, SVM and RF. It is observed that the performance parameters are improved in the case of the proposed algorithm compared to the existing algorithms. In future, the algorithm may be tested by fusing the features of the individual transformations used, and also by fusion at the matching level.

References

[1]. Marcos Faundez-Zanuy, “Biometric Security Technology,” Encyclopedia of Artificial Intelligence, 2009, pp. 262-264.

[2]. Anil K Jain, Arun Ross and Salil Prabhakar, “An Introduction to Biometric Recognition,” IEEE Transaction on Circuits and Systems for Video Technology, 2004, vol. 14, no.1, pp. 4-20.

[3]. A Abbas, M I Khalil, S Abdel-Hay and H M Fahmy, “Expression and Illumination Invariant Preprocessing Technique for Face Recognition,” Proceedings of the International Conference on Computer Engineering and System, 2008, pp. 59-64.

[4]. Jukka Holappa, Timo Ahonen and Matti Pietikainen, “An Optimized Illumination Normalization Method for Face Recognition,” Proceedings of the IEEE Second International Conference on Biometrics: Theory, Applications and Systems, 2008, pp. 1-6.

International Conference on Computational Science, 2008, pp. 357-361.

[6]. S Shan, W Gao, B. Cao, and D. Zhao, “Illumination Normalization for Robust Face Recognition against Varying Lighting Conditions,” Proceedings of the IEEE Workshop on Analysis and Modeling of Face Gestures, 2003, pp. 157– 164.

[7]. Shan Du and Rabab Ward, “Wavelet based Illumination Normalization for Face Recognition,” Proceedings of the IEEE International Conference on Information Processing, 2005, vol. 2, pp. 954 - 957.

[8]. Shan Du and Rabab Ward, “Component-Wise Pose Normalization for Pose-Invariant Face Recognition,” Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, 2009, pp. 873-876.

[9]. Yali LI, Shengjin WANG and Xiaoqing DING, “Person-Independent Head Pose Estimation based on Random Forest Regression,” Proceedings of IEEE Seventeenth International Conference on Image Processing, 2010, pp. 1521-1524.

[10]. Ramesha K, K B Raja, Venugopal K R and L M Patnaik, “Feature Extraction based Face Recognition, Gender and Age Classification,” International Journal on Computer Science and Engineering, 2010, vol. 02, no.01S, pp. 14-23.

[11]. Ramesha K and K B Raja, “Face Recognition System Using Discrete Wavelet Transform and Fast PCA,” Proceedings of the International Conference on Advances in Information Technology and Mobile Communication, CCIS 157, 2011, pp. 13-18.

[12].Marios Savvides, Jingu Heo, Ramzi Abiantun, Chunyan Xie and Vijay Kumar, “Class Dependent Kernel Discrete Cosine Transform Features for Enhanced Holistic Face Recognition in FRGC-II,” Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, 2006, vol. 5, no. 2, pp. 185-188.

[13].Derzu Omaia, Jankees V D Poel and Leonardo V Batista, “2D-DCT Distance based Face Recognition using a Reduced Number of Coefficients,” Proceedings of Twenty Second Brazilian Symposium on Computer Graphics and Image Processing, 2009, pp. 291-298.

[14]. Alaa Eleyan, Huseyin Ozkaramanli and Hasan Demirel, “Complex Wavelet Transform based Face Recognition,” Eurasip Journal on Advances in Signal Processing, 2008, vol. 2008, pp. 1-13.

[15]. Chao-Chun Liu and Dao-Qing Dai, “Face Recognition using Dual-Tree Complex Wavelet Features,” IEEE Transactions on Image Processing, 2009, vol. 18, issue 11, pp. 2593-2599.

[16].Gunawan Sugiarta Y B, Riyanto Bambang, Hendrawan and Suhardi, “Feature Level Fusion of Speech and Face Image based Person Identification System,” Proceedings of the Second International Conference on Computer Engineering and Applications, 2010, pp. 221-225.

[17].S S Ranawade, “Face Recognition and Verification using Artificial Neural Network,” International Journal of Computer Applications, 2010, vol.1, no. 14, pp. 21-25.

[18]. Albert Montillo and Haibin Ling, “Age Regression from Faces using Random Forests,” Proceedings of the IEEE International Conference on Image Processing, 2009, pp. 2465-2468.

[19]. Gerard Biau, Luc Devroye and Gabor Lugosi, “Consistency of Random Forests and Other Averaging Classifiers,” Journal of Machine Learning Research, 2008, vol. 9, pp. 2015-2033.

[20]. Sidra Batool Kazmi, Qurat-ul-Ain and M Arfan Jaffar, “Wavelets based Facial Expression Recognition using a Bank of Neural Networks,” Fifth International Conference on Future Information Technology, 2010, pp. 1-6.

[21]. Taskeed Jabid, Md. Hasanul Kabir, and Oksam Chae, “Local Directional Pattern for Face Recognition,” International Conference on Consumer Electronics, 2010, pp. 329 – 330.

[22].Xiaoyang Tan and Bill Triggs, “Preprocessing and Feature Sets for Robust Face Recognition,” Proceedings of the IEEE International Conference on Computer Vision and Pattern Analysis, 2007, pp. 1-8.

[23]. Vaishak Belle, Thomas Deselaers and Stefan Schiffer, “Randomized Trees for Real-Time One-Step Face Detection and Recognition,” Proceedings of the Nineteenth International Conference on Pattern Recognition, 2008, pp. 1-4.

[24]. Bo Du, Shiguang Shan, Laiyun Qing and Wen Gao, “Empirical Comparisons of Several Preprocessing Methods for Illumination Insensitive Face Recognition,” IEEE International Conference on Acoustics, Speech, and Signal Processing, 2005, vol. 2, pp. 981-984.

[25].Zhi-Kai Huang, Wei-Zhong Zhang, Hui-Ming Huang and Ling-Ying Hou, “Using Gabor Filters Features for Multi-Pose Face Recognition in Color Images,” Proceedings of the IEEE Second International Conference on Intelligent Information Technology Application, 2008, pp. 399-402.

[27].Priya K J and Rajesh R S “Local Statistical Features of Dual-Tree Complex Wavelet Transform on Parallelogram Image Structure for Face Recognition with Single Sample,” Proceedings of the International Conference on Recent Trends in Information, Telecommunication and Computing, 2010, pp. 50-54.

[28]. K Jaya Priya and R S Rajesh, “Local Fusion of Complex Dual-Tree Wavelet Coefficients based Face Recognition for Single Sample Problem,” Proceedings of the International Conference and Exhibition on Biometrics Technology, 2010, vol. 2, pp. 94-100.

[29].Yue-Hui Sun and Minghui Du, “DT-CWT Feature Combined with ONPP for Face Recognition,” Published by Springer-Verlag Berlin, Heidelberg in the book of Computational Intelligence and Security, 2007, pp. 1058 – 1067.

[30].Mariusz Leszczyński, “Image Preprocessing for Illumination Invariant Face Verification,” Journal of Telecommunication and Information Technology, 2010, vol. 4, pp. 19-25.

[31].Yuehui Chen and Yaou Zhao, “Face Recognition using DCT and Hierarchical RBF Model,” IDEAL 2006, LNCS 4224, 2006, pp. 355-362.

[32].Sathesh and Samuel Manoharan, “A Dual Tree Complex Wavelet Transform Construction and its Application to Image Denoising,” International Journal of Image Processing, vol. 3, issue 6, pp. 293- 300.

[33].Ivan W Selesnick, Richard G Baraniuk and Nick G. Kingsbury, “The Dual-Tree Complex Wavelet Transform,” IEEE Signal Processing Magazine, 2005, pp. 123-151.

[34].Yigang Peng, Xudong Xie, Wenli Xu and Qionghai Dai, “Face Recognition using Anisotropic Dual-Tree Complex Wavelet Packets,” Proceedings of the Nineteenth International Conference on Pattern Recognition, 2008, pp. 1-4.

[35].K Jaya Priya and R S Rajesh, “Dual Tree Complex Wavelet Transform based Face Recognition with Single View,” Proceedings of the International Conference on Computing, Communications and Information Technology Applications, 2010, vol. 5, pp. 455-459.

[36].Yue-Hui Sun and Ming-Hui Du, “Face Detection using DT-CWT on Spectral Histogram,” Proceedings of the IEEE International Conference on Machine Learning and Cybernetics,2006, pp. 3637 – 3642.

[37].K Jaya Priya and R S Rajesh, “Local Fusion of Complex Dual-Tree Wavelet Coefficients based Face Recognition for Single Sample Problem,” International Journal by Elsevier Ltd., 2010,vol. 2, pp. 94-100.

Ramesha K was awarded the B.E degree in E & C from Gulbarga University and the M.Tech degree in Electronics from Visvesvaraya Technological University. He is pursuing his Ph.D. in E & C Engineering at JNTU Hyderabad, under the guidance of Dr. K. B. Raja, Assistant Professor, Department of Electronics and Communication Engineering, University Visvesvaraya College of Engineering. He has over 8 research publications in refereed International Journals and Conference Proceedings. He is currently an Assistant Professor, Dept. of Telecommunication Engineering, Vemana Institute of Technology, Bangalore. His research interests include Image Processing, Computer Vision, Pattern Recognition, Biometrics, and Communication Engineering. He is a life member of the Indian Society for Technical Education, New Delhi.

K B Raja is an Assistant Professor, Dept. of

Electronics and Communication Engineering,