Universidade de Aveiro, Departamento de Electrónica, Telecomunicações e Informática, 2016

José Miguel Costa Silva

Content Distribution in OTT Wireless Networks

Distribuição de Conteúdos em Redes OTT sem Fios

Dissertation presented to the Universidade de Aveiro in fulfilment of the requirements for the degree of Master in Electronics and Telecommunications Engineering, carried out under the scientific supervision of Professor Susana Sargento, Associate Professor with Habilitation at the Departamento de Eletrónica, Telecomunicações e Informática of the Universidade de Aveiro, and the scientific co-supervision of Doctor Lucas Guardalben, Researcher at the Instituto de Telecomunicações de Aveiro.

The jury

President: Professor Atílio Manuel da Silva Gameiro, Associate Professor at the Departamento de Eletrónica, Telecomunicações e Informática of the Universidade de Aveiro

Examiners committee: Professor Manuel Alberto Pereira Ricardo, Associate Professor at the Universidade do Porto (Examiner)

Professor Susana Isabel Barreto de Miranda Sargento, Associate Professor with Habilitation at the Departamento de Eletrónica, Telecomunicações e Informática of the Universidade de Aveiro (Supervisor)

Acknowledgments

First of all, I would like to thank my parents, who gave me the opportunity to study at the university and in the degree I chose, and for all the support they gave me. I would also like to thank my godmother for her support.

Second, but no less important, I would like to thank my girlfriend, Deolinda Moura, who put up with me all these years and helped me get through some difficult moments, always believing in me.

I would also like to thank all my friends, Carina, Raúl, Carolina, Tiago and André, for their help and support.

I also thank Professor Susana Sargento and Doctor Lucas Guardalben for guiding me along this path.

Finally, I thank the NAP research group, in particular Carlos, João Nogueira and Pedro, for all their support, the Instituto de Telecomunicações de Aveiro for the resources provided, and all the professors I had the opportunity to meet over these years and who contributed to the completion of one more stage in my life. My sincere thanks to all!

“The best way to predict the future is to create it.” -Peter Drucker

Abstract

In developed countries, the Internet is increasingly considered an essential and integral part of people’s lives. Being “online” and sharing and accessing content are routine parts of people’s daily lives, making the Internet one of the most complex systems in operation.

Most traditional communications (telephone, radio and television) are being remodelled or redefined by the Internet, giving rise to new services such as Voice over Internet Protocol (VoIP) and Internet Protocol Television (IPTV). Books, newspapers and other types of printed publications are also adapting to web technology or have been redesigned for blogs and feeds. The massification of the Internet and the constant increase in the bandwidth offered to consumers have created excellent conditions for Over-The-Top (OTT) multimedia services. OTT services refer to the delivery of audio, video and other data over the Internet without the control of network operators.

Although OTT delivery is an attractive proposition (and a profitable one, judging by fast-growing services such as YouTube, Skype and Netflix), it suffers from some limitations. High levels of Quality-of-Experience (QoE) must be maintained to keep attracting customers. This requires a content distribution network that can adapt to the speed with which content is requested and quickly discarded, and that can accommodate all the traffic.

This dissertation focuses on the distribution of OTT content in wireless networks, in order to address the lack of research work in this area. It proposes a solution that can be integrated into network equipment so that the equipment can predict what kind of content connected (or nearby) consumers may request and place it in memory before it is requested, improving consumers’ perception of the service. Given the lack of information in the literature on the management and control of proxy caches for embedded systems, the first step was to test and evaluate two different proxy cache solutions: Nginx and Squid. The results show that there is a trade-off between cache performance and request processing speed, with Nginx delivering better cache performance but worse response times. It was also found that a larger cache does not always yield a significant improvement in results; sometimes keeping just the most popular content cached is enough.

Afterwards, given the characteristics of wireless networks, two content prediction (prefetching) algorithms were proposed and tested in mobility scenarios. Very significant performance improvements were observed, showing that an investment in this area may be viable, although it implies an increase in the processing capacity and power consumption of the network equipment.

Contents

List of Figures

List of Tables

Acronyms

1 Introduction

1.1 Motivation

1.2 Objectives and Contributions

1.3 Document Organization

2 State of the art

2.1 Introduction

2.2 Over-The-Top (OTT) Multimedia Networks

2.2.1 OTT Multimedia Services in Telecommunication Operators

2.3 Content Delivery Networks (CDNs)

2.3.1 The CDN Infrastructure

2.3.2 Content Distribution Architectures

2.3.2.1 Centralized Content Delivery

2.3.2.2 Proxy-Caching

2.3.2.3 Peer-to-Peer (P2P)

2.3.3 Content Delivery Network Interconnection (CDNI)

2.3.4 Mobile Content Delivery Networks (mCDNs)

2.3.5 CDNs and Multimedia Streaming

2.4 Multimedia Streaming Technologies

2.4.1 Traditional Streaming

2.4.2 Progressive Download

2.4.3 Adaptive Streaming Technologies

2.4.3.1 Adaptive Segmented HTTP-based delivery

2.5 Prefetching

2.6 Multimedia Caching

2.6.1 Caching Algorithms

2.6.2 Proxy-Caching Solutions

3 Wireless Content Distribution

3.1 Introduction

3.2 Problem Statement

3.3 Proposed Solution

3.3.1 Proxy Caching Strategies for Embedded Systems

3.3.2 Prefetching and Mobile Consumers

3.4 Chapter Considerations

4 Implementation

4.1 Introduction

4.2 Architecture and Implementation Overview

4.2.1 Consumers

4.2.2 Nodes

4.2.2.1 Node Initialization

4.2.2.2 Prefetching

4.2.2.3 Neighbors Manager

4.3 Chapter Considerations

5 Integration and Evaluation

5.1 Introduction

5.2 Hardware and Operating System Description

5.2.1 Consumers and Origin Server

5.2.2 Single Board Computer with Wireless USB Adapter

5.2.3 Operating System - OpenWrt

5.3 Raspberry and Web Servers Configuration

5.3.1 Raspberry Configuration

5.3.2 Squid Configuration

5.3.3 Nginx Configuration

5.4 Evaluation

5.4.1 Performance Metrics

5.4.2 Proxy Cache Metrics

5.4.3 Support Scripting

5.4.3.1 Script CPU Usage and Load

5.4.3.2 Scripts to perform the test scenario

5.4.3.3 Scripts to generate final results

5.4.4 Scenario 1: Caching strategies for embedded systems

5.4.4.1 Approach by Number of Requests

5.4.4.2 Approach by Time

5.4.4.3 Approach by Cache Size

5.4.5 Scenario 2: Prefetching and Mobile consumers

5.5 Chapter Considerations

6 Conclusion and Future Work

6.1 Conclusions

6.2 Future Work

List of Figures

1.1 OTT Video Ecosystem - Example

2.1 Typical CDN infrastructure [1]

2.2 Centralized OTT delivery

2.3 Proxy Cache OTT delivery

2.4 P2P OTT delivery

2.5 CDNI use case 1

2.6 CDNI use case 2

2.7 Typical mCDN infrastructure

2.8 Traditional Streaming [2]

2.9 Progressive Download Architecture [3]

2.10 Example of how Progressive Download works [3]

2.11 Progressive Download features [3]

2.12 Segmented HTTP Adaptive Streaming [4]

2.13 IIS Smooth Streaming Media Workflow [5]

2.14 Client Manifest File - Example

3.1 Typical Wireless CDN infrastructure

3.2 Proposed Architecture Overview

3.3 Scenario used to test different cache approaches

3.4 Scenario used to test prefetching and consumers mobility

4.1 Architecture main blocks

4.2 Mobile Consumer Flow Chart

4.3 Methods Implemented in Mobile Consumers

4.4 One Node: Block Diagram and Interactions

4.5 Initialization Flow Chart

4.6 First Algorithm Flow Chart

4.7 Second Algorithm Flow Chart

4.8 ServerSocket Flow Chart

4.9 Packet fields

4.10 ClientSocket Flow Chart

4.11 Example of how Prefetching is sent through Neighbors Manager

5.1 Raspberry Pi 2 [6]

5.2 TP-LINK TL-WN722N USB Wireless Adapter [7]

5.4 Nginx Configuration Example

5.5 CPU/Load Measure Flow Chart

5.6 MakeAll Scenario 1 Flow Chart

5.7 MakeAll Script Scenario 2 Flow Chart

5.8 Cache Performance (number of requests)

5.9 Request Time vs Qualities (number of requests)

5.10 Request Time vs Technologies (number of requests)

5.11 CPU Load and Usage (number of requests)

5.12 Cache Performance (time)

5.13 Request Time vs Qualities (time)

5.14 Request Time vs Technologies (time)

5.15 CPU Load and Usage (time)

5.16 Cache Performance (cache)

5.17 Request Time vs Qualities (cache)

5.18 Request Time vs Technologies (cache)

5.19 CPU Load and Usage (cache)

5.20 Cache Performance (prefetching)

5.21 Request Time vs Qualities (prefetching)

5.22 Request Time vs Technologies (prefetching)

List of Tables

2.1 FIFO cache Replacement Policy

2.2 LRU cache Replacement Policy

2.3 MPU cache Replacement Policy

2.4 Comparison of Five Proxy Cache Solutions - Main features

3.1 Available Qualities and Average Size per Chunk

4.1 Videos and their Probabilities to be Requested

Acronyms

AP Access Point

ABS Adaptive Bitrate Streaming

CAPEX Capital Expenditures

CARP Cache Array Routing Protocol

CDN Content Delivery Network

CDNI Content Delivery Network Interconnection

CDNi-WG Content Delivery Networks interconnection - Work Group

CPU Central Processing Unit

CSV Comma-Separated Values

DASH Dynamic Adaptive Streaming over HTTP

DHCP Dynamic Host Configuration Protocol

EST-VoD Electronic Sell Through VoD

FIFO First-In-First-Out

FLV Flash Video

GDB GNU Debugger

GPL GNU General Public License

HDD Hard Disk Drive

HDS HTTP Dynamic Streaming

HLS HTTP Live Streaming

HTCP Hypertext Caching Protocol

HTTP Hypertext Transfer Protocol

HTTPS Hypertext Transfer Protocol Secure

IDE Integrated Development Environment

IIS Internet Information Services

IP Internet Protocol

IPTV Internet Protocol Television

ISP Internet Service Provider

IT Information Technology

LFU Least Frequently Used

LRU Least Recently Used

MB Megabyte

Mbps Megabits Per Second

mCDN Mobile Content Delivery Network

MPEG Moving Picture Experts Group

MPU Most Popularly Used

NAT Network Address Translation

OPEX Operational Expenditures

OS Operating System

OTT Over-The-Top

P2P Peer-to-Peer

PC Personal Computer

PEVQ Perceptual Evaluation of Video Quality

POSIX Portable Operating System Interface

PVR Personal Video Recorder

QoE Quality-of-Experience

QoS Quality-of-Service

QT QuickTime

RAN Radio Access Network

RDT Real Data Transport

RTCP Real-Time Transport Control Protocol

RTMP Real-Time Messaging Protocol

RTP Real-Time Transport Protocol

RTSP Real-Time Streaming Protocol

SBC Single Board Computer

SD Secure Digital

SSH Secure Shell

S-VoD Subscription VoD

TV Television

T-VoD Transaction VoD

TCP Transmission Control Protocol

UDP User Datagram Protocol

USB Universal Serial Bus

VoD Video-on-Demand

VoIP Voice over Internet Protocol

VCR Video Cassette Recorder

Wi-Fi Wireless Fidelity

WMV Windows Media Video

Chapter 1

Introduction

1.1 Motivation

In recent years, with increasing Internet access speeds and the proliferation of mobile devices, consumer habits have been changing. There is a clear trend towards non-linear Television (TV) video watching over broadcast TV services. Regarding Video-on-Demand (VoD) consumption, most traditional movie rental stores have either closed or shifted their focus to online video delivery (e.g. Netflix).

People tend to prefer services that offer multi-screen support and that they can access anytime, anywhere. As a consequence, the number of OTT-based services, characterized by being transmitted through an operator's network without the operator controlling their distribution, has been on the rise. Some examples of heavily used OTT services are shown in Figure 1.1.

Figure 1.1: OTT Video Ecosystem - Example.

This growth has raised several issues in terms of scalability, reliability and QoE. The network needs to adapt to the growing number of clients that use it every day and request content. The most requested content must be stored near the consumers in order to avoid overloading the entire network. Although today's infrastructure is being developed in this direction, the main problem remains the consumer’s QoE.

The consumer’s QoE of a service is directly related to the opinion that the user forms right after using it. A service that presents a large delay or repeated quality drops while playing a video stream is therefore rated with low QoE. Although, in theory, the network is able to accommodate all consumers, in practice this implies a reduction in QoE in proportion to the total number of clients to serve. According to a 2013 report by Conviva [8], 39.3% of video views experienced buffering, 4% of the views failed to start, and 63% of the views experienced low resolution [9].

There is a need to improve content delivery time in order to improve users' QoE. One way to do this is to predict in advance what kind of content users may request, based on their recent interactions with the service, and store it in memory so that it can be delivered quickly when requested. This process is called prefetching.

One of the most critical points in the whole delivery chain is the wireless layer where, on the one hand, there is stronger interference (which leads to higher packet loss and degrades the video experience perceived by the consumer) and, on the other hand, resources are very limited in terms of both processing and memory. However, this is the layer closest to the user, and thus the one where the best content delivery times can be obtained if the content is cached.

Another challenge in wireless networks is consumer mobility. There is no point in predicting and caching content if it is never requested because the consumer has already moved. The content must be sent to neighboring network nodes (e.g. Access Points (APs)) before the consumers arrive there, so that delivery remains seamless as users move. These APs must work together, thus forming a wireless content distribution network.

Predicting content is not easy; it is a challenging problem, and one that certainly motivates Information Technology (IT) professionals.

1.2 Objectives and Contributions

The objective of this dissertation is to build a decentralized and scalable wireless content distribution network, which addresses the high consumption of content, the optimized placement of functionalities in mobile networks and the dynamic dissemination of content across the web. The main objectives are as follows:

• Evaluate different proxy cache solutions: for the management and control of caches scattered along the wireless network in the available APs.

• Propose, implement and test different prefetching algorithms: in order to predict the content that will be requested by the consumers, and where it will be requested, so that it can be cached before it is requested.

• Improve consumers' QoE: in order to provide the best possible experience at a given point in time (which will happen if the requested content is cached), multimedia services need to run in real or near-real time, without freezes and with little buffering.

• Build a demonstrator in the laboratory: which allows testing different solutions for the management and control of caches scattered along the network, in mobility scenarios and with the prefetching mechanisms.

The work on the prefetching algorithms for the wireless content distribution network will be published in a scientific paper.

1.3 Document Organization

This document is organized as follows:

• Chapter 1 contains the Introduction of the work.

• Chapter 2 presents the state of the art about Over-The-Top (OTT) Multimedia Networks, Content Delivery Networks (CDNs), Multimedia Streaming Technologies, Prefetching and Multimedia caching.

• Chapter 3 presents the problem behind OTT multimedia networks and the proposed solution to improve it, explaining the scenarios used.

• Chapter 4 presents the architecture and the implementation of the proposed solution.

• Chapter 5 presents the integration and the evaluation of the implemented solution.

• Chapter 6 presents the conclusion and the future work.

Chapter 2

State of the art

2.1 Introduction

To help the reader understand this document, this chapter presents the fundamental concepts that support the developed work, along with an analysis of related work in this area of study. The chapter is structured as follows:

• Section 2.2 introduces the concept of Over-The-Top (OTT) Multimedia Networks and other widely used concepts, such as Linear TV, Time-shift TV and Video-on-Demand (VoD).

• Section 2.3 introduces the concept of Content Delivery Networks (CDNs), explaining their main structure, functionalities and main architectures. Since consumers currently require access to content everywhere, new concepts such as Content Delivery Network Interconnection (CDNI) and Mobile Content Delivery Networks (mCDNs) are introduced. Finally, the issues of using CDNs to serve multimedia content are addressed.

• Section 2.4 presents the evolution of the types of streaming, starting with traditional streaming, moving to the well-established progressive download and then to adaptive and scalable streaming, giving special importance to adaptive segmented HTTP-based delivery, since it will be used in the implementation of this MSc thesis.

• Section 2.5 explains the concept of prefetching, focusing on web prefetching algorithms such as prefetch by Popularity, Lifetime and Good Fetch. These algorithms only take the characteristics of the web objects into account as metrics. Thus, an evolutionary algorithm that takes into account consumer behavior and the content itself is also presented.

• Section 2.6 provides an overview of popular caching algorithms, namely First-In-First-Out (FIFO), Least Recently Used (LRU) and Least Frequently Used (LFU). It also presents an approach developed in our research group, Most Popularly Used (MPU). This section ends with a survey of proxy-caching solutions for embedded systems.

• Section 2.7 presents the chapter summary and considerations.

2.2 Over-The-Top (OTT) Multimedia Networks

Nowadays, most people have access to the Internet, at increasingly higher speeds. As a consequence, OTT multimedia networks have grown in recent years. In OTT multimedia networks, content is delivered without the involvement of a multiple-system operator controlling its distribution. Since a third party uses the Internet Service Provider (ISP) network for free to offer its service, this type of delivery is called Over-The-Top and is considered unmanaged delivery.

The networks used to deliver Internet Protocol (IP) traffic can be classified into two main classes, managed/closed or unmanaged/open [10], depending on whether or not the operator controls the traffic.

In a managed network, the ISP guarantees a very high Quality-of-Service (QoS) to subscribers (people who pay for it). This type of network is used for IPTV services such as the one offered by AT&T [11]. IPTV refers to the delivery of digital television and other audio and video services over broadband data networks using the same basic protocols that support the Internet.

On the other hand, in an unmanaged network, the ISP does not guarantee QoS. All content is treated equally (no resource reservation is made). Because of that, consuming video services over the best-effort Internet raises multiple issues, notably regarding QoE [12].

QoE stems from the users' expectations. In order to provide the best possible experience at a given point in time, multimedia services need to run in real or near-real time, which is why video playback must not freeze and buffering must be kept low. In live events, low end-to-end delay is also required.

In order to minimize/overcome these issues, the multimedia delivery infrastructure needs to be well understood, and is thus the focus of the following sections.

2.2.1 OTT Multimedia Services in Telecommunication Operators

To compete strongly with “pure-OTT” business models (YouTube, Netflix, ...), telecommunication operators are also moving towards OTT-based distribution systems, since television viewing has become more asynchronous [10].

The linear channel schedule will be less important than the programs themselves. Because of that, telecommunication operators want to give users the choice of accessing the content and services they want, and pay for, in a wide range of client devices, instead of being constrained to a location (home) or a device (TV). Live television will still attract audience, particularly for news, sports, events and national occasions.

As a consequence of this shift to OTT services, it is important to understand what CDNs and streaming protocols are, and how to improve QoE. The following sections discuss these topics.

Telecommunication operators, to deliver their TV contents, may use:

• Linear TV, i.e. “regular TV broadcast” following a predetermined program lineup, which was considered for decades the traditional and most popular way of watching TV programs. This is still the dominant way of watching TV on national free-to-air TV services and major Pay-TV operators [13].

• Time-shift TV relates to the viewing of deferred TV content, i.e. linear-TV content that is recorded to be watched later, using one of the following services:

1. Pause TV, allowing users to pause the television program they are currently watching, from a few seconds up to several hours. Users can resume the TV broadcast when they want, continuing where they left off, skip a particular segment or eventually catch up to the linear broadcast [13].

2. Start-over TV gives users the opportunity to restart, from the beginning, programs that have already started or finished. The amount of time that can be rewound varies from operator to operator, ranging from a few minutes up to 24 h. The number of TV channels supporting this feature is also decided by the operator [13].

3. Personal Video Recorder (PVR), where the recordings depend on the user action, i.e., they only occur if the user proactively schedules a TV program or a series to be recorded, or if he decides to start recording a program that is being watched. The behavior of the service is similar to the one of a Video Cassette Recorder (VCR); however, with a larger storage capacity and nonlinear access. The user can start watching a recording whenever he wants, even if the program is still being recorded [13].

4. Catch-up TV is the most advanced time-shift service, relying on an automated process of “Live to VoD”. With this service, TV operators offer recorded content of the previous hours up to 30 days, and the number of recorded TV channels varies from operator to operator. Using this type of service, users can catch up on TV programs that have been missed or that they explicitly decide to watch [13].

• Video-on-Demand (VoD) refers to services where users need to pay to watch specific content in one of the following ways:

1. Transaction VoD (T-VoD) is the most typical version of the service, where customers pay a given amount of money whenever they want to watch content from the VoD catalog. The rental time varies from operator to operator, but is usually 24 or 48 h, during which customers can watch the content several times [13].

2. Electronic Sell Through VoD (EST-VoD) is a version of the VoD service involving the payment of a one-time fee to access the purchased content without restrictions, usually on a specific operator platform. This form of VoD is similar to that offered by OTT providers like Apple iTunes and Amazon Instant Video; it is also offered by traditional Pay-TV operators like Verizon’s FiOS TV [13].

3. Subscription VoD (S-VoD) corresponds to the business model also adopted by OTT providers like Netflix, where customers pay a monthly fee that allows them to watch whatever they want from the provider's catalog an unlimited number of times [13].

2.3 Content Delivery Networks (CDNs)

The impressive dissemination of the Internet worldwide has generated a significant shift in its usage with respect to what it was originally conceived for. Initially, the Internet was mainly designed as a robust, fault-tolerant network to connect hosts for military and scientific applications. Nowadays, one of its main usages is content generation, sharing and access by millions of simultaneous users [14], making it one of the most complex systems in operation, both in the number of protocols supported and in sheer scale. As a consequence, CDNs arose to provide the end-to-end scalability necessary to support this growth.

CDNs emerged in 1998 [15] and became a fundamental piece of modern delivery infrastructure. They were developed to provide numerous benefits, such as a shared platform for multi-service content delivery, reduced transmission costs for cacheable content, improved QoE for end users and increased robustness of delivery, i.e. maximizing bandwidth. For these reasons they are frequently used for large-scale content delivery. In this context, the content refers to the data being managed (video, audio, documents, ...) and its associated metadata.

Some examples of CDN application technologies are found on Media Providers (YouTube, Netflix), Social Networks (Facebook, Twitter), Network Operators (Telefonica, Vodafone) and Services Providers (Amazon CloudFront, Akamai).

2.3.1 The CDN Infrastructure

CDNs are complex, with many distributed components collaborating to deliver content across different network nodes. Usually, they can be subdivided into three main functional blocks: delivery and management, request routing and performance measurement. Figure 2.1 shows the typical CDN infrastructure.

Figure 2.1: Typical CDN infrastructure [1].

Content Delivery and Management System

As can be seen, CDNs are composed of multiple servers (CDN nodes), sometimes called replica or surrogate servers, that acquire data from the origin servers (using a private network), which contain all the data. These replica servers store copies of the origin servers’ content so that they can serve content themselves, reducing the load on the origins.

To deliver content to end users with QoS guarantees, CDN administrators must ensure that replica servers are strategically placed across the Web. Generally, the problem is to place M replica servers among N different sites (N > M) in a way that yields the lowest cost (widely known as the minimum K-median problem [16]). With an optimal number of replica servers, ISPs benefit from reduced bandwidth consumption and Web servers benefit from reduced latency for their clients.
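As an illustration of the placement problem above, the following Python sketch applies a simple greedy heuristic: it repeatedly adds the site that most reduces the demand-weighted distance from every site to its nearest replica. The heuristic, the function names (`total_cost`, `greedy_placement`), the distance matrix and the demand values are all assumptions made for this example; they are not taken from [16], and a greedy choice is not guaranteed to be optimal.

```python
from typing import List, Set


def total_cost(replicas: Set[int], dist: List[List[float]],
               demand: List[float]) -> float:
    """Demand-weighted distance from every site to its nearest replica."""
    return sum(d * min(dist[site][r] for r in replicas)
               for site, d in enumerate(demand))


def greedy_placement(dist: List[List[float]], demand: List[float],
                     m: int) -> Set[int]:
    """Pick m of the N sites, one at a time, always adding the site that
    gives the lowest total cost so far (a heuristic, not an exact solver)."""
    n = len(dist)
    chosen: Set[int] = set()
    for _ in range(m):
        best = min((s for s in range(n) if s not in chosen),
                   key=lambda s: total_cost(chosen | {s}, dist, demand))
        chosen.add(best)
    return chosen


# Hypothetical example: four sites on a line, equal demand at each site.
positions = [0, 1, 10, 11]
dist = [[abs(a - b) for b in positions] for a in positions]
demand = [1.0, 1.0, 1.0, 1.0]

placement = greedy_placement(dist, demand, m=2)
print(sorted(placement))                    # [1, 2]
print(total_cost(placement, dist, demand))  # 2.0
```

With M = 2 the heuristic puts one replica in each cluster of sites, so every site is at most one distance unit away from a replica.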

After properly placing the replica servers in a CDN, another issue arises: what content should be replicated in the replica servers? This is commonly known as content outsourcing. Traditionally, three main categories of content outsourcing have been established [17]:

• Cooperative push-based : content replication based on prefetching with cooperation from replica servers. First, the content is prefetched (loaded in cache before it is requested) to replica servers, and then the replica servers cooperate in order to reduce the replication and update cost. In this scheme, the CDN maintains a mapping between content and replica server, and each request is directed to the closest replica server (that has the requested object), or otherwise, the request is directed to the origin server. This approach is traditionally not used on commercial networks given that proper content placement algorithms require knowledge about the Web clients and their demands, which is data that is not commonly available for CDN providers.

• Uncooperative pull-based : content replication similar to traditional caches, without prefetching and cooperation. In this approach, clients’ requests are directed to their closest replica server. If the content is not in cache (cache miss), then the request is directed to another replica server or to the origin server. In other words, the replica servers, which serve as caches, pull content from the origin server when a cache miss occurs. The problem with this approach is that CDNs do not always choose the optimal server from which to serve the content. However, its simplicity is the key to successful deployments on popular CDNs such as Akamai or Mirror Image.

• Cooperative pull-based : an evolution of the uncooperative pull-based scheme where the replica servers cooperate with each other in the event of a cache miss. In this approach, the content is also not prefetched. Client requests are directed to their closest replica server but, in case of cache miss, the replica servers cooperate in order to find neighboring servers that can accommodate the request and avoid requests to the origin server. This approach typically draws concepts and algorithms from Peer-to-Peer (P2P) technologies.

The issue of what content to place on the CDN node is not a trivial one. The two main limitations are: dynamic and user-specific content, and the limited cache resources of edge/replica servers, which must be properly managed in order to obtain the best results (a higher cache hit ratio).
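The difference between the uncooperative and cooperative pull-based schemes can be sketched as follows. This is a simplified model under stated assumptions: the class `ReplicaServer`, the in-memory dictionaries standing in for caches and for the origin server, and the `cooperative` flag are all hypothetical, and real CDNs add expiry, admission and replacement policies on top.

```python
class ReplicaServer:
    """Toy pull-based replica server; the origin is modelled as a plain dict."""

    def __init__(self, name, origin, neighbors=None):
        self.name = name
        self.origin = origin                      # object id -> content
        self.neighbors = neighbors if neighbors is not None else []
        self.cache = {}                           # local cache, unbounded here

    def get(self, key, cooperative=True):
        """Return (content, source), source being 'local', 'neighbor' or 'origin'."""
        if key in self.cache:                     # cache hit
            return self.cache[key], "local"
        if cooperative:                           # cooperative pull: ask neighbors first
            for peer in self.neighbors:
                if key in peer.cache:
                    self.cache[key] = peer.cache[key]
                    return self.cache[key], "neighbor"
        content = self.origin[key]                # cache miss everywhere: pull from origin
        self.cache[key] = content
        return content, "origin"

origin = {"video1": b"segment-bytes"}
a = ReplicaServer("a", origin)
b = ReplicaServer("b", origin, neighbors=[a])
c = ReplicaServer("c", origin, neighbors=[a])

first = a.get("video1")[1]                            # a pulls from the origin
second = b.get("video1")[1]                           # b finds the object at neighbor a
third = b.get("video1")[1]                            # now cached locally at b
uncooperative = c.get("video1", cooperative=False)[1] # c ignores neighbors
```

In the cooperative case the second request never reaches the origin, which is exactly the load reduction the scheme aims for.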

Depending on how the CDN is devised, multiple protocols may be used in the interaction between the different replica servers, such as Cache Array Routing Protocol (CARP) [18] or Hypertext Caching Protocol (HTCP) [19]. However, the CDN administrator usually implements its own communication or interaction protocols. Sharing cache contents among Web proxies significantly reduces traffic to the Internet [20].

Request Routing System

A request routing system is responsible for forwarding end users’ requests to the best replica server able to serve them. This choice is determined through a set of algorithms specifically designed for the purpose. This means that, in the context of request routing, the best server is not necessarily the closest one in terms of physical distance [21]. The selection process should consider aspects such as network proximity (in terms of hops), client-perceived latency and server load. The request routing system also interacts with the content delivery and management system to keep the content stored in CDN nodes up to date [22].
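As an illustration of why the best server is not necessarily the closest one, the sketch below scores each replica with a weighted sum of hop count, measured latency and load, and discards overloaded replicas. The weights, the overload limit and the `Replica` fields are assumptions chosen for the example, not values taken from any real request routing system.

```python
from dataclasses import dataclass

@dataclass
class Replica:
    name: str
    hops: int          # network proximity, in hops
    latency_ms: float  # client-perceived latency
    load: float        # fraction of capacity in use, 0.0 to 1.0

def route_request(replicas, w_hops=1.0, w_latency=0.1, w_load=10.0, max_load=0.9):
    """Return the replica with the lowest weighted score, or None if all are overloaded."""
    candidates = [r for r in replicas if r.load < max_load]
    if not candidates:
        return None  # the caller would fall back to the origin server
    return min(
        candidates,
        key=lambda r: w_hops * r.hops + w_latency * r.latency_ms + w_load * r.load,
    )

replicas = [
    Replica("near-busy", hops=2, latency_ms=10, load=0.95),  # excluded: overloaded
    Replica("near", hops=3, latency_ms=20, load=0.8),        # score 3 + 2 + 8 = 13
    Replica("far-idle", hops=6, latency_ms=40, load=0.1),    # score 6 + 4 + 1 = 11
]
chosen = route_request(replicas)
```

Here the farthest replica wins because its low load outweighs the extra hops and latency under the chosen weights.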

Performance Measurement

Performance measurement is essential to the operation of a CDN. It means understanding the impact on the user side (QoE), the traffic billing that results from inefficient use of bandwidth, and the required number of servers, all of which affect Capital Expenditures (CAPEX) and Operational Expenditures (OPEX).

In order to get the best performance, CDNs are also designed to deliver specific types of content. As a consequence, Akamai HD [23] is more optimized for streaming than CloudFlare [24], for example, which is more optimized for serving dynamic applications. In short, a single optimal and universal solution for CDNs does not exist.

2.3.2 Content Distribution Architectures

As previously said, CDNs aim to provide a scalable OTT delivery network, in order to reduce transmission costs for cacheable content while increasing robustness and QoE for the consumers.

With this in mind, it becomes necessary to decentralize OTT delivery. Thus, this subsection presents some details about content distribution architectures. First, the centralized approach is presented, followed by two of the most widely used approaches to improve scalability and reliability: Proxy-Caching and P2P.

2.3.2.1 Centralized Content Delivery

The centralized approach to OTT delivery is the simplest one. Clients are directly connected to the origin servers without any intermediary, as depicted in figure 2.2.

Figure 2.2: Centralized OTT delivery.

When the client wants a specific content, a unicast stream is created directly between the origin server and the consumer device. The advantage of this approach is that it offers the lowest delivery delay when streaming live content. However, it also has several disadvantages. Firstly, it may not be scalable: each new consumer requires additional bandwidth, which is extremely expensive. Secondly, it does not scale properly with geographically distributed consumers: the farther away the consumers are from the origin server, the higher the access delay to the content, which is more problematic if the streaming session uses Transmission Control Protocol (TCP). Another problem is security: direct access to the origin servers can be problematic.

2.3.2.2 Proxy-Caching

The proxy-caching approach is an alternative to the centralized solutions and has the main objective of decentralizing OTT delivery, introducing security and scalability. This architecture is illustrated in figure 2.3.

Figure 2.3: Proxy Cache OTT delivery.

As can be seen, consumers only communicate directly with the proxy cache (intermediate) that acquires the content from the origin server and caches it. The number of segments cached for each object is dynamically determined by the cache admission and replacement policies.

The use of proxy caches brings several advantages when compared with the centralized approach. The first and most obvious is scalability. This approach can handle more users at the same time because, in case of a cache hit, the proxy cache delivers the content directly to the consumer, avoiding a request to the origin that would add to its load. In this case, it also improves user QoE since the proxy caches are closer to the user.

Security is also improved, since the consumers cannot interact directly with the origin server. In terms of bandwidth, costs are reduced too, through savings in core and transit network traffic.

This approach has some disadvantages, such as increased management and deployment complexity and increased end-to-end delay in case of cache miss. However, the benefits of this approach outweigh its disadvantages.

2.3.2.3 Peer-to-Peer (P2P)

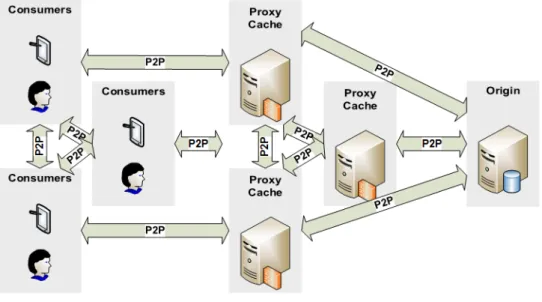

The P2P approach is another possibility to decentralize OTT delivery and also increase security and scalability. This architecture is illustrated in figure 2.4.

Figure 2.4: P2P OTT delivery.

In this approach, consumers and proxy caches provide resources as well as use them. Each node (peer) may communicate with others to locate and exchange missing parts (called chunks) of a certain content. The biggest advantage of using P2P is the origin bandwidth savings, since it uses the uplink capacity of users’ and proxy caches’ connections.

Although P2P uses the available upstream bandwidth better than the proxy-caching approach, P2P streaming has several problems which prevent it from being widely used in OTT environments, such as the startup delay of a new streaming session (locating and acquiring data from peers takes longer than streaming directly from an origin or proxy cache), and additional delays with playback lag in live streaming (but this can be reduced using agiler [25]).

2.3.3 Content Delivery Network Interconnection (CDNI)

CDNs provide numerous benefits in large-scale content delivery (as mentioned in previous subsections). As a result, it is desirable that a given item of content can be delivered to the consumer regardless of consumer’s location or attachment network. This creates a need for interconnecting standalone CDNs, so they can interoperate and collectively behave as a single delivery infrastructure [26, 27].

Typically, ISPs operate over multiple areas and use independent CDNs. If these individual CDNs were interconnected, the capacity of their services could be expanded without the CDNs themselves being extended. An example of CDNI between two different CDN providers located in two different countries is illustrated in figure 2.5.

Figure 2.5: CDNI use case 1.

As can be seen, CDNI enables CDN A to deliver content held within country A to consumers in country B (and vice versa) by forming a business alliance with CDN B. Both can benefit from the possibility of expanding their service without extra investment in their own networks. One of the advantages of CDNI for content service providers is that it enables them to increase their number of clients without forming business alliances with multiple CDN providers [28].

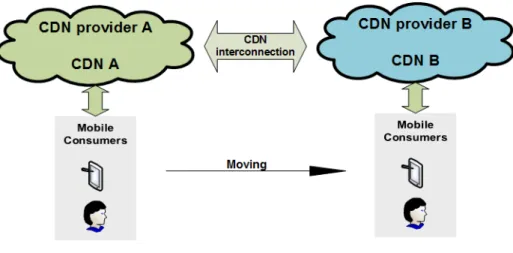

Another use case of CDNI is to support mobility [29] (figure 2.6). Telecommunication operators want to give users the choice of accessing the content and services they want, and pay for, in a wide range of client devices, instead of being constrained to a location (home) or device (TV). As a consequence, with CDNI, users can access the same content seen at home when outside the home on their smartphones or tablets.

Figure 2.6: CDNI use case 2.

As described above, CDNI provides benefits to CDN service providers as well as to consumers, since providers can expand their service and the content is delivered to the end users from a nearby replica server, improving QoE. However, since legacy CDNs were implemented using proprietary technology, there has not been any support for an open interface for connecting with other CDNs. Even though several CDNs could be interconnected, it is still difficult to know the consumption of each user for charging purposes. Fortunately, the Content Delivery Networks Interconnection Working Group (CDNi-WG) is working to allow CDN collaboration under different administrations [30]. The documents can be accessed online at [31].

2.3.4 Mobile Content Delivery Networks (mCDNs)

In the last few years, with the advances of broadband wireless technology that provides high-speed access over a wide area, the number of mobile devices, such as smartphones and tablets, has grown rapidly and is expected to increase significantly in the coming years. In fact, the increasing number of wireless devices that are accessing mobile networks worldwide is one of the primary contributors to global mobile traffic growth [32].

As a consequence, and because the constraints and impairments associated with wireless video delivery are significantly more severe than those associated with wire line, wireless delivery must be considered a potential performance bottleneck in next generation delivery of high-bandwidth OTT multimedia content, which is the main focus of this MSc thesis. Three of the primary issues that must be considered for wireless video distribution are:

1. Mobile Device CPU, screen and battery limitations: mobile devices are typically multi-use communication devices that have different processors, display sizes, resolutions and batteries. Usually, mobile devices with smaller form factors utilize processors with lower capability and screen size than tablets for example, but the battery lasts longer. For these reasons, it is necessary that the video assets be transcoded into formats and “gear set” that ensure both good quality and efficient use of resources for transmission and decoding. The battery consumption for video delivery to client device must be minimized.

2. Wireless channel throughput impairments and constraints: the uncontrolled growth of Wi-Fi networks (probably the most popular technology to support personal wireless networks), particularly in densely populated areas where Wi-Fi networks must coexist, leads to interference problems. The impact of adjacent channel interference depends on the transmission power of the interferers, their spectrum transmit masks, the reception filters of the stations receiving the interfering signal, and the statistical properties of the interferers’ utilization of the spectrum. This problem can dramatically affect the performance of wireless networks, particularly in live video delivery. Another aspect is that, given the lower available bandwidth and typically smaller displays of mobile devices, the “gear sets” used for wireless are very different from those used for wire line. An example is a device with a 640x480 display that can work with video bitrates anywhere between 0.16 and 1.0 Megabits Per Second (Mbps), as opposed to the 2.5 Mbps required by a 1280x720 display.

3. Diverse wireless Radio Access Network (RAN): wireless networks can vary between commercial GSM, 3G and LTE up to personal networks such as Wi-Fi and Bluetooth. Video delivery solutions should be RAN agnostic. Should the client side control the requested video bit rate, differences in RAN performance can be automatically accommodated as long as there are enough “gears” provided to span the required range [33].

In order to improve performance in wireless video delivery (with impact on the global optimization of OTT multimedia delivery), the concept of mobile Content Delivery Networks (mCDNs) arises, as illustrated in figure 2.7.

Figure 2.7: Typical mCDN infrastructure.

As can be seen, mCDNs are used to optimize content delivery in mobile networks (which have higher latency, higher packet loss and huge variation in download capacity) by adding storage to an AP, such as a wireless router, so that it can serve as a dispersed cache/replica server, providing the content (if it is cached) with low latency and high user experience, preventing network congestion. Although this approach presents improvements, the cache size is reduced compared to traditional proxy caches used in the core network. Thus, the ideal would be to cache only content that would be requested by consumers in the near future.

Consequently, in later sections, this document will address prefetching and multimedia caching algorithms to better understand how the content in replica servers can be replaced.

2.3.5 CDNs and Multimedia Streaming

As mentioned before, CDNs have been developed to serve the content to Internet users, improving performance and providing scalable services. Although using CDNs brings many benefits, serving multimedia content presents some issues.

To better understand this problem, take as an example the streaming of high-quality multimedia content. As can be expected, high quality implies a larger size (in MegaBytes (MBs)) than normal multimedia streaming. Keeping this content stored requires a significant amount of free space on the servers’ Hard disk drive (HDD). As a direct consequence, this reduces the number of items that a given server may hold and potentially limits the cache hit ratios. Another problem is the significant impact on the traffic volume of the network in the event of a cache miss, due to the content size; it may reduce the user QoE, since it will take more time to populate the replica server. Additionally, if the user does not require all the content or skips portions of a video, many resources are wasted.

Live streaming services represent another challenge to CDNs. If the impact on traffic volume is significant, the delay (origin to consumer) increases and the service may no longer be considered a “live” streaming service.

In order to tackle these problems, several advanced multimedia stream technologies have been developed, and are presented in the next section.

2.4 Multimedia Streaming Technologies

Streaming is the process of transferring data through a channel to its destination, where data is decoded and consumed via client/device in real time. The difference between streaming and non-streaming scenarios (also known as file downloading) is that, in the first case, the client media player can begin to play the data (such as a movie) before the entire file has been transmitted, as opposed to non-streaming, where all data must be transferred before being played back. Streaming is never a property of the data that is being delivered, but is an attribute of the distribution channel. In other words, this means that, theoretically, most media can be streamed [34].

With the recent advances in high-speed networks, and since full file transfer in the download mode usually suffers long and perhaps unacceptable transfer times, streaming media usage has increased significantly [35]. As a consequence, IPTV platforms and OTT services, such as Netflix, have grown in popularity over the last decade.

Typically, video stream services use different types of media streaming protocols. Although they differ in implementation details, they can be classified into two main categories: Push-based and Pull-based protocols.

In Push-based streaming protocols, after the establishment of the client-server connection, the server streams packets to the client until the client stops or interrupts the session. Therefore, in Push-based streaming, the server maintains a session state with the client, listening for session-state changes [36]. The most common session control protocol used in Push-based streaming is Real-Time Streaming Protocol (RTSP), specified in RFC 2326 [37].

On the other hand, in Pull-based streaming protocols, the media client is the active entity that requests content from the media server. Consequently, the server remains idle or blocked, waiting for client requests. Hypertext Transfer Protocol (HTTP) is a common protocol for Pull-based media delivery [36].

The type of content being transferred and the underlying network conditions usually determine the methods used for communication. To give an example, in “live” streaming the priority is on low latency, low jitter and efficient transmission, and occasional losses might be tolerated; in on-demand streaming, on the other hand, where the content does not exhibit any particular time constraints or time relevance, quality is the most important aspect.

In the following subsections the most important streaming protocols are presented, starting with Traditional Streaming (Push-based), which was the first to be implemented, proceeding with Progressive download, which is one of the most widely used Pull-based media streaming methods, and ending with an analysis of adaptive streaming protocols (also Pull-based), namely segmented HTTP-based delivery, given its growing popularity and potential.

2.4.1 Traditional Streaming

RTSP (Real-Time Streaming Protocol) is a good example of a traditional streaming protocol. It may use the Real-Time Transport Protocol (RTP) and the RTP Control Protocol (RTCP), although the operation of RTSP does not depend on the transport mechanism used to deliver continuous media [37].

RTSP can be defined as a stateful protocol, which means that after the establishment of a client-server connection, the server keeps track of the client’s session state. The client can communicate its state to the server by using commands such as Play (to start the streaming), Pause (to pause the streaming) or Teardown (to disconnect from the server and close the streaming session) [38, 39]. Traditional streaming using the RTP streaming protocol is illustrated in figure 2.8.

Figure 2.8: Traditional Streaming [2].

After a session between the client and server has been established, the server starts sending the media, using the RTP data channel either over User Datagram Protocol (UDP) or TCP, as a stable stream of small packets (the default RTSP packet size is 1452 bytes, which means that, in a video encoded at 1 megabit per second, each packet contains about 11 milliseconds of video). To maintain a stable session, RTSP data may be interleaved with RTP and RTCP packets in order to collect QoS data such as bytes sent, packet losses and jitter. In cases of non-critical packet losses, this protocol supports graceful degradation of the playback quality, which is why it is used for live streaming.
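The figure of about 11 milliseconds per packet follows directly from the packet size and the bit rate, as the short calculation below shows. The helper name `packet_duration_ms` is hypothetical, and the calculation assumes the whole packet carries media payload (protocol headers are ignored).

```python
def packet_duration_ms(packet_bytes, bitrate_bps):
    """Playback time, in milliseconds, carried by one packet at a given bit rate."""
    return packet_bytes * 8 / bitrate_bps * 1000

# 1452 bytes = 11616 bits; at 1,000,000 bits per second this is about 11.6 ms.
duration = packet_duration_ms(1452, 1_000_000)
```

The same helper shows that doubling the bit rate halves the playback time carried by each packet, so higher-quality streams require proportionally more packets per second.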

RTSP has several issues such as scalability and complexity. The support of millions of devices requires managing millions of sessions and multiple ports. For this reason, along with advances in available network capacity and bandwidth, the use of RTSP becomes outdated, although niche use-cases still exist such as video-conferencing.

Other examples of traditional streaming protocols include Real-Time Messaging Protocol (RTMP), owned by Adobe Systems, and RTSP over Real Data Transport (RDT) protocol, owned by RealNetworks [38].

2.4.2 Progressive Download

Progressive download is a widely used pseudo-streaming method, because it allows high scalability. This approach is a simple file download from an HTTP Web server. The term “progressive” arises from the fact that, as soon as the media player receives some data, the playback may begin, while the download is still in progress (typically to the Web browser cache). Therefore, progressive download is considered pseudo-streaming due to these particular characteristics [38].

The progressive download architecture is illustrated in figure 2.9, and an example that helps to better understand how progressive download works can be seen in figure 2.10. In this example, the dark blue bar shows how far the video has been viewed, and the light blue bar shows how much of the video has been loaded into the browser (which acts as a buffer/cache). Thus, once the buffer is filled with a few seconds of video, the video begins to play as if it were in real time.

Figure 2.9: Progressive Download Architecture [3].

Figure 2.10: Example how Progressive Download works [3].

Progressive download can offer the media in different formats, such as Flash Video (FLV), QuickTime (QT) and Windows Media Video (WMV), and at different bit rates (video quality) (figure 2.11), in order to optimize the stream depending on the user’s device, which does not happen in traditional streaming. Progressive download ensures better quality than traditional streaming, because there is no packet loss at the client.

Figure 2.11: Progressive Download features [3].

The advantage of being supported via HTTP is what makes it highly scalable. File downloading through HTTP is stateless (if an HTTP client requests some data, the server responds by sending the data, without caring about remembering the client or its state), and because of that, it can easily use proxy servers and distributed caches or CDNs.

The price of this scalability is the loss of several features of RTSP: there is no support for live streaming, no adjustable streaming based on QoS metrics and no graceful degradation (missing packets will stop playback, waiting for the required data to be downloaded). However, progressive download is widely used and supported by most media players and platforms, including Adobe Flash, Silverlight, and Windows Media Player [38].

2.4.3 Adaptive Streaming Technologies

Adaptive streaming arises as a way to take advantage of the major benefits of each technology previously analyzed. If, on the one hand, RTSP contemplates some adaptation via the feedback QoS metrics sent through RTCP, on the other it presents scalability problems, as opposed to progressive download, which presents high scalability, but no support for live streaming, no adjustable streaming based on QoS metrics and no graceful degradation.

In scenarios of unreliable or varying network conditions, adaptation is a crucial feature of any streaming technology. The concept of adaptive video streaming is based on the idea of adapting the bandwidth required by the video stream to the throughput available on the network. The adaptation is performed by varying the quality of the streamed video and thus its bit rate, that is, the number of bits required to encode one second of playback [40].

There are several ways to provide adaptation. The most common ones act on the encoding or distribution processes. Since segmented HTTP-based delivery uses adaptation in the distribution process, only this class will be discussed.

2.4.3.1 Adaptive Segmented HTTP-based delivery

Segmented HTTP-based delivery can be seen as an evolution of progressive download streaming, because it provides the same benefits without the disadvantages of not supporting adaptation or live streaming.

The idea behind this method is to chop the media file into fragments (or “chunks”), usually 2 to 4 seconds long [38], and then encode each fragment at different qualities and/or resolutions. Thereafter, the choice of quality or resolution for each fragment is made on the client side based on several parameters, such as the available throughput and the state of the user’s device (battery level, available computing resources); for example, the client can switch to a higher bit rate if bandwidth permits. This approach is illustrated in figure 2.12.

Figure 2.12: Segmented HTTP Adaptive Streaming [4].

The client has full control over the downloaded data. Thus, it can mix different chunks of the same video and the only impact will be on the quality or resolution of the media. The algorithmic process of deciding the optimal representation for each fragment, in order to optimize the streaming experience, is the major challenge in adaptive streaming systems. Problems such as estimating the dynamics of the available throughput, controlling the filling level of the local buffer/cache (in order to avoid underflows and consequently playback interruptions), maximizing the quality of the stream and minimizing the delay between the user’s request and the start of the playback are not trivial [40].
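A very simple client-side adaptation rule, combining a throughput estimate with the buffer level, might look like the sketch below. It is not the algorithm of any particular player: the exponentially weighted moving average, the safety margin, the low-buffer threshold and the bit rate ladder are all assumptions chosen for illustration.

```python
def estimate_throughput(samples_bps, alpha=0.3):
    """Smooth measured chunk throughputs with an exponentially weighted moving average."""
    estimate = samples_bps[0]
    for sample in samples_bps[1:]:
        estimate = alpha * sample + (1 - alpha) * estimate
    return estimate

def choose_bitrate(available_bps, throughput_bps, buffer_s,
                   low_buffer_s=5.0, safety=0.8):
    """Pick the bit rate of the next chunk.

    - If the buffer is nearly empty, request the lowest quality to avoid a stall.
    - Otherwise request the highest bit rate that fits within a safety margin
      of the estimated throughput (or the lowest one if none fits).
    """
    ladder = sorted(available_bps)
    if buffer_s < low_buffer_s:
        return ladder[0]
    budget = safety * throughput_bps
    fitting = [b for b in ladder if b <= budget]
    return fitting[-1] if fitting else ladder[0]

ladder = [350_000, 700_000, 1_500_000, 3_000_000]   # hypothetical quality levels
healthy = choose_bitrate(ladder, throughput_bps=2_000_000, buffer_s=12.0)
starving = choose_bitrate(ladder, throughput_bps=2_000_000, buffer_s=2.0)
smoothed = estimate_throughput([1_000_000, 2_000_000], alpha=0.5)
```

The safety margin trades some quality for robustness against throughput overestimation, while the buffer check addresses the underflow problem mentioned above.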

Although this technology presents several challenges, it is growing in popularity due to its potential. Consequently, multiple implementations of segmented HTTP-based delivery have emerged, such as Apple HTTP Live Streaming (HLS), Microsoft Smooth Streaming, Adobe HTTP Dynamic Streaming (HDS) and Moving Picture Experts Group (MPEG) Dynamic Adaptive Streaming over HTTP (DASH).

Since the implementation of this MSc thesis uses Microsoft Smooth Streaming, it is presented in more detail below.

Microsoft Smooth Streaming

Smooth Streaming was developed by Microsoft as its answer in the adaptive streaming area. It is based on the HTTP and MPEG-4 file format standards [41]. Creating a Smooth Streaming presentation requires an encoder (usually Microsoft Expression Encoder or another compatible solution) to encode the same source content at several quality levels, typically with each level in its own complete file. Then the content is delivered using a Smooth Streaming-enabled Internet Information Services (IIS) origin server. Finally, a Smooth Streaming client is required. Microsoft provides a client implementation based on Silverlight [5]. The IIS Smooth Streaming media workflow is illustrated in figure 2.13.

Figure 2.13: IIS Smooth Streaming Media Workflow [5].

After the IIS origin server receives a request for media, it will send to the client a manifest file about the requested media and dynamically create cacheable virtual fragments from the video files. The benefit of this virtual fragment approach is that the content owner can manage complete files rather than thousands of pre-segmented content files [5].

There are two different manifest files, client and server, both in Extensible Markup Language (XML) format. The client manifest is downloaded by the client and processed in order to initiate the playback. This file reveals the internal structure of the adaptive content, such as the number of available tracks (encoded streams), resolution, duration and how they are fragmented (number of chunks and the duration of each). With this information, the client may decide to first request the lowest quality chunks in order to evaluate the network conditions and then decide whether to scale up the quality. An example of a client manifest file is shown in figure 2.14.

Figure 2.14: Client Manifest File - Example.
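To illustrate how a client could extract this information, the sketch below parses a simplified, hand-written manifest with Python's standard XML parser. The sample is not the manifest of figure 2.14: its element and attribute names (`SmoothStreamingMedia`, `StreamIndex`, `QualityLevel`, `c`, `Bitrate`, `d`) and the default time scale of 10,000,000 units per second are assumptions modeled on the structure described above, and a real manifest carries more attributes.

```python
import xml.etree.ElementTree as ET

# Simplified, illustrative client manifest (assumed structure, not a real capture).
SAMPLE_MANIFEST = """
<SmoothStreamingMedia MajorVersion="2" MinorVersion="0" Duration="40000000">
  <StreamIndex Type="video" Chunks="2" QualityLevels="2">
    <QualityLevel Index="0" Bitrate="350000" MaxWidth="320" MaxHeight="180"/>
    <QualityLevel Index="1" Bitrate="1500000" MaxWidth="1280" MaxHeight="720"/>
    <c d="20000000"/>
    <c d="20000000"/>
  </StreamIndex>
</SmoothStreamingMedia>
"""

def summarize_manifest(xml_text, timescale=10_000_000):
    """Return the duration, and per track the type, bit rates and chunk durations."""
    root = ET.fromstring(xml_text.strip())
    tracks = []
    for stream in root.findall("StreamIndex"):
        bitrates = sorted(int(q.get("Bitrate")) for q in stream.findall("QualityLevel"))
        chunk_seconds = [int(c.get("d")) / timescale for c in stream.findall("c")]
        tracks.append({
            "type": stream.get("Type"),
            "bitrates": bitrates,
            "chunk_seconds": chunk_seconds,
        })
    return {"duration_seconds": int(root.get("Duration")) / timescale, "tracks": tracks}

summary = summarize_manifest(SAMPLE_MANIFEST)
lowest_bitrate = summary["tracks"][0]["bitrates"][0]  # a cautious client could start here
```

Starting from the lowest bit rate listed in the manifest matches the conservative start-up behavior described in the text.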

The server manifest file is used by the streaming service (usually IIS origin server). This file provides a macro description of the encoded content, such as the number of encoded streams (tracks), the track type (video, audio or text), the location of each track and some more information (codec type, bit rate, resolution, etc).

The following section presents the importance of using a prefetching algorithm in CDNs, since the content on the Internet continues to grow but the size of the cache is fixed.

2.5 Prefetching

Prefetching is a generic term used to refer to something that is loaded into memory before being explicitly requested. Thus, web prefetching is a technique which reduces the user-perceived latency by predicting web objects and storing them in advance, hoping that the prefetched objects are likely to be accessed in the near future [42, 43, 44]. Therefore, a prefetching mechanism needs to be used in conjunction with a caching strategy.

Prefetching strategies are diverse and no single strategy has yet been proposed which provides optimal performance, since there will always be a compromise between the hit ratio and bandwidth [45]. Intuitively, to increase the hit ratio, it is necessary to prefetch those objects that are accessed most frequently but, to minimize the bandwidth consumption, it is necessary to select those objects with longer update intervals [44]. To be effective, prefetching must be implemented in such a way that prefetches are timely, useful and introduce little overhead [45]. Downloading data that is never used is of course a waste of resources.

Many studies have been made over the years. Initially, prefetching algorithms considered as metrics only the characteristics of the web objects, such as their access frequency (popularity), sizes and lifetimes (update frequency). This led to the proposal of several prefetching algorithms such as prefetch by popularity, lifetime and good fetch, to name a few.

Prefetch by Popularity: Markatos et al. [46] suggested a “Top Ten” criterion for prefetching web objects. Each server maintains access records of all objects it holds and, periodically, calculates a list of the 10 most popular objects and keeps them in memory [44, 47]. The problem with this approach is that it assumes that all users have the same preferences and, moreover, it does not keep track of a user’s history of accesses during the current session [48]. A slight variation of the “Top Ten” approach is to prefetch the m most popular objects from the entire system. Since popular objects are most likely to be required, this approach is expected to achieve the highest hit rate [44, 47].

Prefetch by Lifetime: The lifetime of an object is the interval between two consecutive modifications of the object. Since prefetching increases system resource requirements, such as server disk and network bandwidth, the latter will probably be the main limiting factor [49]. Thus, since the content is downloaded from the web server whenever the object is updated, in order to reduce the bandwidth consumption it is natural to choose those objects that are less frequently updated. Prefetch by lifetime selects the m objects with the longest lifetime to replicate in the local cache and, thus, aims to minimize the extra bandwidth consumption [44, 47].

Good Fetch/Threshold: Venkataramani et al. [50] proposed a threshold algorithm that balances the access frequency and update frequency (lifetime), and only fetches objects whose probability of being accessed before being updated exceeds a specified threshold. The intuition behind this criterion is that objects with relatively higher access frequencies and longer update intervals are more likely to be prefetched. Thus, this criterion provides a natural way to limit the bandwidth wasted by prefetching.
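The three selection criteria can be compared on a toy catalogue, as in the sketch below. The object names and numbers are invented, and the good fetch probability is computed under an assumption that is not taken from [50]: accesses are modeled as a Poisson process, so the probability of at least one access within an object's lifetime is 1 - exp(-access_rate * lifetime).

```python
import math
from dataclasses import dataclass

@dataclass
class WebObject:
    name: str
    access_rate: float  # accesses per hour (popularity)
    lifetime: float     # hours between two consecutive updates

def by_popularity(objects, m):
    """Prefetch the m most frequently accessed objects."""
    ranked = sorted(objects, key=lambda o: o.access_rate, reverse=True)
    return [o.name for o in ranked[:m]]

def by_lifetime(objects, m):
    """Prefetch the m objects with the longest update interval."""
    ranked = sorted(objects, key=lambda o: o.lifetime, reverse=True)
    return [o.name for o in ranked[:m]]

def good_fetch(objects, threshold):
    """Prefetch objects likely to be accessed before their next update.

    Assumption: Poisson accesses, so P(access before update) = 1 - exp(-rate * lifetime).
    """
    selected = []
    for o in objects:
        p_access = 1 - math.exp(-o.access_rate * o.lifetime)
        if p_access > threshold:
            selected.append(o.name)
    return selected

catalogue = [
    WebObject("news", access_rate=50, lifetime=0.01),      # popular, changes constantly
    WebObject("logo", access_rate=0.1, lifetime=1000),     # rarely accessed, very stable
    WebObject("video", access_rate=5, lifetime=24),        # popular and fairly stable
    WebObject("archive", access_rate=0.001, lifetime=500), # unpopular, stable
]
popular = by_popularity(catalogue, 2)
stable = by_lifetime(catalogue, 2)
worthwhile = good_fetch(catalogue, threshold=0.5)
```

On this catalogue, popularity alone selects the fast-changing news object and lifetime alone selects the rarely requested archive, whereas the threshold criterion keeps only objects that are both likely to be requested and unlikely to be stale.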

Although these methods exhibit some efficacy, recent studies reveal that prefetching algorithms must take several other factors into account, such as the session/consumer behavior and the content itself. Andersson et al. [51] investigated the potential of different prefetching and/or caching strategies for different user behaviors in a catch-up TV network (concept explained in subsection 2.2.1). The objective was to reduce the zapping time, i.e. the time from channel selection to the start of playout, or the reaction time to fast forward or rewind, in order to improve the user’s perceived QoE. In this study, consumers were divided into two groups, depending on their behavior: zappers and loyals.

Zappers are characterized by fast switching between programs while searching for the desired content, whereas loyals consume their first selection to the end before requesting another. With this division, Andersson et al. [51] aimed to better interpret the behavior of their consumers, in order to improve the service and keep customers satisfied. The main conclusions were that zappers are more prone than loyals to watch several different streams per session, although their sessions are shorter. It was also found that zapping-prone channels exist: a movie channel, for example, showed more zapping behavior than a mixed channel. Therefore, it is worth comparing the request patterns of channels and not just the behavior of consumers.

The study also analyzes the prediction gains that can be obtained for episodes of the same series. Loyals have a higher probability than zappers of going from episode X to episode X + 1. Zappers, on the other hand, are more likely than loyals to request previous episodes. Thus, a good prefetching algorithm should be able to adapt to the profiles of both consumers and contents.

In a similar approach, but without division into groups, Nogueira et al. [52] present a detailed analysis of the characteristics of users’ viewings. The results show that Catch-up TV consumption exhibits very high levels of utilization throughout the day, especially on weekends and Mondays. While higher utilization on weekends is expected, since consumers tend to have more free time, the utilization on Mondays is explained by users catching up on programs that they missed over the weekend. The superstar effect is also evident: in a universe of 88,308 unique programs, the top 1,000 programs are responsible for approximately 50% of the total program requests. The results also show that most Catch-up TV playbacks occur shortly after the original airing of the content, and that users prefer mostly General, Kids, Movies and Series content, since it is not time dependent. Sport and News content, by contrast, quickly becomes irrelevant after the first two days.

All of this information about each user’s habits and preferences, and even about the days on which the service is used most, is helpful in designing an effective and efficient prefetching mechanism.

Bonito et al. [53] present an algorithm that takes into account information collected from the user session in real time. The prediction system was designed to adapt itself to changes within a reasonable time. To this end, a set of “prediction machines” is defined, evolved and evaluated through an evolutionary algorithm in order to obtain better prediction performance using information gathered from user sessions. A user session is defined as a sequence of web requests from a given user. Each request is a “GET”, “POST” or “HEAD” request sent over the HTTP protocol [RFC 2616] to a web server from a host with a given IP address. The user is considered an anonymous entity, approximately identified by a combination of the source IP address and the client cookie. The same person connecting to the same site at a later time would be identified as a different user. A request from a user is only considered valid if the HTTP response code is 2xx (success). Thus, the evolutionary algorithm processes a series of user requests, learning from them and trying to find a pattern in order to predict the user’s next action.
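The session definition above can be illustrated with a short sketch that only builds the input sequences; it does not attempt to reproduce the evolutionary algorithm of [53]. The log tuple layout `(ip, cookie, method, url, status)` and the sample entries are hypothetical.

```python
from collections import defaultdict

VALID_METHODS = {"GET", "POST", "HEAD"}


def build_sessions(log):
    """Group valid requests by (source IP, cookie), preserving request order.

    A request is kept only if its method is GET, POST or HEAD and its
    HTTP response code is 2xx.
    """
    sessions = defaultdict(list)
    for ip, cookie, method, url, status in log:
        if method in VALID_METHODS and 200 <= status < 300:
            sessions[(ip, cookie)].append((method, url))
    return dict(sessions)


# Hypothetical access log: (ip, cookie, method, url, status).
log = [
    ("10.0.0.1", "c1", "GET", "/index", 200),
    ("10.0.0.1", "c1", "GET", "/missing", 404),   # dropped: not 2xx
    ("10.0.0.2", "c2", "POST", "/login", 204),
    ("10.0.0.1", "c1", "HEAD", "/video", 200),
    ("10.0.0.1", "c9", "GET", "/index", 200),     # new cookie: a different user
]

sessions = build_sessions(log)
print(sessions[("10.0.0.1", "c1")])  # [('GET', '/index'), ('HEAD', '/video')]
```

Each resulting list is one anonymous user's ordered request sequence, which is the kind of input a next-request predictor would learn from.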

Prefetching techniques do not reduce latency as such; they only use the time during which the network is idle to fetch the content that the user is predicted to request next, which reduces the response time when the prediction is correct. The following section presents the concept of multimedia caching and the most widely used algorithms.

2.6 Multimedia Caching

Section 2.3 explains in detail how multimedia content is delivered in OTT networks using the CDN infrastructure. This infrastructure, as mentioned, is composed of multiple replica servers that acquire and cache data from origin servers in order to bring the content closer to the consumer and avoid heavy network congestion. Multimedia caching is then the process of storing multimedia data (web content and video) in a cache [54].

Since the cache of a replica server is limited in size, how effectively it is used depends on several factors, such as user behavior, content popularity and the caching algorithm itself. Thus, a prefetching algorithm (see section 2.5) should be used along with the caching algorithm to help predict user behavior and better decide whether a given content is worth caching.

This section therefore presents the only factor that has not yet been discussed: the caching algorithm. The next subsection provides an overview of popular caching algorithms, such as FIFO, LRU and LFU. It also presents an approach developed in our group, the MPU.

2.6.1 Caching Algorithms

In order to understand how some cache policies work, it is useful to consider a simple example: the reference string (1,2,3,4,1,2,5,1,2,3,4,5) represents the order in which content is requested (different numbers represent different contents), with a cache size of 3 elements [55].

The simplest page-replacement algorithm is FIFO. A FIFO replacement algorithm associates with each page the time when that page was brought into memory. When a page must be replaced, the oldest page is chosen. Table 2.1 shows the reference string applied to a cache employing FIFO: 3 cache hits are achieved, along with 9 page faults.

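Table 2.1: FIFO Cache Replacement Policy

| Iteration | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Request | 1 | 2 | 3 | 4 | 1 | 2 | 5 | 1 | 2 | 3 | 4 | 5 |
| Result | miss | miss | miss | miss | miss | miss | miss | hit | hit | miss | miss | hit |
| Page 1 | 1 | 1 | 1 | 4 | 4 | 4 | 5 | 5 | 5 | 5 | 5 | 5 |
| Page 2 |   | 2 | 2 | 2 | 1 | 1 | 1 | 1 | 1 | 3 | 3 | 3 |
| Page 3 |   |   | 3 | 3 | 3 | 2 | 2 | 2 | 2 | 2 | 4 | 4 |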
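The FIFO walkthrough in Table 2.1 can be checked with a small simulation. This is a minimal sketch, not a production cache: the function name is hypothetical, and the queue stores pages in insertion order rather than in the fixed slots shown in the table, although the cached contents at each step are the same.

```python
from collections import deque


def simulate_fifo(requests, capacity):
    """Replay a request sequence on a FIFO cache; return (hits, faults)."""
    cache = deque()  # leftmost element is the oldest page
    hits = faults = 0
    for item in requests:
        if item in cache:
            hits += 1          # a hit does not change the eviction order
        else:
            faults += 1
            if len(cache) == capacity:
                cache.popleft()  # evict the page that was inserted first
            cache.append(item)
    return hits, faults


REFERENCE = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
hits, faults = simulate_fifo(REFERENCE, capacity=3)
print(hits, faults)  # 3 9
```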

This algorithm is easy to understand and program. However, its performance is not always good, since FIFO is well known to be vulnerable to Bélády’s anomaly [56].
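Bélády’s anomaly refers to the situation in which giving a cache more capacity increases the number of page faults. The reference string used in this section happens to exhibit it under FIFO, as the following minimal sketch suggests (the function name is hypothetical):

```python
from collections import deque


def fifo_faults(requests, capacity):
    """Count page faults of a FIFO cache with the given capacity."""
    # With maxlen set, appending to a full deque discards the oldest entry,
    # which is exactly the FIFO eviction rule.
    cache = deque(maxlen=capacity)
    faults = 0
    for item in requests:
        if item not in cache:
            faults += 1
            cache.append(item)
    return faults


reference = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
faults_by_size = {size: fifo_faults(reference, size) for size in (3, 4)}
print(faults_by_size)  # {3: 9, 4: 10}
```

Growing the cache from 3 to 4 elements raises the fault count from 9 to 10 for this particular request sequence.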

The LRU replacement algorithm uses the recent past as an approximation of the near future, removing the least recently accessed items as needed to make room for a new item. This approach does not suffer from Bélády’s anomaly because it belongs to a class of page-replacement algorithms called stack algorithms [55]. Table 2.2 demonstrates the

![Figure 2.1: Typical CDN infrastructure [1].](https://thumb-eu.123doks.com/thumbv2/123dok_br/15966558.1100494/32.892.156.753.589.1034/figure-typical-cdn-infrastructure.webp)

![Figure 2.12: Segmented HTTP Adaptive Streaming [4].](https://thumb-eu.123doks.com/thumbv2/123dok_br/15966558.1100494/43.892.146.762.711.916/figure-segmented-http-adaptive-streaming.webp)

![Figure 2.13: IIS Smooth Streaming Media Workflow [5].](https://thumb-eu.123doks.com/thumbv2/123dok_br/15966558.1100494/44.892.203.709.578.899/figure-iis-smooth-streaming-media-workflow.webp)