Universidade do Minho

Escola de Engenharia

Mauro José Ferreira de Freitas

Personalized approach on a smart image

search engine, handling default data

Universidade do Minho

Dissertação de Mestrado

Escola de Engenharia

Mauro José Ferreira de Freitas

Personalized approach on a smart image

search engine, handling default data

Mestrado em Engenharia Informática

Trabalho realizado sob orientação de

Professor Cesar Analide

ii

Acknowledgements

I would like to thank my supervisor Cesar Analide who gave me the opportunity to develop this project under his guidance and helped me on my academic growth in the last two years during my master's degree journey.

I would also like to show my appreciation for professors José Maia Neves and Henrique Vicente who helped me in the development of scientific contribution.

To Bruno Fernandes I would like to thank the support provided during the course of this project. His contribution was essential in the past few months during the completion of this project and also in carrying out the scientific contributions.

To all my friends, thanks for everything we've been through in the last five years since we were admitted in Informatics Engineering. Your support was very important to complete this stage of my academic life.

Finally I thank my parents and sisters who were always available to help me, providing all conditions for the achievement of all my objectives.

iii

Abstract

Search engines are becoming a tool widely used these days, there are different examples like Google, Yahoo!, Bing and so on. Adjacent to these tools is the need to get the results closer to each one of the users. Is in this area that some work has been developed recently, which allowed users to take advantage of the information presented to them, with no randomness or a sort of generic factors.

This process of bringing the user closer to the results is called Personalization. Personalization is a process that involves obtaining and storing information about users of a system, which will be used later as a way to adapt the information to present. This process is crucial in many situations where the filtering of content is a requirement, since we deal daily with large amounts of information and it is not always helpful.

In this project, the importance of personalization will be evaluated in the context of intelligent image search, making a contribution to the project CLOUD9-SIS. So, it will be evaluated the information to be treated, how it will be treated and how it will appear. This evaluation will take into account also other examples of existing search engines. These concepts will be used later to integrate a new system of searching for images, capable of adapting its results depending on the preferences captured from user interactions. The usage of the images was only chosen because CLOUD9-SIS is intended to return images as a result, it was not developed or used any technique for image interpretation.

Keywords: Personalization; Case-Based Reasoning; Intelligent Systems; Search Engines;

iv

Resumo

Os motores de busca estão a tornar-se uma ferramenta bastante utilizada nos dias de hoje, existindo diferentes exemplos, tais como Google, Yahoo!, Bing, etc. Adjacente a essas ferramentas surge a necessidade de aproximar cada vez mais os resultados produzidos a cada um dos utilizadores. É nesta área que tem sido desenvolvido algum trabalho recentemente, o que permitiu que os utilizadores, pudessem tirar o melhor proveito da informação que lhes é apresentada, não havendo apenas uma aleatoriedade ou factores de ordenação genéricos.

A este processo de aproximação do utilizador aos resultados dá-se o nome de Personalização. A Personalização é um processo que consiste na obtenção e armazenamento de informações sobre os utilizadores de um sistema, para posteriormente serem utilizadas como forma de adequar a informação que se vai utilizar. Este processo é determinante em várias situações onde a filtragem dos conteúdos é um requisito, pois lidamos diariamente com grandes quantidades de informação e nem sempre esta é útil.

Neste projecto, vai ser avaliada a preponderância da Personalização no contexto da pesquisa inteligente de imagens, dando um contributo ao projecto CLOUD9-SIS. Assim, será avaliada a informação a ser tratada, a forma como será tratada e como será apresentada. Esta avaliação terá em consideração também exemplos de outros motores de busca já existentes. Estes conceitos serão posteriormente utilizados para integrar um novo sistema de procura de imagens que seja capaz de adaptar os seus resultados, consoante as preferências que vão sendo retiradas das interacções do utilizador. O uso das imagens foi apenas escolhido porque o projecto CLOUD9-SIS é suposto retornar imagens como resultado, não foi desenvolvida nem utilizada nenhuma técnica de interpretação de imagens.

Palavras-chave: Personalização; Raciocínio Baseado em Casos; Sistemas Inteligentes; Motores

v

Contents

Acknowledgements ... ii Abstract ... iii Resumo ...iv Contents ... vList of Figures ... vii

Abbreviations ... viii

1. Introduction ... 1

1.1 Motivation ... 3

1.2 Objectives ... 4

1.3 Structure of the document ... 5

2. State of the Art ... 6

2.1. Intelligent Systems ... 6

2.1.1 Artificial Neural Network ... 6

2.1.2 Case-Based Reasoning ... 7

2.1.3 Genetic and Evolutionary Algorithms ... 12

2.2. Personalization over search engines ... 14

2.2.1 Benefits... 17

2.2.2 Disadvantages ... 18

3. Technologies ... 19

3.1. Programming Language ... 19

3.2. Android ... 20

3.3. Case-Based Reasoning - Decision ... 21

3.4. Bing API ... 21

4. Implementation ... 23

vi

4.1.1 String Comparison ... 25

Jaro-Winkler ... 26

Levenshtein ... 26

Dice’s Coefficient ... 27

4.1.2 Handling Default Data ... 28

4.2. Data Model ... 34 4.3. Mobile Application ... 37 4.3.1 Search ... 38 4.3.2 Settings ... 40 4.3.3 Results ... 44 5. Case Study ... 47 6. Results ... 52

7. Conclusion and Future Work ... 57

7.1. Synthesis of the work done ... 57

7.2. Relevant work... 59

7.3. Future work ... 60

vii

List of Figures

Figure 1 - Typical CBR Cycle (Aamodt and Plaza, 1994). ... 2

Figure 2 - Example of a ANN structure, showing a possibility of interconnections between neurons ... 7

Figure 3 - CBR paradigm illustration. ... 7

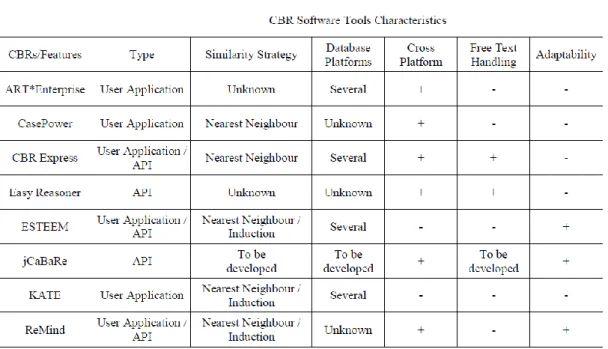

Figure 4 - Characteristics of existent CBR Software Tools. ... 12

Figure 5 - GEA process of getting a solution to a problem. ... 13

Figure 6 - Four main factors that personalize searches on Google. ... 15

Figure 7 - Example of Yandex suggestions and results. ... 16

Figure 8 - Architecture of the Solution. ... 23

Figure 9 - Sequence Diagram of the Solution ... 24

Figure 10 - Sequence Diagram of the Server. ... 25

Figure 11 - Adapted CBR cycle taking into consideration the normalization phase. ... 28

Figure 12 - Evaluation of the Degree of Confidence. ... 33

Figure 13 - Case base represented in the Cartesian plane. ... 33

Figure 14 - Case base represented in the Cartesian plane. ... 34

Figure 15 - Structure of the CBR Cases. ... 35

Figure 16 - Structure of the CBR Cases Adapted... 35

Figure 17 - Data Model of the Solution. ... 36

Figure 18 - Search Screen. ... 38

Figure 19 - Use Case Diagram of Search. ... 39

Figure 20 - Sequence Diagram of Search. ... 40

Figure 21 - Settings Screen. ... 42

Figure 22 - Use Case Diagram of Settings. ... 43

Figure 23 - Sequence Diagram of Settings. ... 43

Figure 24 - Results Screen... 45

Figure 25 - Result Sport Screen. ... 53

Figure 26 - Personalized Results Screen. ... 54

viii

Abbreviations

ANN Artificial Neural Network CBR Case-Based Reasoning

GEA Genetic and Evolutionary Algorithms IS Intelligent Systems

API Application Programming Interface DoC Degree of Confidence

QoI Quality of Information DFT Density Functional Theory

CD Constitutional Molecular Descriptor QD Quantum-Chemical Molecular Descriptor BP-ANN Back Propagation Artificial Neural Network EA Evolutionary Algorithms

1

1. Introduction

Search engines are technologies that have long appeared in our day-to-day. However, they are still evolving, searching for a solution adapted to the concept of each user.

This issue has been a concern for the major search engines that gradually turned their results more targeted to each user. This means that the information itself is not the only factor influencing the results.

This connection of the information with the user gains a new dimension, contributing greatly to the efficiency of the search. This improvement was confirmed by the research made by Yandex, which is the most used search engine in Russia. They include countries such as Ukraine, Belarus and Kazakhstan only by the use of Personalization. Results showed that users click 37% more on the first result when personalized, making the process of finding the information the user is looking for 14% faster (Kruger A., 2012).

The scope of this project is to take a non-personalized search engine and manipulate it using techniques of Intelligent Systems, this manipulation is intended to make user's will a filtering parameter.

This issue has been addressed in various search engines, however there is little information on the techniques used, so this project shows a way to use the power of intelligent systems techniques to create a support system for a search engine.

Almost all search engines are beginning to use the personalization, however they use it in different ways. This is because personalization can be based in Q&A forms or in users historic. Within these two categories, personalization can be based only on the responses to forms or else the combination of the user with the preferences of similar users (Analide C. and Novais P., 2012).

Besides personalization there are other techniques related to the extraction of information that have to be studied with the intention to create a more reliable system. In this subject there are a few possibilities however this project will explore the capacities of the CBR. The decision for this technique will be explained next.

The problem described above can be partially solved using the previous searches, which are stored on a kind of database. This procedure is very connected to the philosophy of CBR

2

systems, which can solve a problem by getting information from previous experiences kept on a knowledge base.

An example is the medical health screening which in most cases are based on previous experiences. According to Aamodt and Plaza this technique is a possibility for problems where the amount of information is wide and where the past experiences could lead to a possible solution. These last experiences provide helpful information needed to solve the current case.

However CBR can only be applied when there's something similar between cases that can be kept and then used as a first step to achieve a solution to a new problem (Balke T. et al, 2009).

This technique has a wide range of application, and this was expressed by Khan, Hoffmann and Kolodner, which uses CBR for medical problems or by Davide Carneiro who came up with a solution to the law problem of dispute resolution (Carneiro D., 2009).

Besides all this great features, the availability of data and the cost of obtain it, continues to be the major problem within this technique.

Aamodt and Plaza in 1994 defined a model that is nowadays widely accepted when speaking about CBR.

3

As seen on Figure 1 there a few vocabularies that were adopted. For example, a problem

is expressed as a case and the previous cases are kept on a domain knowledge. They also clarify that, in order to work with CBR, the first step is to define the structure of the case. This structure is almost always composed by the problem, the description and the solution however it is possible to be adapted according the scope of the problem. This structure will be important from now on, it will have to be kept for every cases and will have to include every information helpful for the search of a solution.

Having the new case structured correctly the following step is to retrieve from the knowledge base the cases more similar to the new case. This measure of similarity is limited by a threshold that can be suited to each case study.

With the retrieved cases and the new case obtained, both could be combined on the reuse phase to provide a solution. After this step the solution is confirmed and can be tested and adapted before being established as a final solution, which would be stored on the domain knowledge.

The revise phase is one of the most important because is where the case is tested and where the user can interact with the suggested solution. At this stage the solution can be adapted or corrected providing more reliability to the cases that are stored. This interaction can solve some problems that automatic adaptation can't. Kolodner in 1992 defined the main adaptation techniques as the adaptation of attributes of the case, the usage of rules and the analog transfer (Kolodner J., 1992).

While a valuable solution, that will be stored on the domain knowledge, were not achieved, the process can become iterative.

1.1 Motivation

Based on an existing search engine, the motivation lies in the possibility of creating an intelligent system able to capture information from users so it can be used later.

Many search engines use personalization, however there are few references on the techniques used and they are often related to the location and previous searches of the user.

This gap has been detected and became the biggest motivating factor, since some new paths can be explored in regards to this issue.

4

Another motivation lies on the possibility to create a new CBR model able to deal with unknown information in a much effective way than the already developed systems. This new approach is being studied and now it can be improved and implemented to retrieve better results. There is also the fact that this approach was never applied to this area, which represents an additional challenge to this work.

Finally this work is intended to be applied on a mobile application, so another motivation is the development of the application itself however in regards to the knowledge extraction there's the motivation of creating a system able to communicate with many other systems.

1.2 Objectives

The goal of this project is to develop a mobile application that provides a search engine with the possibility to perform a regular search and with an intelligent and customizable module, able to provide to the users some content perfectly suited to his particular taste, his preference, i.e., his wills. This work is intended to provide a new approach to a search engine with new techniques, using the images only as the result to the analysis of the query performed. The images won't be handled by any particular system that could help when trying to find some matches to the query presented to the system. However, the system will be developed on a way that some additional modules could be added on future to enhance the solution we want to create. This will give the possibility to establish a connection between cases also by analyzing some features of the images such as colors or shadows.

To do this, techniques of knowledge analysis and extraction were studied, in order to create models able to determine the most effective way to collect information from the user and the searches. These techniques must be capable of handling large amounts of different kinds of information, such as uncertain or unknown information, and still be able to produce a result in good time.

In regards to the uncertain or unknown information there's also the objective of adapting a new approach that will be studied to the context of this project. This approach will have to be capable of dealing with the storage of large amounts of data.

Related to the knowledge extraction, in this work it will communicate with a mobile application, however since the beginning an objective is to create a cross platform solution, so

5

the knowledge extraction system will be able to communicate with web applications for instance, once the search engines are mostly used on web (Google, Yahoo, etc.).

Finally the objective to the mobile application is to create a user friendly environment with the simplicity of many other search engines, that allows the user to instinctively reach his purposes of finding the right content.

1.3 Structure of the document

This document will be split in seven parts. The first one where will be explained the project, establishing a context, the motivation to explore this path and which objectives are intended to be reached.

Then there's the state of the art where is described how search engines are dealing with this subject, showing when possible examples of how it's made. It is also made an analysis of some intelligent systems techniques that were thought to be used in this project. This analysis contains also some advantages, disadvantages and application areas for each technique.

The following chapter is related to the technologies that were used in the implementation proposed in this project. Here there are some explanations of how the technologies operate and how they could be useful in our implementation. There is also a conclusion about the analysis performed on the previous chapter in regards of the intelligent system technology to use.

The fourth chapter is one of the most important chapters of this thesis. The Implementation chapter is divided in three sub chapters where is detailed the major decisions taken in the creation of the back office, the data model and the mobile application.

To complement this new approach and in regards of the handling default data technique, it was created a case study that was described on chapter five. This case study helps to understand how the CBR system will handle the repository and how the new cases will be handled when presented to the system.

The second last chapter is where the results of the work performed over this project are described. Here some conclusions are taken in regards of the techniques that were chosen.

Finally the last chapter is the conclusion and future work. This chapter is divided in three major topics, the synthesis of the work performed, the relevant work such as scientific contributions and some guidelines about what is being done and what could be done in future in order to take this project to the next level.

6

2. State of the Art

After choosing the theme, a research was made in order to understand what has been done in this area. It is well known that the major search engines such as Google, Yahoo!, Bing and so on, use personalization in their searches. This factor is also used in other systems that are not exactly a search engine such as Amazon or eBay. However it is unclear which technique is used to filter and suggest the results.

It is known however that the vast majority of users of the systems mentioned above are against the collection of information during their use. Yet only 38% of users know how to work around this situation (Barysevich A., 2012).

2.1. Intelligent Systems

Since the purpose is the development of an intelligent system, there are some possibilities to be considered related to the existing techniques.

There are many techniques and each one has its pros and cons, so the choice of the technique required a reflection to realize which one suited well in this project. After this reflection it was chosen the technique considered closer to the project requirements.

There are 3 main techniques used with regards to intelligent systems, they are the Artificial Neural Networks, Case-Based Reasoning and Genetic and Evolutionary Algorithms.

2.1.1 Artificial Neural Network

Artificial neural networks are computational systems that seek for a problem solution using, as base structure, the central nervous system of humans. It is also considered an artificial neural network any processing structure whose paradigm is connectionism.

7

Figure 2 - Example of a ANN structure, showing a possibility of interconnections between neurons

2.1.2 Case-Based Reasoning

The case-based reasoning is a paradigm that consists in solving problems, making use of previous cases that have occurred. When a problem is proposed, a similarity to those observed earlier is calculated in order to see which one is closest. Discovered that similar case the same solution is applied.

8

When applying this type of paradigm, first step consists in creating a structure for each case, which is usually composed of the problem itself, the solution and the justification.

The choice for this kind of reasoning is usual in areas where knowledge from experience is vast, when there are exceptions to the rule and when learning is crucial (Analide C., et al., 2012;Kolodsen J., 1992;Aamodt A. and Plaza E., 1994).

As it was said before, the range of a CBR system is extremely wide. A few examples are medical diagnosis, law, assisted learning, diets and so on.

According to Riesbeck and Shank or Tsinakos, CBR systems can improve assisted student learning. SYIM_ver2 is a software developed to monitor the student's performance, saving his learning needs or even answering the student's questions. The reasoning of this system is based on a CBR (Riesbeck C. and Schank R., 1991;Tsinakos A., 2003).

This system identifies similar questions and uses them to answer a new one. The search of similar questions is made according to a key search feature. The PISQ (Process of Identification of Similar Questions) is responsible to find and provide a solution on Educational knowledge base.

SYIM_ver 2 allows the user to make two kinds of searches, a free text search and a controlled environment search, which was an advantage when compared with the previous version.

In some countries is now becoming possible to solve law problems using previous experiences. This fact opened new paths for CBR systems to explore the law issue and there are many examples, one of them offers a solution to the online dispute resolution. This problem is recent since we now face a new era with a huge technology focus, which became the traditional courts not capable to deal with that amount of cases.

With the acceptance of this process new systems start to appear. In 2009/2010, Davide Carneiro presented a solution that uses past experiences to achieve a quicker solution to the real problem (Carneiro D., et al., 2009; Carneiro D., et al., 2010).

Previously in 1989 Rissland and Ashley developed a model based on hypothetical and actual cases which gave him the name HYPO. (Rissland E. and Ashley K., 1989) The input is from the user and is called Current Fact Simulation (CFS), where each case includes a description, the arguments, the explanation and justification.

9

The output is provided as a case-analysis-record with HYPOS analysis; a claim lattice showing graphic relations between the new case and the cases retrieved by the system; 3-ply arguments used and the responses of both plaintiff and defendant to them; and a case citation summary (Rissland E. and Ashley K., 1989). Related to the domain knowledge, it is structured in three places: the cases-based of knowledge (CBK), the library of dimensions and the normative standards. CBK is where the cases are stored and ,the dimensions encode legal knowledge about a certain point of view.

HYPO was a start for other systems that took advantage of the great results on legal reasoning. TAX-HYPO on tax law (Rissland E. and Skalak D., 1989), CABARET for income tax law (Skalak D. and Rissland E., 1992) and IBP for predictions on argumentation concepts (Brüninghaus S. and Ashley K., 2003) are some of the examples that derived from HYPO.

Kolodner in 1992 and Khan and Hoffmann in 2002 presented two solutions for two different problems such as medical diagnosis and diet recommendations, respectively (Kolodner J., 1992; Khan A. and Hoffman A., 2002). In case of medical diagnosis is important to filter from a huge amount of diseases and symptoms, to achieve the better treatment, as quickly as possible. The first reasoning within doctors is to use previous cases solved, to select the best solution to the current case. Making comparison with the CBR reasoning, the doctor receives a new case (patient) with a specific disease and symptoms. After achieving a solution, this new case became part of the doctor knowledge. Future cases can be easily and quickly solved based on the knowledge, improving the speed of the solution and decreasing the expenses. The CBR system will also improve the reliability and the speed of the process. In 1993 Kolodner suggested that cases should be presented in a form of a triple <problem, solution, justification>.

Besides that, the only purpose of the system is to "suggest", the final decision should be validated and adapted, if needed, by the doctor, giving more quality to the cases retained.

Regarding to the diet recommendation, this system is able to provide a menu specific to some personal features such as medical conditions, calorie restrictions, nutrients requirements or even culture issues. In 2002 Khan and Hoffman proved that a system using CBR is able to recommend a diet, fitting the patient needs.

Once more the solution is only a recommendation the expert should adapt, if needed, and then evaluate the solution provided by the available cases of the knowledge base.

On the other hand, there are several CBR software tools developed and tested, which are being applied in real situations, namely Art*Enterprise, developed by ART Inference Corporation

10

(Watson I., 1996). It offers a variety of computational paradigms such as a procedural programming language, rules, cases, and so on. Present in almost every operating system, this tool contains a GUI builder that is able to connect to data in most of proprietary DBMS formats. It also allows the developer to access directly the CBR giving him/her more control of the system, which in most situations may turn into a drawback.

The CBR itself is also quite limited since the cases are represented as flat values (attribute pairs). The similarity strategy is also unknown, which may represent another constraint since there is no information about the possibility of adapting it. These features are presented on Figure 4.

CasePower which is a tool developed using CBR. It was developed by Inductive Solutions Inc.(Watson I., 1996) and uses the spreadsheet environment of Excel. The confines of the Excel functionalities are echoed on the CBR framework, making it more suitable for numerical applications. The retrieval strategy of CasePower is the nearest neighbor, which uses a weighted sum of given features. In addition to this strategy the system attaches an index to each case in advance, improving the system performance in the retrieval process. The adaptation phase in this system may be made through formulas and macros from Excel but the CBR system in itself cannot be adapted as explained in Figure 4.

CBR2 denotes a family of products from Inference Corporation, and may be the most successful CBR. This family is composed by CBR Express which stands for a development environment, featuring a customer call tracking module; the CasePoint which is a search engine for cases developed by CBR Express; the Generator that allows the creation of cases-bases through MS Word or ASCII files; and finally the Tester which is a tool capable of providing metrics for developers. In this tool the cases structure comprises a title, a case description, a set of weighted questions (attribute pairs), and a set of actions. Such as CasePower, CBR2 uses the nearest neighbor strategy to retrieve the cases initially. Cases can also be stored in almost every proprietary database format. However, the main and most valuable feature of CBR2 is the ability to deal with free-form text. It also ignores words like and, or, I, there, just to name a few. It also uses synonyms and the representation of words as trigrams, which makes the system tolerant to spelling mistakes and typing errors.

The EasyReasoner that is a module within Eclipse, being extensively regulated since it is available as a C library, so there is no development interface and is only suitable for experienced C programmers. This software developed by Hayley Enterprises (Watson I., 1996) is very similar

11

to ART and also ignores noise words and use trigrams to deal with spelling mistakes. Once more, these tools are not likely to be adapted because it is an API, and the similarity strategy is not even known.

The ESTEEM software tool, which was developed by Esteem Software Inc., has its own inference engine, a feature that allows the developer to create rules, providing a control over the induction process. Moreover, cases may have a hierarchy that can narrow the searches, which is very useful when accessing multiple bases and nested cases. The matching strategy is also the nearest neighbor. The advantages of this tool are the adaptability of the system and the usage of two different similarity strategies (nearest neighbor and induction). A disadvantage is the fact that it only runs on Windows (Watson I., 1996).

jCaBaRe is an API that allows the usage of Case-Based Reasoning features. It was developed using Java as a programming language, which gives this tool the ability to run in almost every operating system. As an API, jCaBaRe has the possibility to be adapted and extended providing a solution for a huge variety of problems. The developer can establish the cases attributes, the weight of each attribute, the retrieval of cases, and so on. One of its main limitations is the fact that it still requires a lot of work, by the developer, to achieve an working system. Several features must be developed from scratch.

The Kate software tool that was produced by Acknosoft (Althoff K-D., et al., 1995) and is composed by a set of tools such as Kate-Induction, Kate-CBR, Kate-Editor, and Kate-Runtime. The Kate-Induction tool is based on induction and allows the developer to create an object representation of cases, which can be imported from databases and spreadsheets. The induction algorithm can deal with missing information. In these cases, Kate is able to use the background knowledge. The Kate-CBR is the tool responsible for case comparison and it uses the nearest neighbor strategy. This tool also enables the customization of the similarity assessments. Kate-Editor is a set of libraries integrated with ToolBook, allowing the developer to customize the interface. Finally, Kate-Runtime is a set of interface utilities connected to ToolBook that allows the creation of an user application.

Another CBR system is the ReMind, a software tool created by Cognitive Systems Inc. (Watson I., 1996). The retrieval strategies are based on templates, nearest neighbor, induction and knowledge-guided induction. The templates retrieval is based on simple SQL-like queries, the nearest neighbor focus on weights placed on cases features and the inductive process can be made either automatically by ReMind, or the user can specify a model to guide the induction

12

algorithm. These models will help the system to relate features providing more quality to the retrieval process. The ReMind is available as a C library. It is also one of the most flexible CBR tools, however it is not an appropriate tool for free text handling attributes. The major limitations of this system are the fact that cases are hidden and cannot be exported, and the excessive time spent on the case retrieval process. The nearest neighbor algorithm is too slow, and besides the fact that inductive process is fast, the construction of clusters trees is also slow (Watson I., 1996).

The characteristics of all these tools are compiled on Figure 4, where the implemented features are represented by “+” and the features not implemented by “---”. Figure 4 provides an overview of the main features existing in each tool.

Figure 4 - Characteristics of existent CBR Software Tools.

2.1.3 Genetic and Evolutionary Algorithms

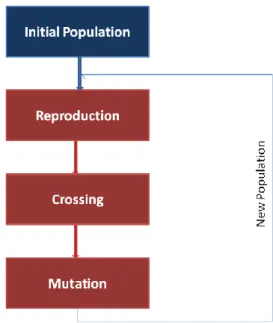

Genetic and evolutionary algorithms are computational models based on the concept of inheritance and evolution, such as the evolution of species. This algorithms use concepts of evolution and selection, mutation and reproduction operators. All those processes are dependent on the population, in a particular environment.

13

These algorithms are probabilistic and seek for an optimal local solution. First is established a population, where each individual represents a quality to the solution, and then the population will suffer several recombinations and mutations.

On the process of solving a problem, first a random population is established and the elements closer to the solution are chosen, meanwhile, crosses between individuals are made to generate new points of development.

Finally the mutation is performed and new elements are created through random changes. With this new population, the previous steps are repeated until you reach the optimal solution.

Figure 5 - GEA process of getting a solution to a problem.

Genetic and evolutionary algorithms are perfect for optimization problems, problems where the solution is difficult to find and when finding the solution requires parallelism (Analide C. et al., 2012; Back T., 1996; Linden R., 2006).

The GEA Systems have many applications such as Computer-Aided molecular design (Clark D. and Westhead D., 1996), molecular recognition (Willet P., 1995), drug design (Parrill A., 1996), protein structure prediction and chemistry (Pedersen J. and Moult J., 1996).

In molecular design there is an important activity related to the creation of new molecules, with designed qualities, in this area EA (Evolutionary Algorithms) proved to be an alternative. Venkatasubramanian et al. developed an algorithm where the molecules are converted to strings using a symbolic representation and then they are optimized to have the

14

desired properties. Glen and Payne invented also a GA (Genetic Algorithm) that allows the creation of molecules but within constraints.

Another application is the handling of the chemical structure, in this case the GA was used to determine the minimum chemical distance between two structures. The GA solved this problem by mapping one structure into another.

In quantum mechanics the EAs have been used to obtain approximate solutions to the Schrödinger equation. Zeiri et al, use an EA to calculate the bound states in a double potential and in the non-linear density function calculation. To achieve that, they use real-value encoding.

Related to Macromolecular structure prediction, protein tertiary structure is one of the most common challenges in computational chemistry because the mechanisms of folding are understood and the conformational space is enormous. EA helped on the problem of the search space, however the main concern was to find a suitable fitness function, because that is what defines the objective of the system. Once more EAs proved to be a reliable solution.

Finally in the field of protein similarity, a GA for protein structure comparison was developed by May and Johnson.

This system is a binary string GA that encodes three translations and three rigid body rotations. During the encoding, a transformation is generated to overlap one protein on another. The result is given by the fitness function that uses dynamic programming to determine the equivalent.

In general, GEA is used to get optimal results in cases where the domain is too vast or there is a need to merge several techniques, because the solution is to difficult to achieve. It is also used when the parallelism is needed to get a solution.

2.2. Personalization over search engines

In the past few years the search engines have been evolving. Some years ago every search performed returns the exactly same result to any user, however nowadays that doesn't happen anymore. The results didn't become completely different they become adapted, with some commonality.

According to the search, Engine Journal of article "Dealing with personalized search [Infographic]", Google searches are shaped by four main factors, which are the location, the

15

language, the search history and the personal user settings. These are the main concerns of Google to try to get the results closer to the users.

Figure 6 - Four main factors that personalize searches on Google.

However there other factors for instance, regarding the location, some systems split the location in many levels such as country, city or metropolitan areas. In this case Google, when receive a search query about a restaurant the results are ordered by distance, letting the user know where they can find the nearest restaurant of that kind.

Some systems also differentiate the searches performed on web from the searches from mobile. Regarding to the Personal History, Bing started to adapt their results not only by previous searches but also by likes or shares on social network, such as Facebook or Twitter.

These two also helped on personalization of the results providing information about social connections, where the results get influenced by user's friends (Search Engine Land, 2013).

In 2004 Sugiyama, Hatano and Yoshikawa proposed a solution that tried to created a profile of the user without any effort from the user. They evaluate existing systems, which require the user to fulfill questionnaires or to rate the results provided, and decided to create a solution able to retrieve and rank the results without asking anything to him. This solution provides results to the user, and monitors the history based on clicks of the user, adapting the user profile on the fly. This way the next query performed on the system will take into account the current profile of the user. The results collected from the analysis of the system revealed that this approach is reliable to the personalization issue on search engines (Sugiyama K., et al., 2004).

Danny Sullivan also introduces three further factors to the Personalization. In first place he explains that in location it is possible to specify the city or metropolitan area, as a way to suggest results. Second factor lies on fact of searches done by desktops present different results

16

from mobile. Finally, he talks about the social connections, since he considers the content viewed by friends a way to customize the search on a search engines (Sullivan D., 2011).

Other example of Personalization usage is Yandex, the Russian search engine, where a personalized search is considered useful only if the results are related to a context and user interests collected through previous searches.

An example is Figure 7:

Figure 7 - Example of Yandex suggestions and results.

Figure 7 illustrates the fact that, if a user searches a lot about games, on his search he will see hints of games first, but if instead the user is interested in culture, then the suggestions will be cultural. However the formula used to calculate the relevance of the document to the search does not allow the use of factors such as personal information. The information used by the search engine to personalize search only takes into account the history and the clicks on results (Yandex about page, 2014).

In Personalization, Bing gave the first steps recently and opted to use a strategy based on two scenarios, which are the location and history. Besides that, they are trying to develop a new feature called adaptive search. This search consists on contextualizing the results, i.e., trying to understand what the user is looking for.

17

As an example, we have the “Australia” query. In this case you need to understand if the user is looking for information of a possible trip for holidays, or else if it is a movie buff interested on "Australia" movie (Bing Blogs, 2011; Sullivan D., 2011).

Focusing on the subject oriented to the concept of personalized images search, this is something new and with short information. With technological advances it becomes possible for the user to access an endless amount of pictures. However, there are some cons, because it is harder to find what we really want.

One possible approach is related to the image interpretation using its elements or colors, after that the image is categorized. Usually this concept becomes associated with systems that evolve through the similarities that are being found (Bissol S., et al., 2004).

Another approach is to contextualize the images using tags. This concept arises normally associated to social network or photo sharing sites and consists in assigning tags to images on publication. This will group the images into categories, allowing them to be available on other searches.

This method is quite popular since it only requires the system to collect the tags, building your base of knowledge (Borkar R., et al. ; Lerman K. and Plangprasopchok A., 2010).

2.2.1 Benefits

One of the main benefits of personalized search is related to the improvement of the quality of decisions made by consumers. According to Diehl, the internet reduced the cost of obtaining information however the capacity of dealing with that amount of information didn't evolve. This fact reduced the quality of consumers decision and provided a new path to be explored, developing new tools to help the consumer on decision process. The analysis of personalized results when taking decisions proved a positive correlation between personalization and the quality of the decisions. In the previous study it was concluded, that lower costs on search lead to no personalization, making consumers choose, options of lower quality (Diehl K., 2003).

Another study conducted by Haubl G. and Benedict G.C. also revealed that personalized searches reduces the quantity of options inspected (Benedict G. and Haubl G, 2012).

18

2.2.2 Disadvantages

A disadvantage that is always present when talking about personalization is the fact that personalization limits the user experience over the internet. According to Eli Pariser personalization lacks user possibility to meet new information (Pariser E., 2011). In 2002, Thomas W. Simpson said that "objectivity matters little when you know what you are looking for, but its lack, is problematic when you do not" (Simpson T., 2012).

Another disadvantage is the circulation of private information between companies. Nowadays, many systems gather the information about the users without their consent or knowledge, and that information is valuable for internet companies creating a privacy issue that doesn't benefit personalization.

Related to this work some researches were made and some achievements were reached in areas like elderly healthcare, where there has been some issues related to privacy and data protection (Costa A., et al., 2013).

The problem is connected to Ambient Assisted Living where the patients and doctors could be helped by technology. However the doctors have a deontological code to follow and respect, something that have to be kept by the ones who manage the system.

However there's no legislation on the computer scientific area that protects the information managed by the systems that are developed.

Something that also happens is that our information we share on internet or even information that is collected by our actions are gathered by companies that gets a huge profit when handling it.

19

3. Technologies

As software product, the solution of this project is trying to provide some technologies, each one trying to solve the problems that were described before. In this chapter it will be explained the technologies that were closer and the explanation of why it was chosen.

3.1. Programming Language

The programming language chosen to be used within this project was Java. This language was first thought to be used when a mobile application became part of a solution. This fact reduced largely the wide range of programming languages available to develop this solution. However this only narrows the development of the mobile application itself, the choice of the programming language for the web server were not affected however for consistency it was maintained the same on server side.

That was, one of the reasons although there were others, for example Java is a versatile and modular programming language which is good it is possible to develop some modules that can be adopted without changing all the structure of the solution. This fact helped to create a generic solution before adapt it to this particular case. Giving the possibility to cover several case studies James Gosling also developed java to class-based and object oriented being released in 1995 (Gosling J., McGilton H., 1996).

The ability of java to deal with concurrency, as it was said before CBR deals with huge amount of information which can cause a delay retrieving cases, with concurrency this problem can be circumvented (Goetz B., Peierls T., 2006).

Other fact was the highly usage by other users which provided some background with some frameworks that were needed and helped with the portability of the system.

Finally java is well-funded and supported which makes it a choice to long-term since it's not expected that with so many followers and so many frameworks it will disappear soon.

Although all these advantages it is clear that it's not perfect there are some problems such as memory consumption or performance (Jelovic D., 2012). However they were not a sufficient to change the decision of using Java.

20

3.2. Android

The platform used to develop the mobile application was Android. The choice was made due to the amount of users that everyday join this platform, over 1.5 million every day. The programming language that is based was also a factor to take into account, without forgetting the easy way to test and distribute.

When speaking about the mobile platform to achieve the results pretending, there were two possibilities IOS and Android. These two are the most common and more supported due to the amount of users that everyday requests new features (NetMarketShare, 2014). These origins the creation of new frameworks, everyday helps to achieve some base features, such as http request or image loading features bases on URL searches.

However between these two, there's one that stands out. That's Android.

Developed by one of the most reliable trademarks on market, Google inc., it was released as an open source project since 2008 and then a lot of users started to develop many other frameworks to interact with Android.

This open source philosophy helped the exponential growth that occurred in the last years but also became the system more robust and fault tolerant.

In sum, this framework is a reliable choice because it is used by many users which potentiate its growth and improvement.

Furthermore as an open source operating system, the cost of development of an app is much lower when compared with iOS.

Finally Android is based in Java which provides a huge adaptation and modularity to the software developed to run its devices.

This modularity enables our solution to be part of a single solution or for instance become a module within other softwares that look at this approach as a potential help to bigger problems.

21

3.3. Case-Based Reasoning - Decision

Analyzed those three techniques (ANN, CBR and GEA), it was possible to realize a bit in which consisted and what is their most common uses. Established the scope of this project as something that need to evolve, but without the need of parallelism or an optimal solution, it was clear from the beggining that genetic and evolutionary algorithms would not be the path to follow.

In addition, the use of previous cases is a factor that is intended to be exploited. This field suited well either in ANN, through the cases used on network training, either the RBC, with the experience through previous cases (Analide C., et al., 2012; Aamodt A. and Plaza E., 1994).

Since it is intended that the user searches are recorded, to be used later as a suggestion or filter on searches carried out, it becomes evident the need to go consuming a knowledge base, built on previous experience of the user and not so much in need to "teach" a structure to respond more effectively to the problem (Kolodsen J., 1992). Both paths would be viable, however the choice was made for the CBR technique, because it is intended that, during use, each user can check its knowledge base so it can "see" if some of the previous cases are closer to any search already done.

This is a case where the CBR can be used effectively to suggest, through the similarity of cases. Using this technique it also becomes easier to share the cases between users. Using ANN the system would have to add cases to train the network, and only then have improvements on search results (Analide C., et al, 2012).

Finally this project can contain exceptions to the rule, an example is the Australia query, explained before. Many users can run this query but they have not the same intent.

3.4. Bing API

This project has an objective related to the creation of an image search engine, so there is a need to work over an already developed framework that can provide results about a query. The creation of a system from scratch was not an option once there are some search engines already working, and has more than satisfying results. These results had to be reliable in order to provide to the system, sets of images that are connected to the subject searched. Analysing those factors and looking for a solution with huge acceptance and simplicity, Bing was the best choice (Rao L., 2012).

22

Bing is a search engine such as Google or Yahoo, from Microsoft, released in 2009. This search engine is growing since the beginning gaining lots of users and becoming the second choice of the search engines, only being overcome by Google. More than the fact of being used by lots of users is the fact that Bing is present in more than 30 languages which helps on personalization regarding the location of the users (Rao L., 2012).

As it was said before Bing is improving its search system trying to integrate personalization on the results, however they features started by the most common like location, search history, social connections and so on. This fact along with the possibility to filter the results only to images also helped to decide for the usage of Bing API.

Bing API is very simple once it is only necessary to make an http request to a specific address, and than convert the result to the objective we want. In this project for instance the results are converted on a set of urls to the images that are provided to an Image loader. That image loader uses the urls to get the images placing them on a view of the Android application. But there is also another feature given by Bing API that led to its choice, the feature "Related Search". This feature suggests some queries related to the issue searched that could be interesting to the user. Those related searches, are one of the steps taken into account to the personalization process of our solution once this project did not reject the techniques already used, this project is trying to evolve the personalization analyzing some new paths that were not explored yet.

Regarding the results, they are easily converted once they are returned in XML or JSON, which are notations commonly used. The usage of those notations helps on getting the information needed from images since there are lots of libraries that handle with notations, providing results in good time. The time spent on getting the results is important since one of the objectives of the project is the retrieval of results in good time.

Finally regarding the results they are used twice on this, they are used without any filter to give the user the possibility to face new information not focusing its knowledge only in things from its interest. And they are also used on personalization process being presented to the system that will determine some related content to be suggested to the user based on the query and results.

23

4. Implementation

In this chapter will be explained, the most relevant decisions made during the development of this solution and it will also be explained with more detail the architecture of the solution and its components. This chapter is also important to give a general overview about the workflow of all process and components such as the Android application, the CBR system of the server and the communication between them.

The Figure 8 provides an overview about this solution in regards three-tier architecture:

Figure 8 - Architecture of the Solution.

This architecture showed on Figure 8 clearly defines three layers as it was said before. Starting from the bottom, the Model layer is where the data base is located, this layer is responsible for providing and storing the information that will be manipulated by the system.

The middle layer named Controller is where the information given by the user is used by the system to find appropriate solution, based on the system itself and based on the information from the model layer. Basically this layer is the intermediary between the other two. In this system the user needs some information from the database (Model layer) however that

24

information cannot be given directly, there is a need for some computation before the information retrieval. This computation is performed on the controller layer.

Finally on the top there is the presentation layer, here is where the user of the platform can request information and where after the computation the information is shown.

With the objective of providing an overall perspective of the workflow of our solution, it was created a sequence diagram (Figure 9) that show how the user interacts with the system performing a query and how the servlet handles the information received, with the purpose of converting it into something the CBR system understands.

It is also explained how the CBR system communicates with the database and finally it is represented all the way back into the result presented to the user.

Figure 9 - Sequence Diagram of the Solution

4.1. BackOffice

The back office of this solution is a main component, because it represents all the logic behind the results that are presented to the user. These components contains the CBR System, which is responsible for receiving a case, convert it into the new structure, collect similar cases, choose a solution, revise the solution and finally retain the new case. In this CBR System it was introduced

25

two new techniques one for handling default data and another one for string comparison that will be explained better on the next chapters.

Still on the server there is a servlet responsible for the communication between the user request (Presentation Layer) and the CBR System.

Figure 10 - Sequence Diagram of the Server.

4.1.1 String Comparison

One of the big concerns about the problem presented on this work was the string comparison, because the problem itself is related to the query the user wants to be answered, the images are only the way to present the results. The string comparison is what will be used together with the handling default data technique to achieve the results closer to user's will. Once more it is important to clarify that no processing is applied to the image with the intention to enhance the search of similar results.

Many other systems deal with string fields however there's almost always a guide to be followed that narrows the search, for instance the fields have some possibilities to be chosen by the user. This is a good practice to enhance the speed of case retrieval and the similarity between the results. However not only the search on the knowledge base is narrowed, the input

26

and the possibility to enhance the query are also narrowed and that limits user experience within a system. Besides that, there are some cases where the free text input fields are needed. For example a search engine, we want to write what we are looking for and it is not possible to have search filters that match all the user needs. On those cases, string comparison become part of the solution with some metrics.

The metrics used within this project were Jaro-Winkler distance, Dice's coefficient and Levenshtein distance.

Jaro-Winkler

As it was said before the Jaro-Winkler distance is the measure that results from the comparison of two strings. It was extended from the Jaro Distance and its usage was planned for the detection of duplications. In this metric the two strings are more similar as higher as the distance between them. It is more suitable for short strings and its similarity is between 0 and 1, where 0 represents no similarity at all and 1 represents a perfect match.

To calculate the similarity there a formula expressed on two concepts, the number of matching characters (m) and half of the number of transpositions (t). This formula represents something simple, if the number of the matching characters between the two strings (s1 and s2) is 0, so the distance is also 0, otherwise:

(1)

The transpositions are taken into account since the order of the matching characters, are important to the matching measures.

With this process every character of first string (s1) are compared with every matching characters of second string(s2).

Levenshtein

Considered as valid in 1965 by Vladimir Levenshtein this measure is different from the previous. That's because Levenshtein distance calculates the differences between two words. This distance is measured looking into any insertion, deletion or substitution between first string (s1) and second string (s2).

27

It is recommended for situations where is intended to find short matches in long texts or situations where only a few differences are expected, such as spell checkers for example. When used for two long strings, the cost of calculating the distance is very high, almost the product of both string lengths.

Compared with Jaro-Winkler this distance respects the triangle inequality, this is the sum the Levenshtein distance between s1 and s2 is equal or lower than the sum of Levenshtein distance between s1, s3 and s2, s3, just like on a triangle where the sum of two sides are always greater than or equal to the remaining side.

The formula to calculate the distance is:

(2)

The result will always be at least the difference between sizes and at most the length of the biggest string.

Dice’s Coefficient

Dice’s Coefficient is a measure of similarity between two sets. This similarity is based in the number of common bigrams which are pairs of adjacent characters. This metric was developed by Thorvald Sorensen and Lee Raymond Dice and can be applied in several areas with different needs. For instance it can be used for sets, vector or this case for string.

The formula to calculate Dice’s Coefficient is :

28

4.1.2 Handling Default Data

The second concern about this project is related to the fact of handling default data. This project is intended to deal with different kinds of information or even deal with missing or incomplete information. But the objective is create a solution, to retrieve a valid solution or those cases too.

To become this solution reliable it was applied two new concepts, the DoC and the QoI. The DoC is the confidence applied to each term of extension of a predicate which helps on the normalization of the cases and on the improvement of speed of cases retrieval.

This process of normalization based on the calculation of DoC can be expressed on a new CBR cycle.

Figure 11 - Adapted CBR cycle taking into consideration the normalization phase.

This new cycle introduced a new phase called normalization where the new case introduced to the system is converted into the new structure to enhance the quality of the case retrieval. This conversion improves the quality of the similarity measures and makes all the system operate with normalized cases making the retain phase a process that add an already normalized case to the knowledge base. The DoC is also used to calculate the similarity between cases. This approach was previously used on real cases with good results, it was applied on

29

schizophrenia diagnosis (Cardoso L., et al., 2013) and asphalt pavement modeling (Neves J., 2012).

To fulfill this implementation some changes need to be performed related to the knowledge representation and reasoning.

Those changes use concepts of Logic Programming (LP) paradigm that were previously used for other purposes such as Model Theory (Kakas A., et al., 1998; Gelfond M. and Lifschitz V., 1988; Pereira L. and Anh H., 2009) and Proof Theory (Neves J., 1984; Neves J., et al., 2007).

In 1984 J. Neves created a new way to present knowledge representation and reasoning, which was used in this project. This new new representation is ELP (Extended Logic Program) which is a proof theoretical approach using an extension to an LP language. This extension is composed by a set of clauses in the form.

(4) (5) In the previous formulas ? is a domain atom representing falsity,pi,gj and p are classic

literal, can be a positive atom or a negative atom, if preceded of negation sign . Following this kind of representation a program is also associated to a set of abducibles (Kakas A., et al., 1998; Pereira L. and Anh H., 2009). Those abducibles are exceptions to the extensions of predicates.

Finally the purpose of this representation is to improve the efficiency of search mechanisms helping on the solution of several problems.

The decision making processes still growing and some studies were made related to the qualitative models and qualitative reasoning in Database theory and in Artificial Intelligence research (Halpern J., 2005; Kovalerchuck B. and Resconi G., 2010).

However specifically related to the knowledge representation and reasoning in LP, there were some other works that presented some promising results (Lucas P., 2003; Machado J., et al., 2010).

On those works was presented the QoI(Quality of Information) measure, that were related to the extension of predicate i and gives a truth-value between 0 and 1. Where 1 represents

30

known information (positive) or false information (negative). When the information is unknown, the QoI can be measure by:

(6)

in situations where there is not set of values to be taken by the extension of predicates. In this cases N denotes the cardinality of the set of terms or clauses of extension of predicate.

When there's a set of values to be taken the QOI will consider the set of abducibles and use its cardinality. If the set of abducibles is disjoint the QOI is given by:

(7)

otherwise it will be handled a Card-combination subset with Card elements ( ):

(8)

Another element taken into account is the importance given each attribute of the predicate. It is assumed that the weights of all attributes are normalized

(9) with all the previous elements it is now possible to calculate a score for each predicate given by:

31

this way it is possible to create a universe of discourse based on the logic programs with the information about the problem. The result are productions of the type:

(11) The DoC is given by representing the confidence in a particular term of the extension of a predicate.

Summarizing the Universe of discourse can be represented by the extension of the predicates:

(12) but this new concept does not simply changes the structure of the cases, it also changes the way of calculation of the similarity between them.

So analyzing each case there's an argument for each attribute of the case.

This argument can have several types of values, it can be unknown, member of a set, may be in the scope of an interval or can qualify an observation. The arguments compose a case, that is mapped from a clause.

For instance we can consider a clause where the first argument value fits within the interval [35,65] which the interval [0,100] as a domain. The second argument is an unknown value () and its domain ranges the interval [0,20]. And finally the third argument is the certain value 1 within the interval [0,4].

Considering the case data as the predicate extension:

(13) One may have the following representation:

(14)

32

The following step after setting the clauses or terms of the extension of the predicates is the transformation of the arguments into continuous intervals, using its correspondent domains. The first argument is already an interval, so this step is only applied for the following arguments. The second argument has an unknown value so it's correspondent interval matches it's domain. The third argument is a certain value so its interval is within its value ([1,1]).

Finally the 0.85 value represents the QoI of the term . This step able the determination of the attributes values ranges for as explained next:

(15)

At this step it is possible to calculate the Degree of Confidence of each attribute. For instance the DoC of the first argument ([35,65]) will demote the confidence that the attribute fits the interval [35,65]. But first a normalization where every domain have to fit a common interval [0,1].

The normalization procedure is according to the normalization

(16)

33

Once the domains are normalized the equation is applied to each attribute, where is the range of normalized interval.

Figure 12 - Evaluation of the Degree of Confidence.

Below, it is shown the representation of the extensions of predicates within the universe of discourse. At this stage all the arguments are nominal and represent the confidence that attribute fit inside the intervals referred previously.

(17)

With this representation it is now possible to represent all cases of the knowledge based in a graphical form with Cartesian plane in terms of QoI and DoC of each case.

34

To select, the best solution of new case is represented in the graphic according to its DoC and QoI and then it is selected a suitable duster which is measured according to a similarity measure given in terms of the modulus represented below. In the following figure it is represented the duster (red circle) and the new case (red square).

Figure 14 - Case base represented in the Cartesian plane.

4.2. Data Model

Starting from the lower level of this solution, we will explain the data model. The data model represents the structure of the database that will be used by the CBR system. As it was explained on chapter 2 the CBR system has a repository where the cases are stored. This repository is also used by the CBR system to find the best solution to a new case. So the database should follow a structure that enables the system to retrieve the result that is intended to be on this project and, at the same time follow some guidelines from the CBR system. For that reason the data model was one of the components that were more discussed, because it has to respect some requisites and it has to be adapted, since our system is supposed to grow and new features could appear.

One of the requisites was to respect the structure of the cases of CBR systems that was normally used. When studying the concept of the CBR, the structure that was mostly used to explain the case structure, was a structure where is defined the problem, the solution to the problem and a justification to the solution presented (Analide C., et al., 2012).